Introduction and Context

Transfer learning and domain adaptation are key techniques in machine learning that let a model reuse knowledge gained on one task or domain to improve performance on a different but related one. Transfer learning typically involves taking a pre-trained model and fine-tuning it for a new task, while domain adaptation focuses on adapting a model trained on one domain (the source domain) to perform well on a different domain (the target domain). These techniques have become increasingly important because they address data scarcity and reduce the cost of training models from scratch.

The development of transfer learning and domain adaptation has been driven by the need to make machine learning more practical and scalable. Traditional machine learning approaches often require large amounts of labeled data, which can be expensive and time-consuming to collect. Transfer learning and domain adaptation emerged as solutions to this problem, allowing models to benefit from pre-existing knowledge and adapt to new environments with minimal additional training. Key milestones in the development of these techniques include the introduction of pre-trained models like VGGNet and ResNet in computer vision, and BERT and GPT in natural language processing (NLP). These models have demonstrated the power of transfer learning and domain adaptation in various applications, from image classification to text generation.

Core Concepts and Fundamentals

The fundamental principle behind transfer learning is the idea that features learned from one task can be useful for another. For example, a convolutional neural network (CNN) trained on a large dataset like ImageNet learns to recognize low-level features (e.g., edges and textures) and high-level features (e.g., shapes and objects) that are relevant to many other visual tasks. By using these pre-trained features as a starting point, a model can be fine-tuned on a smaller, task-specific dataset, leading to better performance and faster convergence.

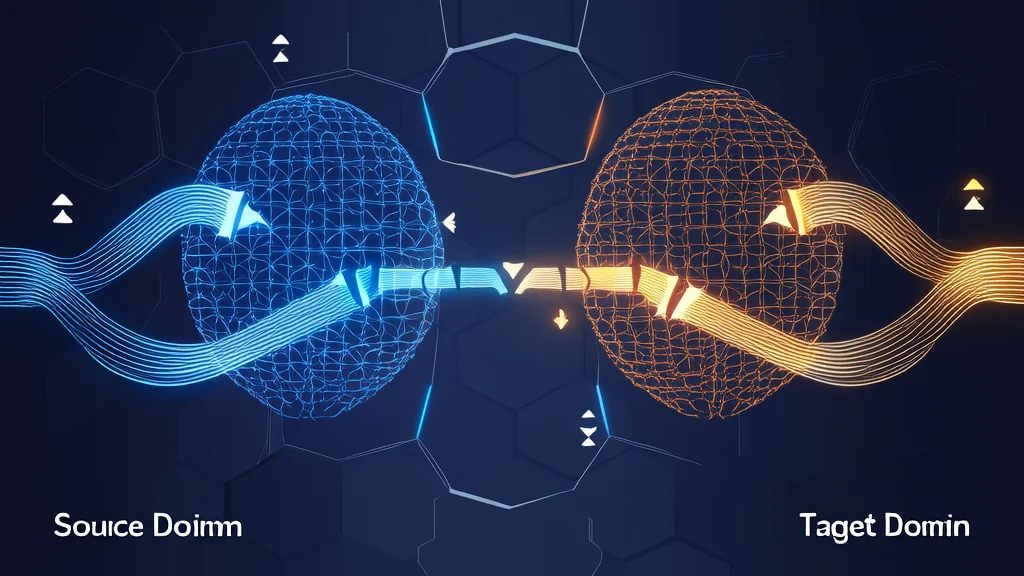

Domain adaptation, on the other hand, addresses the issue of domain shift, where the distribution of the data in the source domain differs from that in the target domain. The goal is to minimize the discrepancy between the two domains so that a model trained on the source domain can generalize well to the target domain. This is achieved through various techniques, such as feature alignment, adversarial training, and re-weighting of samples.

Key mathematical concepts in transfer learning and domain adaptation include feature extraction, discrepancy measures, and optimization. Feature extraction involves learning a representation of the data that captures the essential characteristics for a given task. Discrepancy measures, such as Maximum Mean Discrepancy (MMD) and Jensen-Shannon Divergence (JSD), quantify the difference between the distributions of the source and target domains. Optimization techniques, such as gradient descent, are then used to minimize the chosen discrepancy, typically alongside the task loss, so that the source and target feature spaces align.
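To make the two measures concrete, here is a minimal sketch in plain Python. It assumes a Gaussian (RBF) kernel with an arbitrary bandwidth `gamma` for MMD and uses the simple biased estimator. The function names and the toy samples are illustrative choices, not something taken from a particular library.

```python
import math

def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def mmd_squared(source, target, gamma=1.0):
    """Biased estimate of the squared MMD between two samples of feature vectors."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))
    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))

def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    def kl(a, b):
        # Terms with a_i == 0 contribute nothing to the KL sum.
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)
    mixture = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, mixture) + 0.5 * kl(q, mixture)

# Toy feature samples (made up): the second set is the first shifted by (2, 2).
source = [[0.0, 0.0], [0.1, -0.1], [-0.1, 0.1]]
shifted = [[2.0, 2.0], [2.1, 1.9], [1.9, 2.1]]

print("MMD^2, same sample:   ", mmd_squared(source, source))
print("MMD^2, shifted sample:", mmd_squared(source, shifted))
print("JSD, disjoint support:", js_divergence([1.0, 0.0], [0.0, 1.0]))
```

Both quantities are zero when the two inputs coincide and grow as the distributions move apart. With the natural logarithm, JSD is bounded above by ln 2, which is reached when the two distributions have disjoint support.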

Transfer learning and domain adaptation differ from traditional supervised learning in that they do not rely solely on labeled data from the target task or domain. Instead, they leverage the knowledge and features learned from a related task or domain, making them more efficient and effective in scenarios with limited labeled data.

Technical Architecture and Mechanics

Transfer learning typically involves a two-step process: pre-training and fine-tuning. In the pre-training phase, a model is trained on a large, general dataset, such as ImageNet for image classification or Wikipedia for NLP. During this phase, the model learns features that are broadly applicable across related tasks rather than tied to a single one. In the fine-tuning phase, the pre-trained model is adapted to a specific task by training on a smaller, task-specific dataset. This can involve freezing some layers of the pre-trained model and updating only the weights of the final layers, or fine-tuning all layers with a smaller learning rate.
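The "freeze the backbone, train only the final layer" option can be sketched without any deep learning framework. In the toy example below, `FROZEN_BACKBONE` stands in for pre-trained weights (the values are made up), and a logistic-regression head is trained on top of its outputs with plain gradient descent. The dataset, learning rate, and epoch count are all assumptions chosen for illustration.

```python
import math
import random

random.seed(0)

# Stand-in for a pre-trained backbone: a fixed 2 -> 3 linear map followed by tanh.
# In practice these weights would come from pre-training; here they are made up.
FROZEN_BACKBONE = [[0.8, -0.3], [0.2, 0.9], [-0.5, 0.4]]

def extract_features(x):
    """Frozen feature extractor: its weights are never updated during fine-tuning."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_BACKBONE]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(head_w, head_b, x):
    """Probability of class 1 from the trainable head on top of frozen features."""
    return sigmoid(sum(w * f for w, f in zip(head_w, extract_features(x))) + head_b)

def mean_loss(head_w, head_b, data):
    """Average binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(head_w, head_b, x), 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)

def fine_tune_head(data, lr=0.5, epochs=200):
    """Train only the new task-specific head with full-batch gradient descent."""
    head_w, head_b = [0.0, 0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0, 0.0], 0.0
        for x, y in data:
            feats = extract_features(x)
            err = predict(head_w, head_b, x) - y  # gradient of the loss w.r.t. the logit
            grad_w = [g + err * f for g, f in zip(grad_w, feats)]
            grad_b += err
        head_w = [w - lr * g / len(data) for w, g in zip(head_w, grad_w)]
        head_b -= lr * grad_b / len(data)
    return head_w, head_b

# Small synthetic task-specific dataset: the label is 1 when x0 + x1 > 0.
data = []
for _ in range(40):
    x = [random.uniform(-1, 1), random.uniform(-1, 1)]
    data.append((x, 1 if x[0] + x[1] > 0 else 0))

loss_before = mean_loss([0.0, 0.0, 0.0], 0.0, data)
head_w, head_b = fine_tune_head(data)
loss_after = mean_loss(head_w, head_b, data)
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

Only `head_w` and `head_b` change during training. Fine-tuning all layers would additionally update the backbone weights, usually with a smaller learning rate so the pre-trained features are not overwritten too quickly.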

For instance, in a transformer model like BERT, the pre-training phase involves masked language modeling and next sentence prediction tasks, which help the model learn a rich representation of the input text. During fine-tuning, the model is adapted to a specific NLP task, such as sentiment analysis or named entity recognition, by adding a task-specific output layer and training on a labeled dataset for that task.

Domain adaptation, on the other hand, often involves a more complex architecture designed to handle the domain shift. One common approach is to use a feature extractor (e.g., a CNN or transformer) to map the input data from both the source and target domains into a shared feature space. A domain discriminator is then used to distinguish between the features from the source and target domains. The feature extractor is trained to maximize the confusion of the domain discriminator, effectively aligning the feature distributions of the two domains. This can be achieved using adversarial training, where the feature extractor and domain discriminator are trained in an adversarial manner, similar to the GAN (Generative Adversarial Network) framework.
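One common way to write this adversarial setup down is as a saddle-point objective. The notation below is an assumption introduced for illustration: $\theta_f$, $\theta_y$, and $\theta_d$ are the parameters of the feature extractor, a label predictor trained on the labeled source data, and the domain discriminator; $\mathcal{L}_y$ is the task loss on source samples; $\mathcal{L}_d$ is the domain classification loss on samples from both domains; and $\lambda > 0$ trades the two off.

$$
\min_{\theta_f,\,\theta_y}\ \max_{\theta_d}\quad \mathcal{L}_y(\theta_f, \theta_y) \;-\; \lambda\, \mathcal{L}_d(\theta_f, \theta_d)
$$

Read this way, the discriminator is updated to lower $\mathcal{L}_d$ (it tries to tell the domains apart), while the feature extractor is updated to raise $\mathcal{L}_d$ and lower $\mathcal{L}_y$ at the same time, which pushes it toward features that are useful for the task but uninformative about the domain.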

Another approach to domain adaptation is to use re-weighting techniques, where the importance of each sample in the source domain is adjusted based on its similarity to the target domain. This can be done using importance sampling or re-weighting schemes based on the MMD or JSD between the source and target distributions. The re-weighted samples are then used to train the model, ensuring that it is more focused on the parts of the source domain that are most relevant to the target domain.
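As a rough illustration of importance re-weighting, the sketch below uses a deliberately simple toy setup: one-dimensional source and target distributions that are both Gaussian with known parameters, so the density ratio can be computed exactly. In real problems the ratio has to be estimated from data; the distributions, sample size, and function names here are assumptions made for the example.

```python
import math
import random

random.seed(0)

def gaussian_pdf(x, mean, std=1.0):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

# Assumed toy setup: source ~ N(0, 1), target ~ N(1, 1), both densities known.
SOURCE_MEAN, TARGET_MEAN = 0.0, 1.0
source_samples = [random.gauss(SOURCE_MEAN, 1.0) for _ in range(5000)]

def importance_weight(x):
    """Density ratio p_target(x) / p_source(x) for one source sample."""
    return gaussian_pdf(x, TARGET_MEAN) / gaussian_pdf(x, SOURCE_MEAN)

weights = [importance_weight(x) for x in source_samples]

# Plain average reflects the source domain; the self-normalized weighted
# average estimates the same statistic under the target domain.
unweighted_mean = sum(source_samples) / len(source_samples)
weighted_mean = sum(w * x for w, x in zip(weights, source_samples)) / sum(weights)
print(f"unweighted: {unweighted_mean:.2f}, re-weighted: {weighted_mean:.2f}")
```

Source samples that look like target data receive weights above 1 and dominate the weighted average. The same weights can multiply per-sample losses during training so that the model concentrates on the target-like region of the source domain.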

Key design decisions in transfer learning and domain adaptation include the choice of pre-trained model, the amount of fine-tuning, and the method of domain alignment. For example, in computer vision, models like VGGNet and ResNet are popular choices for pre-training due to their strong performance on ImageNet. In NLP, BERT and GPT are widely used for their ability to capture contextual information. The amount of fine-tuning depends on the size of the task-specific dataset and the similarity between the pre-training and fine-tuning tasks. Domain alignment methods, such as adversarial training and re-weighting, are chosen based on the nature of the domain shift and the available computational resources.

Advanced Techniques and Variations

Modern variations of transfer learning and domain adaptation have been developed to address specific challenges and improve performance. One such variation is multi-task learning, where a single model is trained to perform multiple related tasks simultaneously. This can lead to better generalization and more efficient use of data. For example, a model trained on multiple NLP tasks, such as sentiment analysis, question answering, and text summarization, can learn a more robust and versatile representation of the input text.

Another advanced technique is unsupervised domain adaptation, where the target domain has no labeled data. This is particularly challenging because the model must learn to adapt without any direct supervision. One approach to unsupervised domain adaptation is to use self-supervised learning, where the model is trained on pretext tasks, such as predicting the rotation of an image or the next word in a sentence. These pretext tasks help the model learn useful features that can be transferred to the target domain.
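The rotation pretext task mentioned above needs no human labels, because the label is generated from the transformation itself. A minimal sketch, assuming images are represented as small 2D lists and using hypothetical helper names:

```python
import random

def rotate90(image, k):
    """Return a copy of a 2D list-of-lists image rotated clockwise by k * 90 degrees."""
    rotated = [list(row) for row in image]
    for _ in range(k % 4):
        # Reverse the row order, then transpose: one clockwise quarter turn.
        rotated = [list(row) for row in zip(*rotated[::-1])]
    return rotated

def make_rotation_examples(images, rng):
    """Turn unlabeled images into (rotated_image, rotation_label) training pairs."""
    examples = []
    for image in images:
        k = rng.randrange(4)  # label in {0, 1, 2, 3} for 0, 90, 180, 270 degrees
        examples.append((rotate90(image, k), k))
    return examples

rng = random.Random(0)
unlabeled = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]  # two tiny made-up "images"
examples = make_rotation_examples(unlabeled, rng)
for rotated, label in examples:
    print(label, rotated)
```

A classifier trained to predict `rotation_label` from `rotated_image` can be fit on unlabeled target-domain images, and its feature extractor can then be shared with the main task.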

Recent research has also focused on few-shot and zero-shot domain adaptation, where the target domain has very few or no labeled examples. Few-shot domain adaptation leverages meta-learning techniques, such as MAML (Model-Agnostic Meta-Learning), to learn a model that can quickly adapt to new tasks with only a few examples. Zero-shot domain adaptation, on the other hand, relies on semantic embeddings and prior knowledge to transfer knowledge from the source domain to the target domain without any labeled data.
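For reference, the MAML update can be summarized in two steps. The symbols are the conventional ones and are assumptions as far as this text is concerned: $f_\theta$ is the model, $\mathcal{T}_i$ is a sampled task with loss $\mathcal{L}_{\mathcal{T}_i}$, and $\alpha$ and $\beta$ are the inner and outer learning rates.

$$
\theta_i' = \theta - \alpha\, \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta),
\qquad
\theta \leftarrow \theta - \beta\, \nabla_\theta \sum_{i} \mathcal{L}_{\mathcal{T}_i}\!\left(f_{\theta_i'}\right)
$$

The inner step adapts the shared initialization $\theta$ to each task using a few examples. The outer step then adjusts $\theta$ so that the adapted parameters $\theta_i'$ perform well, which is what makes the initialization quick to adapt to a new task or domain.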

State-of-the-art implementations of transfer learning and domain adaptation include models like T5 (Text-to-Text Transfer Transformer) and CLIP (Contrastive Language-Image Pre-training). T5 is a unified framework for NLP tasks that uses a transformer architecture and is pre-trained on a large corpus of text. It can be fine-tuned for a wide range of NLP tasks, including translation, summarization, and question answering. CLIP, on the other hand, is a multimodal model that learns to associate images and text by training on a large dataset of image-text pairs. It can be used for zero-shot image classification and other cross-modal tasks.
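The zero-shot classification step in a CLIP-style model can be sketched as a similarity lookup: embed the image, embed one text prompt per candidate label, and pick the label whose text embedding is closest. The example below does not call any real encoder. The three-dimensional embeddings are made up, and the temperature value is illustrative only.

```python
import math

def normalize(v):
    """Scale a vector to unit length so that dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def zero_shot_classify(image_embedding, text_embeddings, temperature=0.07):
    """Softmax over temperature-scaled cosine similarities between an image and label prompts."""
    img = normalize(image_embedding)
    logits = {}
    for label, emb in text_embeddings.items():
        txt = normalize(emb)
        logits[label] = sum(a * b for a, b in zip(img, txt)) / temperature
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(value - max_logit) for label, value in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Made-up embeddings standing in for the outputs of the image and text encoders.
text_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.2, 0.1]

probs = zero_shot_classify(image_embedding, text_embeddings)
best_label = max(probs, key=probs.get)
print(best_label, round(probs[best_label], 3))
```

No classifier is trained for the new label set: adding a class only requires writing a new prompt and embedding it, which is what makes the approach zero-shot.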

Practical Applications and Use Cases

Transfer learning and domain adaptation have found widespread application in various fields, including computer vision, natural language processing, and speech recognition. In computer vision, pre-trained models like VGGNet and ResNet are commonly used for image classification, object detection, and segmentation. For example, a model pre-trained on ImageNet can be fine-tuned on a smaller dataset of medical images to detect specific diseases, such as cancer or pneumonia. This approach is particularly useful in medical imaging, where labeled data is often scarce and expensive to obtain.

In natural language processing, models like BERT and GPT are widely used for a variety of tasks, including sentiment analysis, text classification, and machine translation. For instance, BERT can be fine-tuned on a dataset of customer reviews to classify the sentiment of the reviews as positive, negative, or neutral. This is valuable for businesses that want to understand customer feedback and improve their products or services. Similarly, GPT can be fine-tuned on a dataset of legal documents to generate summaries or draft legal briefs, saving time and effort for legal professionals.

Transfer learning and domain adaptation are also used in speech processing. Models like Wav2Vec and HuBERT are pre-trained on large datasets of unlabeled audio and can be fine-tuned for tasks such as speech recognition, speaker identification, and emotion recognition. For example, a model pre-trained on a large dataset of English speech can be fine-tuned on a smaller dataset of Spanish speech to improve its performance on Spanish speech recognition tasks.

These techniques suit such applications because they reuse pre-existing knowledge and adapt to new tasks with comparatively little additional training. When the source and target are sufficiently related, this tends to yield better performance, faster convergence, and more efficient use of data, which is why transfer learning and domain adaptation have become standard tools in applied machine learning.

Technical Challenges and Limitations

Despite their benefits, transfer learning and domain adaptation face several technical challenges and limitations. One of the main challenges is the selection of an appropriate pre-trained model. The choice of pre-trained model can significantly impact the performance of the fine-tuned model, and there is no one-size-fits-all solution. The pre-trained model should be selected based on the similarity between the pre-training and fine-tuning tasks, as well as the availability of computational resources.

Another challenge is the computational requirements of these techniques. Pre-training a large model on a massive dataset requires significant computational resources, including powerful GPUs and large amounts of memory. Fine-tuning and domain adaptation also require substantial computational power, especially when dealing with large and complex models. This can be a barrier for researchers and practitioners with limited access to high-performance computing infrastructure.

Scalability is another issue, particularly in the context of domain adaptation. As the number of domains and tasks increases, the complexity of the adaptation process grows, making it difficult to scale to large and diverse datasets. Additionally, the performance of domain adaptation techniques can degrade if the domain shift is too large or if the target domain is very different from the source domain. This is known as the "domain gap" problem, and it can be challenging to overcome, especially in unsupervised and few-shot settings.

Research directions addressing these challenges include the development of more efficient pre-training and fine-tuning algorithms, the use of model compression and quantization techniques to reduce computational requirements, and the exploration of novel domain adaptation methods that can handle large domain gaps and diverse datasets. For example, recent work on contrastive learning and self-supervised learning has shown promise in improving the efficiency and effectiveness of transfer learning and domain adaptation.

Future Developments and Research Directions

Emerging trends in transfer learning and domain adaptation include the integration of these techniques with other areas of AI, such as reinforcement learning and multimodal learning. For example, transfer learning can be used to initialize the policy in a reinforcement learning agent, allowing it to learn more efficiently and effectively. Multimodal learning, which involves learning from multiple types of data (e.g., images, text, and audio), can benefit from transfer learning and domain adaptation by leveraging pre-trained models from different modalities to improve performance on cross-modal tasks.

Active research directions in this area include the development of more robust and generalizable models that can adapt to a wide range of tasks and domains. This includes the exploration of meta-learning techniques, which aim to learn how to learn, and the use of adaptive and dynamic architectures that can adjust to new tasks and environments. Another area of active research is the development of explainable and interpretable models, which can provide insights into the decision-making process and help build trust in AI systems.

Potential breakthroughs on the horizon include the development of universal models that can perform a wide range of tasks across different domains, and the creation of more efficient and scalable training algorithms that can handle large and diverse datasets. These advancements will likely lead to more practical and effective AI systems that can be deployed in a variety of real-world applications, from healthcare and finance to autonomous vehicles and robotics.

From an industry perspective, the adoption of transfer learning and domain adaptation is expected to grow as more organizations recognize the benefits of these techniques in terms of efficiency, performance, and cost. From an academic perspective, there is a continued focus on advancing the theoretical foundations of these techniques and exploring their applications in new and emerging areas of AI. Overall, the future of transfer learning and domain adaptation looks promising, with the potential to drive significant advancements in the field of AI and beyond.