Introduction and Context

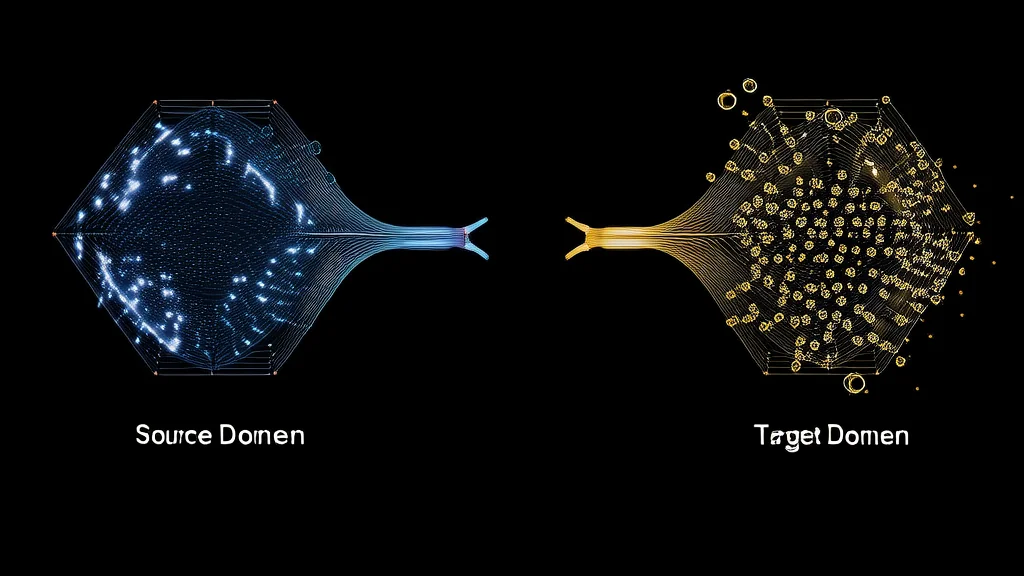

Transfer learning and domain adaptation are key techniques in machine learning, particularly deep learning, that let a model reuse knowledge learned on one task or domain for another. Transfer learning typically means taking a model pre-trained on a large dataset (e.g., ImageNet for image classification) and fine-tuning it on a smaller, related dataset. Domain adaptation, a subset of transfer learning, focuses on adapting a model trained on one domain (the source domain) to perform well on a different but related domain (the target domain). These techniques help address the data scarcity and computational cost that often arise in real-world applications.

Transfer learning and domain adaptation have changed how machine learning problems are approached by significantly reducing the need for large labeled datasets and long training runs. The development of these techniques can be traced back to at least the early 2000s. A major milestone came in 2012 with AlexNet, which showed how effective deep convolutional networks trained on ImageNet could be for image recognition; follow-up work found that the features learned by such networks transfer well to other vision tasks. Since then, transfer learning and domain adaptation have become fundamental tools in the AI researcher's toolkit, enabling the application of deep learning to a wide range of domains, from natural language processing (NLP) to computer vision.

Core Concepts and Fundamentals

At their core, transfer learning and domain adaptation are based on the principle that knowledge learned from one task or domain can be transferred to another. This is rooted in the idea that many tasks share common underlying features and patterns. For example, a model trained to recognize objects in images can learn general visual features like edges and textures, which are useful for other image-related tasks.

Two key mechanisms in transfer learning are feature extraction, where the pre-trained model is used as a fixed function that maps input data to a high-level feature space, and fine-tuning, where some or all of the pre-trained weights are trained further on the new task. In domain adaptation, the focus is on aligning the feature distributions of the source and target domains, often using techniques like adversarial training or domain confusion.
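To make the feature-extraction idea concrete, here is a minimal NumPy sketch. It is only an illustration under stated assumptions: `W_frozen` is a random projection standing in for a real pre-trained network, and the toy data, `extract_features`, and `train_head` are hypothetical names introduced for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pre-trained feature extractor: a fixed random projection plus
# ReLU. In a real setting this would be the body of a pre-trained network.
W_frozen = rng.normal(size=(20, 64)) / np.sqrt(20)

def extract_features(x):
    """Map raw inputs to the feature space; W_frozen is never updated."""
    return np.maximum(x @ W_frozen, 0.0)

def train_head(features, labels, lr=0.1, steps=200):
    """Fit a logistic-regression head on fixed features with gradient descent."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        grad_logits = (probs - labels) / len(labels)
        w -= lr * (features.T @ grad_logits)
        b -= lr * grad_logits.sum()
    return w, b

# Toy target task: 200 examples, binary label from the sign of the first input.
x_target = rng.normal(size=(200, 20))
y_target = (x_target[:, 0] > 0).astype(float)

W_before = W_frozen.copy()
feats = extract_features(x_target)
w_head, b_head = train_head(feats, y_target)
```

Only the head parameters `w_head` and `b_head` change during training; the extractor weights are identical before and after, which is what separates feature extraction from fine-tuning.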

The core components of transfer learning and domain adaptation include the pre-trained model, the source and target datasets, and the adaptation method. The pre-trained model serves as the starting point, providing a rich set of learned features. The source dataset is used to train the initial model, while the target dataset is the new, potentially smaller dataset for which we want to adapt the model. The adaptation method, such as fine-tuning or domain confusion, is used to adjust the model to the new task or domain.

Transfer learning and domain adaptation differ from traditional supervised learning in that they reuse existing knowledge rather than training a model from scratch. This makes learning more efficient and effective, especially when data is limited. By analogy, transfer learning is like a student who has already learned basic arithmetic and can therefore grasp more advanced math quickly, rather than starting from zero.

Technical Architecture and Mechanics

Transfer learning and domain adaptation involve several key steps and architectural components. The process typically starts with a pre-trained model, which is a neural network that has been trained on a large, diverse dataset. For example, in image classification, a popular pre-trained model is VGG16, which has been trained on the ImageNet dataset. The architecture of VGG16 consists of 13 convolutional layers followed by 3 fully connected layers, which together extract and combine features from the input images.
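To get a sense of the size of such a model, the parameter count of VGG16 can be worked out by hand. The sketch below assumes the standard configuration (3x3 convolutions with biases, a 224x224 input that reaches the first fully connected layer as a 512x7x7 tensor, and 1000 output classes); the helper names are introduced here for illustration only.

```python
# (in_channels, out_channels) for the 13 convolutional layers, all 3x3 kernels.
CONV_LAYERS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]
# (in_features, out_features) for the 3 fully connected layers.
FC_LAYERS = [(512 * 7 * 7, 4096), (4096, 4096), (4096, 1000)]

def conv_params(c_in, c_out, kernel=3):
    """Weights plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + c_out

def fc_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out

conv_total = sum(conv_params(c_in, c_out) for c_in, c_out in CONV_LAYERS)
fc_total = sum(fc_params(n_in, n_out) for n_in, n_out in FC_LAYERS)
total = conv_total + fc_total

print(f"{total:,}")  # 138,357,544
```

Under these assumptions the fully connected layers hold roughly 89% of the parameters, so most of the model's weights sit in the layers closest to the output.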

Once the pre-trained model is selected, the next step is to fine-tune it on the target dataset. Fine-tuning involves unfreezing some of the layers of the pre-trained model and continuing the training process on the new dataset. For instance, in a VGG16 model, the last few fully connected layers might be unfrozen, while the earlier convolutional layers remain frozen. This allows the model to retain the general features it has learned from the source dataset while adapting to the specific characteristics of the target dataset.
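The freezing logic described above can be sketched as a training step that skips frozen layers. This is a simplified illustration rather than any framework's actual API: the layer names, the `trainable` flags, and the all-ones gradients are hypothetical placeholders.

```python
import numpy as np

def sgd_step(params, grads, trainable, lr=0.01):
    """Apply one SGD update, skipping every layer marked as frozen."""
    for name in params:
        if trainable[name]:
            params[name] = params[name] - lr * grads[name]

rng = np.random.default_rng(0)

# Toy stand-ins for a pre-trained model's layers.
params = {
    "conv_block": rng.normal(size=(3, 3)),
    "fc_1": rng.normal(size=(3, 2)),
    "fc_2": rng.normal(size=(2,)),
}
# Early layers stay frozen; the last fully connected layers are unfrozen.
trainable = {"conv_block": False, "fc_1": True, "fc_2": True}

# Placeholder gradients; in practice these come from backpropagation.
grads = {name: np.ones_like(value) for name, value in params.items()}
before = {name: value.copy() for name, value in params.items()}

sgd_step(params, grads, trainable)
```

After the step, `params["conv_block"]` is unchanged while the two fully connected layers have moved, which mirrors how the general features are retained while the later layers adapt to the target dataset.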

In domain adaptation, the goal is to align the feature distributions between the source and target domains. One common approach is to use adversarial training, where a domain discriminator is introduced to distinguish between the source and target features. The main model is then trained to fool the domain discriminator, effectively making the features indistinguishable. For example, in a domain adaptation setup, the feature extractor (e.g., a ResNet model) is shared between the source and target domains, and the domain discriminator is a separate network that tries to classify the features as coming from either the source or target domain.
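The opposing updates in adversarial training can be sketched with a linear feature extractor and a logistic domain discriminator. This is a minimal sketch under simplifying assumptions: the task loss is omitted, the extractor is a single matrix `W` instead of a ResNet, and the names `adversarial_step`, `lam`, and the toy data are introduced here for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def domain_loss(X, d, W, v):
    """Binary cross-entropy of the discriminator on features X @ W."""
    p = sigmoid((X @ W) @ v)
    return -np.mean(d * np.log(p) + (1 - d) * np.log(1 - p))

def adversarial_step(X, d, W, v, lr=0.05, lam=1.0):
    """One update: the discriminator descends, the extractor ascends."""
    feats = X @ W                        # shared feature extractor (linear here)
    p = sigmoid(feats @ v)               # predicted probability of "target"
    grad_logits = (p - d) / len(d)       # d(loss) / d(logits)
    grad_v = feats.T @ grad_logits       # gradient for the discriminator
    grad_W = X.T @ np.outer(grad_logits, v)  # gradient for the extractor
    v_new = v - lr * grad_v              # discriminator minimizes the domain loss
    W_new = W - lr * (-lam * grad_W)     # reversed gradient: extractor maximizes it
    return W_new, v_new

rng = np.random.default_rng(0)
X_source = rng.normal(loc=0.0, size=(50, 8))
X_target = rng.normal(loc=1.0, size=(50, 8))
X = np.vstack([X_source, X_target])
d = np.concatenate([np.zeros(50), np.ones(50)])  # 0 = source, 1 = target

W = 0.1 * rng.normal(size=(8, 4))
v = rng.normal(size=4)
W_new, v_new = adversarial_step(X, d, W, v)
```

The sign flip on `grad_W` is the whole trick: the same domain loss that the discriminator minimizes is maximized by the feature extractor, which pushes source and target features toward each other. In a full setup this term would be added to the task loss computed on labeled source data.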

Another key design decision in domain adaptation is the choice of loss functions. Commonly used loss functions include the domain confusion loss, which encourages the feature distributions to be similar, and the task-specific loss, which ensures that the model performs well on the target task. For instance, in a domain adaptation setup for sentiment analysis, the domain confusion loss might be combined with a cross-entropy loss for the sentiment classification task.
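A combined objective of this kind might look like the sketch below. It assumes one common formulation of the domain confusion loss, the cross-entropy between the discriminator's output and a uniform distribution over the two domains; the weighting factor `lam` and the function names are hypothetical choices for this example.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean negative log-likelihood of integer labels under predicted probabilities."""
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))

def domain_confusion_loss(domain_probs):
    """Cross-entropy between the discriminator output and a uniform domain label.

    domain_probs[i] is the predicted probability that example i comes from
    the source domain. The loss is smallest when every prediction is 0.5.
    """
    return -np.mean(0.5 * np.log(domain_probs) + 0.5 * np.log(1 - domain_probs))

def total_loss(task_probs, labels, domain_probs, lam=0.1):
    """Task loss plus weighted domain confusion loss."""
    return cross_entropy(task_probs, labels) + lam * domain_confusion_loss(domain_probs)

# Toy sentiment predictions (negative, positive) for two examples.
task_probs = np.array([[0.7, 0.3], [0.2, 0.8]])
labels = np.array([0, 1])

confused = np.array([0.5, 0.5])     # discriminator cannot tell the domains apart
confident = np.array([0.99, 0.01])  # discriminator separates the domains easily

loss_confused = total_loss(task_probs, labels, confused)
loss_confident = total_loss(task_probs, labels, confident)
```

With identical task predictions, `loss_confused` is lower than `loss_confident`, so minimizing the total rewards features that the discriminator cannot separate. The value of `lam` controls how strongly alignment is traded against task accuracy.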

Recent technical innovations in transfer learning and domain adaptation include the use of self-supervised learning for pre-training, which allows models to learn from unlabeled data. For example, the SimCLR framework uses a contrastive loss to learn representations from augmented views of the same image. Additionally, methods like AdaIN and MixStyle have been developed to improve style transfer and domain generalization, respectively. AdaIN normalizes the channel-wise feature statistics of a content image and re-scales them to match those of a style image, while MixStyle mixes the feature statistics of different training instances so that the model becomes more robust to domain shifts.
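The statistic re-scaling that AdaIN and MixStyle rely on can be sketched in a few lines of NumPy. This is a simplified illustration on single `(C, H, W)` feature maps: `mix_statistics` takes the mixing weight `lam` as an explicit argument, whereas MixStyle samples it randomly and pairs instances within a batch. The function names are introduced here and are not taken from either method's reference code.

```python
import numpy as np

def channel_stats(x, eps=1e-5):
    """Per-channel mean and std over the spatial dims of a (C, H, W) map."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = np.sqrt(x.var(axis=(1, 2), keepdims=True) + eps)
    return mean, std

def adain(content, style):
    """Normalize content features, then re-scale them to the style statistics."""
    c_mean, c_std = channel_stats(content)
    s_mean, s_std = channel_stats(style)
    return s_std * (content - c_mean) / c_std + s_mean

def mix_statistics(x, y, lam):
    """Re-scale x with statistics interpolated between those of x and y."""
    x_mean, x_std = channel_stats(x)
    y_mean, y_std = channel_stats(y)
    mixed_mean = lam * x_mean + (1 - lam) * y_mean
    mixed_std = lam * x_std + (1 - lam) * y_std
    return mixed_std * (x - x_mean) / x_std + mixed_mean

rng = np.random.default_rng(0)
content = rng.normal(loc=0.0, scale=1.0, size=(3, 8, 8))
style = rng.normal(loc=5.0, scale=2.0, size=(3, 8, 8))

stylized = adain(content, style)
half_mixed = mix_statistics(content, style, lam=0.5)
```

`stylized` keeps the spatial structure of `content` but takes on the per-channel mean and standard deviation of `style`. With `lam=1.0`, `mix_statistics` returns its input unchanged, and with `lam=0.0` it reduces to `adain`.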

Advanced Techniques and Variations

Modern variations of transfer learning and domain adaptation include multi-task learning, where a single model is trained to perform multiple related tasks simultaneously, and meta-learning, where the model learns to adapt quickly to new tasks with minimal data. Multi-task learning can be particularly effective when the tasks share common features, as it allows the model to learn more robust and generalizable representations. For example, a model trained to jointly perform object detection and segmentation can benefit from the shared feature extraction layers, leading to improved performance on both tasks.
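The shared-layer idea behind multi-task learning can be sketched as one trunk feeding two heads whose losses are summed. This is a toy illustration only: the two regression heads stand in for tasks such as detection and segmentation, and the weights `weight_a` and `weight_b` are hypothetical hyperparameters.

```python
import numpy as np

def shared_trunk(x, W_shared):
    """Feature extraction layers used by both tasks."""
    return np.maximum(x @ W_shared, 0.0)

def multitask_loss(x, y_a, y_b, W_shared, W_a, W_b, weight_a=1.0, weight_b=1.0):
    """Weighted sum of two task losses computed from the same shared features."""
    h = shared_trunk(x, W_shared)
    loss_a = np.mean((h @ W_a - y_a) ** 2)  # head for task A
    loss_b = np.mean((h @ W_b - y_b) ** 2)  # head for task B
    return weight_a * loss_a + weight_b * loss_b, loss_a, loss_b

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 10))
y_a = rng.normal(size=(16, 1))
y_b = rng.normal(size=(16, 3))

W_shared = rng.normal(size=(10, 32))
W_a = rng.normal(size=(32, 1))
W_b = rng.normal(size=(32, 3))

total, loss_a, loss_b = multitask_loss(x, y_a, y_b, W_shared, W_a, W_b)
```

Because both losses depend on `W_shared`, gradients from both tasks update the trunk, which is how the tasks end up sharing what they learn.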

State-of-the-art implementations often pair large architectures with a two-stage training recipe. For instance, the BERT (Bidirectional Encoder Representations from Transformers) model, widely used in NLP, is pre-trained on a large corpus of unlabeled text and then fine-tuned on specific tasks like sentiment analysis or question answering. The BERT architecture is a transformer-based encoder that captures contextual information from the input text; for fine-tuning, a small task-specific output layer is added on top and the whole model is trained on the labeled task data.

Different approaches to domain adaptation include unsupervised domain adaptation, where no labeled data is available in the target domain, and semi-supervised domain adaptation, where a small amount of labeled target data is available. Unsupervised methods such as DANN (Domain-Adversarial Neural Networks) use adversarial training to align the feature distributions, while MCD (Maximum Classifier Discrepancy), also an unsupervised method, aligns them by using the disagreement between two classifiers on target examples. Semi-supervised methods, such as MME (Minimax Entropy), combine the few labeled target examples with unlabeled ones to improve adaptation. Each approach has its trade-offs: unsupervised methods need no target labels but depend entirely on distribution alignment, which can make training more complex, while semi-supervised methods can achieve better performance but require some labeled target examples.
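The classifier discrepancy that MCD is named for can be sketched as the mean absolute difference between the class probabilities of two classifiers on the same target examples. The snippet below shows only this measure, not the full alternating training procedure, and the function names and toy logits are assumptions made for the example.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def classifier_discrepancy(logits_1, logits_2):
    """Mean absolute difference between two classifiers' class probabilities."""
    return np.mean(np.abs(softmax(logits_1) - softmax(logits_2)))

# Outputs of two classifiers on the same three target examples (3 classes).
logits_1 = np.array([[2.0, 0.1, -1.0], [0.3, 0.2, 0.1], [-1.0, 3.0, 0.0]])
logits_2 = np.array([[1.5, 0.3, -0.5], [-2.0, 0.0, 2.5], [-1.0, 3.0, 0.0]])

agree = classifier_discrepancy(logits_1, logits_1)
disagree = classifier_discrepancy(logits_1, logits_2)
```

A large value flags target examples on which the two classifiers disagree, which is read as a sign that those examples lie far from the source data. The feature extractor is then trained to reduce this disagreement.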

Recent research developments in this area include the use of generative models, such as GANs (Generative Adversarial Networks), for domain adaptation. GANs can generate synthetic data that mimics the target domain, allowing the model to learn from a more diverse set of examples. For example, the StarGAN framework performs image-to-image translation across multiple attribute domains, generating face images with different attributes such as hair color or facial expression, which can be used to augment the training data for face recognition tasks.

Practical Applications and Use Cases

Transfer learning and domain adaptation find practical applications in a wide range of fields, from healthcare to autonomous vehicles. In medical imaging, for instance, transfer learning is used to adapt models trained on large, publicly available datasets to specific clinical tasks. For example, a model pre-trained on the ChestX-ray14 dataset can be fine-tuned to detect specific types of lung diseases in a hospital's patient data. This approach not only reduces the need for large, labeled datasets but also improves the model's performance on the specific task.

In the automotive industry, domain adaptation can be used to improve the performance of self-driving cars in different environments. A model trained on urban driving data can be adapted to perform well in rural or highway settings. For example, a production system such as the Waymo Driver has to cope with variability in road conditions and traffic patterns across regions, which is the kind of domain shift these techniques are designed to address.

These techniques suit such applications because they use the large amounts of data available in one domain to improve performance in another where data is scarce. This is particularly important in fields like healthcare, where obtaining large labeled datasets is difficult because of privacy and ethical concerns. In practice, models adapted this way often reach higher accuracy and converge faster than models trained from scratch. However, success depends on the quality of the pre-trained model and on the similarity between the source and target domains; when the two are too dissimilar, transfer can even hurt performance (negative transfer).

Technical Challenges and Limitations

Despite their benefits, transfer learning and domain adaptation face several technical challenges and limitations. One major challenge is the selection of an appropriate pre-trained model, as the choice can significantly impact the performance on the target task. The pre-trained model must be sufficiently general and relevant to the target task, which can be difficult to determine without extensive experimentation. Additionally, the alignment of feature distributions in domain adaptation can be challenging, especially when the source and target domains have significant differences.

Computational requirements are another limitation, as fine-tuning large pre-trained models can be resource-intensive. This is particularly true for deep neural networks with millions of parameters, which require substantial GPU resources and training time. Scalability is also a concern, as the effectiveness of transfer learning and domain adaptation can diminish as the number of tasks or domains increases. Managing and optimizing the training process for multiple tasks or domains can be complex and requires careful tuning of hyperparameters and training schedules.

Research directions addressing these challenges include the development of more efficient pre-training methods, such as self-supervised learning, and the exploration of lightweight and modular architectures that can be easily adapted to new tasks. Additionally, there is ongoing work on developing more robust and scalable domain adaptation techniques, such as those that can handle multiple domains or tasks simultaneously. These efforts aim to make transfer learning and domain adaptation more accessible and effective for a wider range of applications.

Future Developments and Research Directions

Emerging trends in transfer learning and domain adaptation include the integration of reinforcement learning and the use of more sophisticated generative models. Reinforcement learning can be used to dynamically adapt models to new tasks or environments, allowing for more flexible and adaptive systems. For example, a model trained to play a video game can be adapted to a new level or game environment using reinforcement learning, improving its ability to generalize and perform well in unseen situations.

Active research directions also include the development of more interpretable and explainable models, which can provide insights into how the model is adapting and what features are most important for the target task. This is particularly important in fields like healthcare, where understanding the decision-making process of the model is crucial for building trust and ensuring safety. Additionally, there is growing interest in federated learning, where models are trained collaboratively across multiple devices or organizations, allowing for more secure and privacy-preserving transfer learning and domain adaptation.

Potential breakthroughs on the horizon include the development of universal models that can be adapted to a wide range of tasks and domains with minimal fine-tuning. These models would have the ability to learn general and transferable representations, enabling them to perform well on a variety of tasks without the need for extensive retraining. Industry and academic perspectives on these developments are generally optimistic, with a growing recognition of the importance of transfer learning and domain adaptation in advancing the state of the art in AI and machine learning.