Introduction and Context

Transfer learning and domain adaptation are pivotal techniques in machine learning, particularly in deep learning. Transfer learning takes a model pre-trained on a large dataset and fine-tunes it for a different but related task. The approach rests on the idea that features learned for one task can be useful for another, even if the tasks are not identical. Domain adaptation is a subset of transfer learning in which the task typically stays the same but the data changes: a model trained on a source domain is adapted to perform well on a target domain with a different data distribution.

The importance of these techniques lies in their ability to address the challenges of data scarcity and computational efficiency. In many real-world applications, acquiring large, labeled datasets is expensive and time-consuming. Transfer learning and domain adaptation allow models to generalize better with fewer training examples, making them more practical and cost-effective. These techniques have been developed over the past few decades. Key milestones include the release of ImageNet in 2009, which provided a large-scale labeled dataset for pre-training, the success of deep CNNs on the ImageNet challenge in 2012, which made pre-trained networks a standard starting point, and the development of various domain adaptation methods in the 2010s. The primary problem they solve is the need to adapt models to new, unseen data or tasks without retraining from scratch.

Core Concepts and Fundamentals

The fundamental principle behind transfer learning is the reuse of knowledge. When a model is trained on a large dataset, it learns a set of features that are generally useful for a wide range of tasks. For example, a convolutional neural network (CNN) trained on ImageNet learns to recognize edges, textures, and shapes, which are valuable for many computer vision tasks. By fine-tuning this pre-trained model on a smaller, task-specific dataset, the model can quickly adapt to the new task while retaining the generalizable features learned from the large dataset.

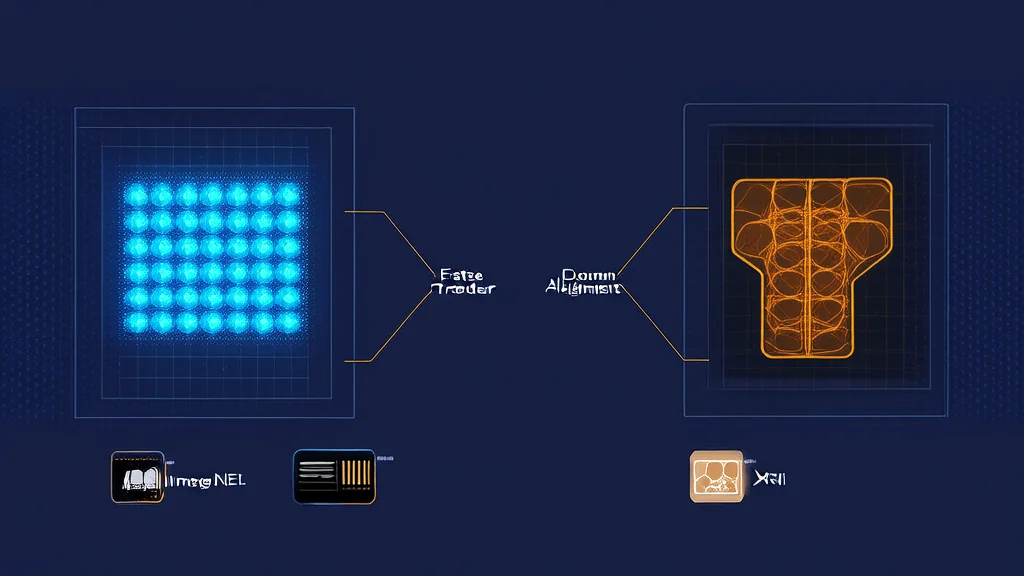

Domain adaptation, on the other hand, addresses the issue of distribution shift. When the source and target domains have different data distributions, a model trained on the source domain may perform poorly on the target domain. Domain adaptation techniques aim to align the feature distributions of the source and target domains, making the model more robust to these differences. Key mathematical concepts include the use of distance metrics such as Maximum Mean Discrepancy (MMD) to measure the difference between distributions, and adversarial training to learn domain-invariant features.

Core components in transfer learning include the pre-trained model, the fine-tuning process, and the task-specific dataset. The pre-trained model serves as a starting point, and the fine-tuning process adjusts the model's parameters to fit the new task. In domain adaptation, additional components such as domain classifiers and adversarial networks are used to ensure that the learned features are invariant to the domain shift.

Transfer learning and domain adaptation differ from traditional supervised learning in that they do not require a large, labeled dataset for the target task. Instead, they leverage the knowledge from a related task or domain, making them more efficient and effective in scenarios with limited data. An analogy to understand this is to think of transfer learning as a student who has already studied a broad subject (e.g., mathematics) and then specializes in a specific area (e.g., calculus) with less effort, while domain adaptation is like a student who adapts their study methods to perform well in a new school with different teaching styles.

Technical Architecture and Mechanics

Transfer learning typically involves a pre-trained model, often a CNN or a transformer, that has been trained on a large, labeled dataset. The architecture of the pre-trained model is designed to extract high-level features from the input data. For instance, in a CNN, the convolutional layers learn to detect edges, textures, and shapes, while the fully connected layers at the end of the network make the final predictions. In a transformer model, the self-attention mechanism calculates the relevance of each token in the input sequence to every other token, allowing the model to capture long-range dependencies and contextual information.
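The self-attention step described above can be sketched in a few lines. This is a minimal, dependency-free illustration of single-head scaled dot-product attention, not the implementation of any particular model: the function names and the toy embeddings are assumptions, and a real transformer would first apply learned query, key, and value projections and run many heads in parallel.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention without masking.

    queries, keys: lists of n vectors of length d
    values:        list of n vectors of length d_v
    Returns (outputs, weights); weights[i][j] is how strongly
    token i attends to token j.
    """
    d = len(keys[0])
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[c] for w, v in zip(row, values)) for c in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights

# Three toy token embeddings (hypothetical); the same vectors are used as
# queries, keys, and values because the learned projections are omitted.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one, so every output vector is a weighted average of all value vectors. This is what lets a token draw on context from anywhere in the sequence.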

The fine-tuning process in transfer learning involves two main steps: freezing and unfreezing. Initially, the weights of the pre-trained model are frozen, and only the final layers (e.g., the fully connected layers in a CNN) are replaced or modified to fit the new task. The model is then trained on the task-specific dataset, adjusting the weights of the new layers while keeping the pre-trained layers fixed. Once the new layers have converged, the pre-trained layers can be unfrozen, and the entire model is fine-tuned with a lower learning rate to further adapt the features to the new task.
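The freeze-then-unfreeze schedule can be illustrated with a deliberately tiny model. The sketch below is an assumption-laden toy, not a recipe for a real network: the "backbone" is a single affine feature (`w_b`, `b_b`), the "head" is one weight (`w_h`), and the target task, learning rates, and step counts are all hypothetical. In a deep learning framework the same idea is usually expressed by excluding frozen parameters from the optimizer.

```python
def predict(p, x):
    """Backbone (w_b, b_b) extracts a feature; head (w_h) maps it to the output."""
    return p["w_h"] * (p["w_b"] * x + p["b_b"])

def mse(p, data):
    """Mean squared error of the model on (x, y) pairs."""
    return sum((predict(p, x) - y) ** 2 for x, y in data) / len(data)

def train(p, data, trainable, lr, steps):
    """Full-batch gradient descent that updates only the parameters in `trainable`."""
    n = len(data)
    for _ in range(steps):
        grads = {"w_h": 0.0, "w_b": 0.0, "b_b": 0.0}
        for x, y in data:
            feature = p["w_b"] * x + p["b_b"]
            err = 2.0 * (p["w_h"] * feature - y) / n
            grads["w_h"] += err * feature
            grads["w_b"] += err * p["w_h"] * x
            grads["b_b"] += err * p["w_h"]
        for name in trainable:  # frozen parameters are simply skipped
            p[name] -= lr * grads[name]
    return p

# Hypothetical target task: y = 2x. The "pre-trained" backbone is close but not ideal.
data = [(x, 2.0 * x) for x in (-1.0, -0.5, 0.5, 1.0)]
params = {"w_b": 0.8, "b_b": 0.3, "w_h": 0.0}  # the new head starts from scratch

# Phase 1: backbone frozen, train only the new head.
train(params, data, trainable=["w_h"], lr=0.1, steps=200)
backbone_after_phase1 = (params["w_b"], params["b_b"])
loss_phase1 = mse(params, data)

# Phase 2: unfreeze everything and continue with a lower learning rate.
train(params, data, trainable=["w_h", "w_b", "b_b"], lr=0.01, steps=500)
loss_phase2 = mse(params, data)
```

In this toy, the head alone cannot fit the target because the frozen backbone carries a bias that does not suit the new task. Phase 1 therefore plateaus at a nonzero loss, and phase 2 removes the remaining error by gently adjusting the backbone.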

Domain adaptation often involves more complex architectures. One common approach adds a domain classifier alongside the main model. The domain classifier is trained to distinguish source samples from target samples, while the feature extractor of the main model is trained both to minimize the classification error on the labeled source data and to fool the domain classifier, so that it learns domain-invariant features. This adversarial setup resembles the training of Generative Adversarial Networks (GANs); the best-known instance is the Domain-Adversarial Neural Network (DANN).
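DANN implements the "fool the domain classifier" objective with a gradient reversal layer: it passes features through unchanged in the forward pass and flips the sign of the domain-loss gradient in the backward pass. The sketch below shows only that mechanism on a single sample. The logistic domain classifier, the values of `lam` and `lr`, and the simplification of updating the features directly (instead of the feature extractor's weights) are assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def domain_loss_and_grads(features, v, domain_label):
    """Logistic domain classifier with weights v.

    Returns the binary cross-entropy loss, dLoss/dfeatures, and dLoss/dv.
    """
    p = sigmoid(sum(vi * fi for vi, fi in zip(v, features)))
    loss = -(domain_label * math.log(p) + (1.0 - domain_label) * math.log(1.0 - p))
    grad_features = [(p - domain_label) * vi for vi in v]
    grad_v = [(p - domain_label) * fi for fi in features]
    return loss, grad_features, grad_v

class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam):
        self.lam = lam

    def forward(self, features):
        return list(features)

    def backward(self, grad):
        return [-self.lam * g for g in grad]

# Hypothetical single target-domain sample (domain label 1).
features, v, domain_label = [0.5, -1.0], [1.0, 2.0], 1.0
lam, lr = 0.5, 0.1
grl = GradientReversal(lam)

loss, grad_features, grad_v = domain_loss_and_grads(grl.forward(features), v, domain_label)
reversed_grad = grl.backward(grad_features)

# The domain classifier descends its own loss ...
new_v = [vi - lr * g for vi, g in zip(v, grad_v)]
# ... while the features descend the reversed gradient, i.e. ascend the domain loss.
new_features = [f - lr * g for f, g in zip(features, reversed_grad)]

loss_after_classifier_step, _, _ = domain_loss_and_grads(features, new_v, domain_label)
loss_after_feature_step, _, _ = domain_loss_and_grads(new_features, v, domain_label)
```

The classifier step lowers the domain loss while the feature step raises it, which is the two-player dynamic that pushes the features toward domain invariance. In a full model the reversed gradient would continue backward into the feature extractor's weights, and the task loss on labeled source data would be added to the feature extractor's update.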

Another key design decision in domain adaptation is the choice of distance metric to measure the discrepancy between the source and target distributions. MMD is a popular choice, as it provides a principled, kernel-based way to measure the difference between two distributions. The squared MMD is the squared distance between the mean embeddings of the source and target feature distributions in a reproducing kernel Hilbert space (RKHS). It can be estimated from samples using only kernel evaluations, and it is added to the training loss and minimized to align the two distributions.
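As a concrete illustration, the squared MMD between two samples can be estimated as the mean kernel value within the source, plus the mean kernel value within the target, minus twice the mean kernel value across the two. The sketch below uses the simple biased estimator with a Gaussian (RBF) kernel. The kernel choice, the `bandwidth` value, and the toy feature vectors are assumptions, and practical systems often combine several bandwidths.

```python
import math

def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian kernel between two feature vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))

def mmd_squared(source, target, bandwidth=1.0):
    """Biased estimate of squared MMD between two samples of feature vectors."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, bandwidth) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (
        mean_kernel(source, source)
        + mean_kernel(target, target)
        - 2.0 * mean_kernel(source, target)
    )

# Hypothetical feature vectors: one target set is slightly shifted, one strongly.
source = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
near_target = [[x + 0.1, y + 0.1] for x, y in source]
far_target = [[x + 3.0, y + 3.0] for x, y in source]

mmd_same = mmd_squared(source, source)
mmd_near = mmd_squared(source, near_target)
mmd_far = mmd_squared(source, far_target)
```

The estimate is zero when the two samples coincide and grows as the target features drift away from the source features. That monotone behavior is what makes it usable as a penalty term in the training loss.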

More recent work combines domain adaptation with self-supervised learning and focuses on the unsupervised setting. Self-supervised learning trains the model on pretext tasks that do not require labels, such as predicting the rotation of an image or solving jigsaw puzzles. This pre-training step helps the model learn generalizable features that are useful for both the source and target domains. Unsupervised domain adaptation does not require any labeled data in the target domain, making it more practical in real-world scenarios. Image-to-image translation models such as CycleGAN and StarGAN have been used to render labeled source images in the style of the target domain, and the translated images can then be used to train the model.
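The rotation pretext task shows how labels can be produced without any annotation: each image is rotated by a multiple of 90 degrees, and the rotation index itself becomes the training label. The sketch below only generates the (image, label) pairs. The function names and the 2x2 toy "image" are assumptions, and the classifier that would be trained on these pairs is omitted.

```python
def rotate90(image):
    """Rotate a 2-D grid (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def rotation_pretext_examples(image):
    """Return (rotated_image, label) pairs; label k means k * 90 degrees clockwise."""
    examples = []
    current = [list(row) for row in image]
    for k in range(4):
        examples.append((current, k))
        current = rotate90(current)
    return examples

# Toy 2x2 "image" (hypothetical); real inputs would be pixel arrays.
image = [[1, 2],
         [3, 4]]
examples = rotation_pretext_examples(image)
```

Because the labels come from the transformation rather than from annotators, the same procedure can be applied to unlabeled images from both the source and the target domain.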

Advanced Techniques and Variations

Modern variations of transfer learning and domain adaptation have been developed to address specific challenges and improve performance. One such variation is multi-source domain adaptation, where labeled data from several source domains is combined to adapt the model to a target domain. This is particularly useful when the available source domains are diverse and no single one matches the target well. A related setting, multi-target domain adaptation, adapts one model to several target domains simultaneously. Another variation is conditional domain adaptation, where the alignment is conditioned on additional information, such as the class label or class predictions, so that feature distributions are aligned per class and not only globally.

Widely used examples of transfer learning and domain adaptation include T5 (Text-to-Text Transfer Transformer) and DANN. T5 is a transformer-based model that has been pre-trained on a large corpus of text and can be fine-tuned for a wide range of natural language processing tasks by casting each task as text-to-text generation. DANN is a domain adaptation method that uses adversarial training to learn domain-invariant features. Recent research has also focused on improving the robustness of these models to domain shifts, for example by using meta-learning to adapt the model to new domains with few examples.

Different approaches to domain adaptation have their trade-offs. For example, adversarial training is effective in learning domain-invariant features but can be computationally expensive and difficult to train. Distance-based methods, such as MMD, are simpler and more stable but may not always achieve the same level of performance as adversarial methods. Recent research has also explored the use of contrastive learning, where the model is trained to maximize the similarity between positive pairs (e.g., samples from the same class) and minimize the similarity between negative pairs (e.g., samples from different classes). This approach has shown promising results in both transfer learning and domain adaptation.
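The contrastive objective mentioned above can be made concrete with an InfoNCE-style loss for a single anchor. The loss is low when the anchor is more similar to its positive than to any negative. This is a sketch under stated assumptions: cosine similarity, a `temperature` of 0.1, and the toy embeddings are illustrative choices, and published methods differ in how they form pairs and batches.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor: -log softmax score of the positive."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denominator - logits[0]

# Hypothetical embeddings: the anchor's true positive points in nearly the same direction.
anchor = [1.0, 0.0]
aligned = [0.9, 0.1]
orthogonal = [0.0, 1.0]
opposite = [-1.0, 0.0]

# Correct pairing: the aligned vector is treated as the positive.
good_loss = info_nce(anchor, aligned, [orthogonal, opposite])
# Wrong pairing: the orthogonal vector is treated as the positive.
bad_loss = info_nce(anchor, orthogonal, [aligned, opposite])
```

Minimizing this loss over many anchors pulls same-class (or same-instance) embeddings together and pushes the others apart. This is the mechanism behind the representation quality reported for contrastive pre-training.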

Practical Applications and Use Cases

Transfer learning and domain adaptation are widely used in real-world applications, including computer vision, natural language processing, and speech recognition. In computer vision, pre-trained models like ResNet and VGG are commonly used as backbones for tasks such as object detection, image segmentation, and image classification. Another example is OpenAI's CLIP (Contrastive Language-Image Pre-training), which is pre-trained to align visual and textual representations; the resulting representations transfer to a wide range of tasks in zero-shot and few-shot settings.

In natural language processing, transformer-based models like BERT and RoBERTa are pre-trained on large corpora and fine-tuned for tasks such as sentiment analysis, named entity recognition, and question answering. Google's BERT, for instance, achieved state-of-the-art results on a range of NLP benchmarks at the time of its release after fine-tuning on comparatively small task-specific datasets. In speech recognition, models like Wav2Vec 2.0, developed by Facebook AI, use self-supervised learning to pre-train on large amounts of unlabeled audio data and are then fine-tuned for tasks such as automatic speech recognition (ASR).

These techniques suit these applications because they enable models to generalize well with limited labeled data, reducing the need for extensive manual annotation. In practice, transfer learning and domain adaptation can significantly improve the accuracy and robustness of models, especially in scenarios with data scarcity or domain shifts. In medical imaging, for example, models pre-trained on large, publicly available datasets have been adapted to specific medical conditions for which only small labeled datasets exist, with reported gains in diagnostic accuracy.

Technical Challenges and Limitations

Despite their advantages, transfer learning and domain adaptation face several technical challenges and limitations. One of the main challenges is the selection of an appropriate pre-trained model. The choice of the pre-trained model depends on the similarity between the source and target tasks, and a mismatch can lead to suboptimal performance. Additionally, the fine-tuning process can be sensitive to hyperparameters, such as the learning rate and the number of layers to unfreeze, making it challenging to find the optimal configuration.

Computational requirements are another significant challenge. Pre-training large models on massive datasets requires substantial computational resources, and fine-tuning these models can also be resource-intensive, especially for large-scale applications. A separate difficulty arises when the target domain has a vastly different distribution from the source domain. In such cases, the features learned on the source may not carry over, and the model may struggle to adapt effectively, leading to poor performance.

Research directions addressing these challenges include the development of more efficient pre-training methods, such as sparse training and pruning, which reduce the computational overhead. Additionally, techniques like meta-learning and few-shot learning are being explored to improve the model's ability to adapt to new domains with limited data. Another active area of research is the development of more robust distance metrics and loss functions that can better handle domain shifts and improve the alignment of feature distributions.

Future Developments and Research Directions

Emerging trends in transfer learning and domain adaptation include the integration of multimodal data, the use of self-supervised and unsupervised learning, and the development of more interpretable and explainable models. Multimodal learning, which combines data from multiple sources (e.g., images, text, and audio), is becoming increasingly important, and transfer learning techniques are being extended to handle these complex data types. For example, OpenAI's CLIP uses multimodal pre-training to align visual and textual representations, and DALL-E uses paired image-text data to generate images from text descriptions, enabling a wide range of cross-modal tasks.

Self-supervised and unsupervised learning are also gaining traction, as they offer a way to pre-train models on large, unlabeled datasets, reducing the reliance on labeled data. Techniques like contrastive learning and generative modeling are being explored to improve the quality of the learned representations. Additionally, there is a growing interest in developing more interpretable and explainable models, as understanding how the model makes decisions is crucial for trust and reliability in real-world applications.

Potential breakthroughs on the horizon include the development of more efficient and scalable pre-training methods, the integration of domain adaptation into reinforcement learning, and the use of transfer learning for lifelong learning, where the model continuously learns and adapts to new tasks over time. Industry and academic perspectives are converging on the need for more robust and flexible models that can handle a wide range of tasks and domains, and ongoing research is expected to drive significant advancements in these areas.