Introduction and Context

Transfer Learning and Domain Adaptation are key techniques in the field of machine learning that enable models trained on one task to be effectively applied to a different but related task. Transfer Learning involves taking a pre-trained model, which has learned useful features from a large dataset, and fine-tuning it for a new, often smaller, dataset. This approach leverages the knowledge gained from the initial training, reducing the need for extensive data and computational resources in the new task. Domain Adaptation, a specific type of transfer learning, focuses on adapting a model to perform well in a new domain where the data distribution differs from the original training data.

The importance of these techniques lies in their ability to address data scarcity and computational cost, both of which are common in real-world applications. The concept of transfer learning dates back to at least the 1990s, with early work on transferring knowledge between neural networks and on multitask learning by researchers such as Lorien Pratt, Sebastian Thrun, and Rich Caruana. It gained significant traction with the advent of deep learning and the availability of large pre-trained models. Key milestones include pre-trained models like VGG, ResNet, and BERT, which have become foundational in computer vision and natural language processing. These techniques help models generalize to new tasks or domains, making them more versatile and efficient.

Core Concepts and Fundamentals

At its core, Transfer Learning is based on the idea that a model trained on a large dataset can learn generalizable features that are useful for other tasks. For example, a convolutional neural network (CNN) trained on a large image dataset like ImageNet can learn to recognize edges, textures, and shapes, which are valuable for other image-related tasks. The fundamental principle is to leverage this pre-learned knowledge to improve performance on a new task with limited data.

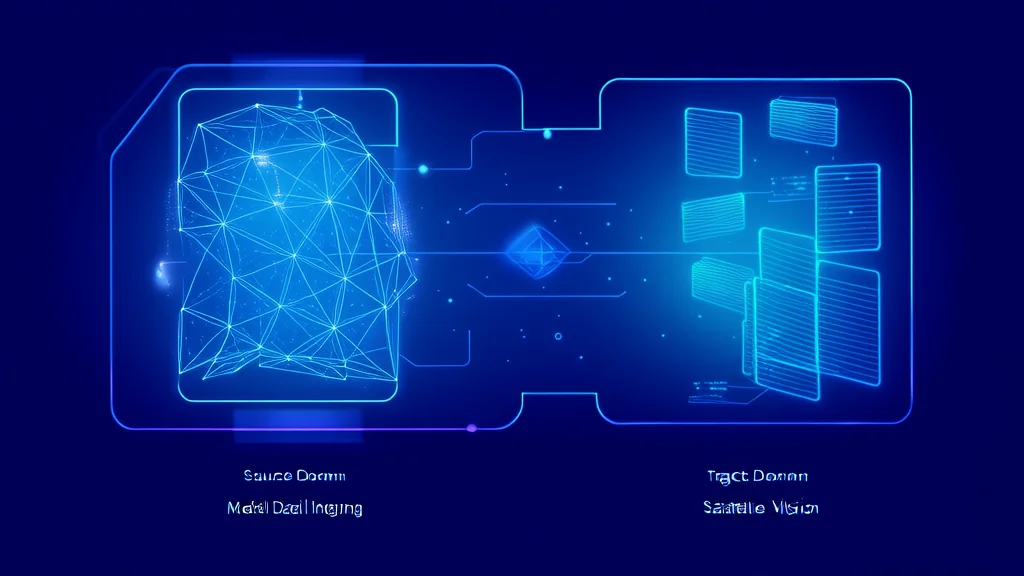

Domain Adaptation, a subset of transfer learning, specifically addresses the issue of domain shift, where the distribution of the input data in the target domain differs from the source domain. The goal is to adapt the model so that it performs well in the new domain without requiring extensive retraining. Key mathematical concepts include feature alignment, where the distributions of the source and target domains are made more similar, and domain-invariant feature extraction, which aims to learn features that are robust to domain changes.
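
As a rough illustration of feature alignment, the sketch below measures how far apart the source and target feature distributions are by comparing their mean feature vectors. This is a linear-kernel form of Maximum Mean Discrepancy (MMD), which the text above does not name; it is used here as one common alignment criterion. The function names and toy feature values are assumptions made for the example.

```python
def mean_vector(batch):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(batch)
    return [sum(row[j] for row in batch) / n for j in range(len(batch[0]))]


def linear_mmd(source, target):
    """Squared Euclidean distance between source and target feature means."""
    mu_s = mean_vector(source)
    mu_t = mean_vector(target)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


# Toy features (hypothetical): two samples per domain, two dimensions each.
source_feats = [[1.0, 2.0], [3.0, 4.0]]    # mean (2, 3)
shifted_feats = [[2.0, 2.0], [4.0, 4.0]]   # mean (3, 3): shifted domain
aligned_feats = [[3.0, 4.0], [1.0, 2.0]]   # mean (2, 3): same mean as source

gap_shifted = linear_mmd(source_feats, shifted_feats)
gap_aligned = linear_mmd(source_feats, aligned_feats)
```

An alignment method would add a term like this to the training loss so that the feature extractor is penalized when the two domains drift apart. Matching means alone is a weak criterion; kernel-based variants compare higher-order statistics as well.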

Core components of these techniques include the pre-trained model, the target task, and the adaptation process. The pre-trained model acts as a starting point, providing a rich set of features. The target task is the new problem we want to solve, and the adaptation process involves fine-tuning the model to fit the new task or domain. This contrasts with the traditional setting, in which a model is trained from scratch on a single dataset and is assumed to be tested on data from the same distribution. Transfer can be combined with both supervised and unsupervised learning: fine-tuning usually relies on labeled target data, whereas unsupervised domain adaptation works with unlabeled target data.

Analogies can help illustrate these concepts. Imagine you are a chef who has mastered cooking Italian cuisine. If you need to start cooking French cuisine, you don't need to relearn everything from scratch; instead, you can use your existing culinary skills and adapt them to the new style. Similarly, a pre-trained model can be adapted to a new task by leveraging its learned features and fine-tuning them for the new context.

Technical Architecture and Mechanics

Transfer Learning and Domain Adaptation involve several steps, each with its own technical intricacies. The process typically starts with selecting a pre-trained model, which is then fine-tuned for the new task. For instance, in a transformer model like BERT, the attention mechanism calculates the relevance of different parts of the input sequence, allowing the model to focus on important features. This mechanism is crucial for understanding the context and meaning of the input data.
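
To make the attention computation concrete, here is a minimal single-head sketch of scaled dot-product attention in plain Python. It assumes the queries, keys, and values are the raw token vectors themselves; in BERT they are produced by learned linear projections, and multiple heads run in parallel. The toy token vectors are invented for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for lists of vectors (one head, no mask)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every key to this query, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the relevance-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "token" vectors (hypothetical) attending to each other.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how much one token draws on every other token when building its contextual representation.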

The architecture of a transfer learning system can be described as follows:

- Pre-training: A model is trained on a large, diverse dataset, such as ImageNet for images or Wikipedia for text. This step is computationally intensive and requires significant resources.

- Feature Extraction: The pre-trained model is used to extract features from the new dataset, typically with its weights kept frozen. In the case of CNNs, the early convolutional layers capture low-level features and the deeper layers capture high-level features, while in transformers, the self-attention mechanism captures contextual information.

- Fine-tuning: A new classifier or regressor is trained on top of these features for the target task. Some or all of the parameters of the pre-trained model are also updated, usually with a small learning rate, to better fit the new data. This step is far less computationally expensive than pre-training.

- Evaluation: The fine-tuned model is evaluated on a validation set to ensure it generalizes well to unseen data.
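
The steps above can be sketched end to end on a toy problem. The example below is a simplified illustration, not a real pipeline: a fixed linear map with ReLU stands in for the pre-trained backbone (`FROZEN_WEIGHTS` is hypothetical), only a new logistic-regression head is trained, and the result is checked on a small held-out set. It covers the frozen-backbone variant; full fine-tuning would also update the backbone weights.

```python
import math

# Stand-in for a pre-trained backbone: fixed weights that are never updated.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen feature extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def predict_proba(head, feats):
    """Logistic-regression head on top of the extracted features."""
    z = head["b"] + sum(w * f for w, f in zip(head["w"], feats))
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only the new head with plain gradient descent on log loss."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            err = predict_proba(head, feats) - y  # d(log loss)/d(logit)
            head["w"] = [w - lr * err * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * err
    return head


def accuracy(head, data):
    """Fraction of examples whose thresholded prediction matches the label."""
    hits = sum(
        (predict_proba(head, extract_features(x)) >= 0.5) == bool(y)
        for x, y in data
    )
    return hits / len(data)


# Toy target task (hypothetical): label is 1 when the first input is larger.
train_set = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
val_set = [([4.0, 1.0], 1), ([0.5, 2.5], 0)]

head = train_head(train_set)
val_accuracy = accuracy(head, val_set)
```

Because the backbone is frozen, only three numbers (two weights and a bias) are learned, which is why four training examples are enough here.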

In Domain Adaptation, additional steps are taken to align the source and target domain distributions. Two commonly used techniques are adversarial domain adaptation, where a discriminator is trained to distinguish source features from target features while the feature extractor is trained to fool it, and domain-invariant feature learning, where the model is encouraged to learn features that are consistent across domains. For example, in the DANN (Domain-Adversarial Neural Network) architecture, a gradient reversal layer sits between the feature extractor and the domain classifier: it leaves activations unchanged in the forward pass and flips the sign of the gradient in the backward pass, which pushes the feature extractor toward domain-invariant features.
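
The gradient reversal idea can be shown without any deep learning framework. The class below is a hand-written sketch with explicit `forward` and `backward` methods; in an autograd framework the same behavior would be implemented as a custom differentiable operation. The class name and the `lam` scaling parameter are choices made for this example.

```python
class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, features):
        # Features pass through unchanged to the domain classifier.
        return list(features)

    def backward(self, grad_from_domain_classifier):
        # The feature extractor receives the negated gradient, so it is
        # updated to make the domain classifier's job harder.
        return [-self.lam * g for g in grad_from_domain_classifier]


grl = GradientReversal(lam=2.0)
passed_through = grl.forward([0.3, -1.2])
reversed_grad = grl.backward([0.5, -1.0])
```

The domain classifier itself still receives ordinary gradients, so it keeps improving at telling domains apart while the feature extractor learns to remove the cues it relies on.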

Key design decisions in these architectures include the choice of pre-trained model, the amount of fine-tuning, and the specific adaptation techniques. For instance, using a larger pre-trained model like BERT can provide more powerful features, but it also increases the computational requirements. Fine-tuning all layers of the model can lead to overfitting, especially with small datasets, while only fine-tuning the top layers can limit the model's ability to adapt to the new task. Recent innovations, such as the use of contrastive learning for domain-invariant feature extraction, have shown promising results in improving the robustness of transferred models.

Advanced Techniques and Variations

Modern variations of Transfer Learning and Domain Adaptation include a range of techniques designed to improve performance and robustness. One such technique is Few-Shot Learning, where the model is adapted to a new task with very few examples. This is particularly useful in scenarios where data is scarce. Another advanced technique is Meta-Learning, where the model learns to adapt quickly to new tasks by learning an optimal initialization or learning rate. For example, the MAML (Model-Agnostic Meta-Learning) algorithm trains a model to quickly adapt to new tasks with just a few gradient updates.
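
A toy version of the MAML update can be worked out by hand when each task has a simple quadratic loss. In the sketch below, every task is "move a scalar `theta` close to a task-specific target", the inner loop takes one gradient step, and the meta-gradient is obtained with the chain rule through that step. The task family, step sizes, and the reuse of the same loss for adaptation and evaluation are simplifying assumptions for the example.

```python
def loss_grad(theta, target):
    """Gradient of the per-task loss (theta - target)^2."""
    return 2.0 * (theta - target)


def inner_adapt(theta, target, alpha):
    """One gradient step on a single task (the fast adaptation)."""
    return theta - alpha * loss_grad(theta, target)


def maml_meta_gradient(theta, target, alpha):
    """Gradient of the post-adaptation loss with respect to the initial theta.

    Chain rule: dL(theta')/dtheta = L'(theta') * dtheta'/dtheta,
    and for this quadratic loss dtheta'/dtheta = 1 - 2 * alpha.
    """
    adapted = inner_adapt(theta, target, alpha)
    return loss_grad(adapted, target) * (1.0 - 2.0 * alpha)


def meta_train(task_targets, alpha=0.1, beta=0.1, steps=500):
    """Learn an initialization that adapts well to every task in the family."""
    theta = 0.0
    for _ in range(steps):
        grads = [maml_meta_gradient(theta, t, alpha) for t in task_targets]
        theta -= beta * sum(grads) / len(grads)
    return theta


task_targets = [1.0, 3.0, 5.0]
meta_theta = meta_train(task_targets)
adapted_theta = inner_adapt(meta_theta, 5.0, alpha=0.1)
```

For this task family the learned initialization settles at the center of the task targets, from which a single inner step moves toward any individual task.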

State-of-the-art implementations often combine multiple techniques. For instance, the T5 (Text-to-Text Transfer Transformer) model uses a unified text-to-text framework to handle a wide range of NLP tasks, including translation, summarization, and question answering. By framing all tasks as text generation, T5 can leverage the same architecture and pre-training method, making it highly versatile.
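
The text-to-text framing amounts to turning every example into an input string and a target string, with a short task prefix telling the model what to do. The helper below is a hypothetical formatting function, not part of any T5 library; the example sentences are made up.

```python
def to_text_to_text(task_prefix, source_text, target_text):
    """Cast one example into the (input string, target string) form."""
    return {"input": f"{task_prefix}: {source_text}", "target": target_text}


examples = [
    to_text_to_text(
        "translate English to German", "That is good.", "Das ist gut."
    ),
    to_text_to_text(
        "summarize",
        "The storm closed roads across the county on Monday.",
        "Storm closes county roads.",
    ),
]
```

Because both examples share the same shape, one model with one loss function can be trained on a mixture of tasks.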

Different approaches to Domain Adaptation include Unsupervised Domain Adaptation (UDA), where the target domain has no labeled data, and Semi-Supervised Domain Adaptation (SSDA), where a small amount of labeled data is available in the target domain. UDA techniques such as DANN and ADDA (Adversarial Discriminative Domain Adaptation) use adversarial training to align the source and target domain distributions. MCD (Maximum Classifier Discrepancy), also originally proposed for the unsupervised setting, instead uses the disagreement between two classifiers to find target samples that lie far from the source data and trains the feature extractor to reduce that disagreement. SSDA techniques, such as MME (Minimax Entropy), additionally exploit the few labeled target examples.
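
The classifier-disagreement signal that MCD relies on can be sketched as the mean absolute difference between the class probabilities predicted by two classifiers for the same input. The function names and probability values below are illustrative assumptions.

```python
def classifier_discrepancy(probs_a, probs_b):
    """Mean absolute difference between two class-probability vectors."""
    return sum(abs(a - b) for a, b in zip(probs_a, probs_b)) / len(probs_a)


def batch_discrepancy(batch_a, batch_b):
    """Average discrepancy over a batch of target-domain predictions."""
    pairs = list(zip(batch_a, batch_b))
    return sum(classifier_discrepancy(a, b) for a, b in pairs) / len(pairs)


# Hypothetical predictions from two classifiers on two target samples.
clf1_probs = [[0.9, 0.1], [0.9, 0.1]]
clf2_probs = [[0.9, 0.1], [0.1, 0.9]]

agree = classifier_discrepancy(clf1_probs[0], clf2_probs[0])
disagree = classifier_discrepancy(clf1_probs[1], clf2_probs[1])
batch_gap = batch_discrepancy(clf1_probs, clf2_probs)
```

A high value flags a target sample on which the classifiers disagree; during training the classifiers are updated to increase this quantity on target data and the feature extractor is updated to decrease it.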

Recent research developments have focused on improving the efficiency and effectiveness of these techniques. For example, the use of self-supervised learning, where the model is trained on pretext tasks to learn generalizable features, has shown promise in reducing the need for labeled data. Additionally, the integration of multi-modal data, such as combining text and images, has led to more robust and versatile models. For instance, the CLIP (Contrastive Language-Image Pre-training) model uses a contrastive loss to align text and image representations, enabling it to perform well on a variety of cross-modal tasks.
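
A contrastive objective of the kind CLIP uses can be sketched in a few lines: embeddings are normalized, every image is compared with every text in the batch, and a cross-entropy loss in both directions rewards the matching pairs on the diagonal. This is a simplified illustration; the fixed `temperature` value and the two-dimensional toy embeddings are assumptions, and the real model learns its temperature and uses large encoders.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target_index):
    """Cross-entropy of one row of logits against the correct index."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[target_index]


def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss; pair i of images and texts belongs together."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j]: scaled cosine similarity between image i and text j.
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return 0.5 * (image_to_text + text_to_image)


image_embs = [[1.0, 0.0], [0.0, 1.0]]
matched = clip_style_loss(image_embs, [[2.0, 0.1], [0.1, 2.0]])
mismatched = clip_style_loss(image_embs, [[0.1, 2.0], [2.0, 0.1]])
```

The loss is low when each image is most similar to its own caption and high when the captions are swapped, which is the signal that pulls the two modalities into a shared embedding space.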

Practical Applications and Use Cases

Transfer Learning and Domain Adaptation are widely used in practical applications, ranging from computer vision to natural language processing. In computer vision, pre-trained models like VGG and ResNet are commonly used as backbones for tasks such as object detection, image classification, and semantic segmentation. For example, the Faster R-CNN detector uses a pre-trained backbone for feature extraction and is a common choice for object detection, including in autonomous driving research. In NLP, pre-trained models like BERT and RoBERTa are fine-tuned for tasks such as sentiment analysis, named entity recognition, and text classification. Large language models such as GPT-3 take this further: after pre-training on a large text corpus, GPT-3 can generate coherent and contextually relevant text for a wide range of applications, from chatbots to content generation, often from just a few examples in the prompt rather than through fine-tuning.

These techniques are suitable for these applications because they allow models to leverage the vast amounts of data and computational resources used in pre-training, leading to better performance and faster convergence. For example, in medical imaging, pre-trained models can be fine-tuned on a small dataset of medical images, enabling accurate diagnosis with limited data. In natural language processing, pre-trained models can be adapted to understand and generate text in specific domains, such as legal or medical documents, by fine-tuning on a small corpus of domain-specific text.

In practice, the performance characteristics of these models are often impressive. Pre-trained models can achieve strong results with significantly fewer training examples and computational resources than models trained from scratch. For instance, a pre-trained BERT model can achieve high accuracy on a text classification task with just a few thousand labeled examples, whereas a model trained from scratch would typically need far more labeled data to reach similar performance. This makes transfer learning and domain adaptation particularly valuable in scenarios where data is limited or expensive to obtain.

Technical Challenges and Limitations

Despite their advantages, Transfer Learning and Domain Adaptation face several technical challenges and limitations. One of the main challenges is the issue of negative transfer, where the pre-trained model's features are not beneficial for the new task and can even degrade performance. This can occur when the source and target tasks or domains are too dissimilar. Careful selection of the pre-trained model and the amount of fine-tuning is crucial to mitigate this risk.

Another challenge is the computational requirements of these techniques. While fine-tuning a pre-trained model is generally less resource-intensive than training from scratch, it still requires significant computational power, especially for large models like BERT or GPT-3. This can be a barrier for organizations with limited resources. Additionally, the need for large pre-trained models can lead to scalability issues, as these models may not fit into memory or may require specialized hardware, such as GPUs or TPUs, to run efficiently.
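
A back-of-the-envelope calculation shows why memory becomes a constraint. The sketch below multiplies parameter count by bytes per parameter, using the commonly cited sizes of roughly 110 million parameters for BERT-base and 175 billion for GPT-3. It counts weights only; the function name is an assumption for this example.

```python
def weights_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


bert_base_fp32_gb = weights_memory_gb(110e6, 4)   # 32-bit floats
gpt3_fp16_gb = weights_memory_gb(175e9, 2)        # 16-bit floats
```

This gives about 0.44 GB for BERT-base in 32-bit precision and about 350 GB for GPT-3 even in 16-bit precision, far more than a single typical accelerator holds. Fine-tuning needs additional memory on top of this for gradients, optimizer state, and activations.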

Research directions addressing these challenges include model compression methods, such as pruning (sparsity) and quantization, which reduce the computational and memory requirements of large pre-trained models. Another approach is knowledge distillation, where a smaller, more efficient model is trained to mimic the behavior of a larger, pre-trained model. This can make the benefits of transfer learning accessible to a wider range of applications and users.
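
The core of knowledge distillation is a loss that compares the student's output distribution with the teacher's after both have been softened with a temperature. The sketch below shows that term in isolation, following the common formulation with a KL divergence scaled by the squared temperature; in practice it is usually combined with the ordinary loss on the true labels. The logit values are made up.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a temperature (higher means softer)."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax_with_temperature(teacher_logits, temperature)
    p_student = softmax_with_temperature(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    return kl * temperature ** 2


teacher_logits = [4.0, 1.0, 0.5]
loss_if_identical = distillation_loss(teacher_logits, teacher_logits)
loss_if_different = distillation_loss([0.5, 1.0, 4.0], teacher_logits)
```

The softened teacher distribution carries information about which wrong classes are "almost right", which is what the student learns from beyond the hard labels.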

Future Developments and Research Directions

Emerging trends in Transfer Learning and Domain Adaptation include the development of more robust and adaptable models. One active research direction is the use of meta-learning to create models that can quickly adapt to new tasks with minimal data. This is particularly relevant in dynamic environments where the data distribution can change rapidly. Another trend is the integration of multi-modal data, where models are trained to understand and generate information from multiple types of data, such as text, images, and audio. This can lead to more versatile and context-aware models, capable of handling a wider range of tasks.

Potential breakthroughs on the horizon include more efficient and scalable pre-training methods. Self-supervised learning already reduces the need for labeled data, and ongoing work aims to reduce the computational cost of pre-training as well. Additionally, the use of reinforcement learning to guide the adaptation process, where the model learns to adapt to new tasks through trial and error, is an exciting area of research. This could lead to more autonomous and adaptive systems, capable of continuously improving their performance in real-world applications.

From an industry perspective, the adoption of transfer learning and domain adaptation is expected to increase, driven by the need for more efficient and effective AI solutions. Companies are increasingly investing in pre-trained models and tools to facilitate the adaptation process, making these techniques more accessible to developers and researchers. From an academic perspective, there is growing interest in the theoretical foundations of these techniques, such as the conditions under which transfer learning is most effective and the trade-offs between different adaptation methods. This should lead to more principled and reliable approaches to building and deploying AI systems.