Introduction and Context

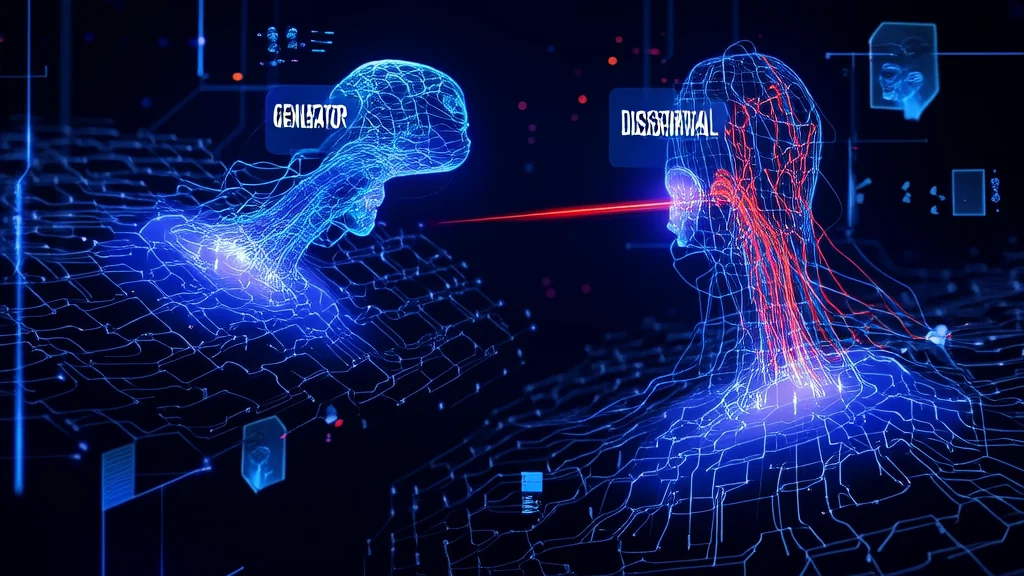

Generative Adversarial Networks (GANs) are a class of machine learning frameworks that have revolutionized the field of generative modeling. GANs consist of two neural networks, a generator and a discriminator, that are trained simultaneously through an adversarial process. The generator creates new data instances, while the discriminator evaluates them for authenticity; i.e., whether they are real or fake. This competitive dynamic drives both networks to improve their performance, leading to the generation of highly realistic synthetic data.

GANs were introduced by Ian Goodfellow and his colleagues in 2014, and since then, they have become a cornerstone in the field of deep learning. Their importance lies in their ability to generate high-quality, diverse, and realistic data, which has applications in various domains such as image synthesis, video generation, and even text and music creation. GANs address the challenge of generating complex, high-dimensional data, which is a significant problem in many areas of artificial intelligence. They have been particularly impactful in fields where large amounts of labeled data are scarce or expensive to obtain.

Core Concepts and Fundamentals

The fundamental principle behind GANs is the adversarial training process, where the generator and discriminator compete against each other. The generator aims to create data that is indistinguishable from real data, while the discriminator tries to correctly classify data as either real or fake. This competition is modeled as a minimax game, where the generator seeks to minimize the discriminator's ability to distinguish between real and fake data, and the discriminator seeks to maximize its accuracy in this task.

Mathematically, the goal of the generator \(G\) is to map a random noise vector \(z\) to a data point \(x\), i.e., \(G(z)\). The discriminator \(D\) is a binary classifier that outputs a probability \(D(x)\) indicating the likelihood that \(x\) is a real data point. The objective function for the GAN can be written as:

\[ \min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log (1 - D(G(z)))] \]

Here, \(V(D, G)\) is the value function, \(p_{data}(x)\) is the distribution of real data, and \(p_z(z)\) is the prior on input noise variables. The first term encourages the discriminator to assign high probabilities to real data, while the second term encourages it to assign low probabilities to generated data. The generator appears only in the second term and is trained to minimize it, that is, to push \(D(G(z))\) toward 1.
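As a rough illustration, the value function can be estimated from a batch of discriminator outputs. The sketch below is plain Python; the function name `gan_value` and the sample probabilities are made up for the example. It shows that a discriminator that outputs 0.5 for every input gives \(V = -\log 4\), the value reached when generated and real data are indistinguishable, while a discriminator that separates the two batches well gives a larger value.

```python
import math

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) from discriminator outputs.

    d_real: values D(x) for a batch of real samples x.
    d_fake: values D(G(z)) for a batch of generated samples.
    Every value must lie strictly between 0 and 1.
    """
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term

# Illustrative numbers only: a discriminator that separates the batches well...
confident = gan_value([0.9, 0.8, 0.95], [0.1, 0.2, 0.05])
# ...and one that cannot tell real from generated data at all.
fooled = gan_value([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

print(confident, fooled)
```

The discriminator tries to raise this number and the generator tries to lower it, which is the minimax structure of the objective.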

The core components of a GAN are the generator and the discriminator. The generator is typically a deep neural network that takes a random noise vector as input and outputs a synthesized data point. The discriminator is another deep neural network that takes a data point as input and outputs a probability score. The generator and discriminator are trained in an alternating fashion: the generator is updated to produce more convincing data, and the discriminator is updated to better distinguish real from fake data.

Compared to other generative models like Variational Autoencoders (VAEs) and autoregressive models, GANs offer several advantages. GANs do not require an explicit, tractable density: the generator is trained only through samples, which makes the approach flexible and, for images, has often produced sharper samples than VAEs. The trade-off is that a GAN does not provide a likelihood that could be used to evaluate the model. GANs also come with challenges such as mode collapse, where the generator fails to explore the full space of possible data points, and training instability, which can make them difficult to train effectively.

Technical Architecture and Mechanics

The architecture of a GAN consists of two main components: the generator and the discriminator. The generator \(G\) is a neural network that maps a random noise vector \(z\) to a data point \(x\). The discriminator \(D\) is a neural network that takes a data point \(x\) as input and outputs a scalar value representing the probability that \(x\) is a real data point.

Generator: The generator is typically a deep convolutional neural network (CNN) or a fully connected network. It takes a random noise vector \(z\) as input and generates a data point \(x\). For example, in the case of image generation, the generator might take a 100-dimensional noise vector and output a 64x64 RGB image. The generator's architecture often includes up-sampling layers, such as transposed convolutions, to increase the spatial dimensions of the output.

Discriminator: The discriminator is also a neural network, often a CNN, that takes a data point \(x\) as input and outputs a scalar value. This value represents the probability that \(x\) is a real data point. The discriminator's architecture typically includes down-sampling layers, such as convolutions and pooling, to reduce the spatial dimensions of the input and extract features.
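To make the up-sampling and down-sampling concrete, the following sketch traces spatial sizes through a generator and a discriminator. The layer settings (kernel 4, stride 2, padding 1, in the style of DCGAN) are an assumption chosen for illustration, not something this article prescribes, and the noise vector is treated as a 1x1 feature map with 100 channels. The two size formulas are the standard ones for convolutions and transposed convolutions without dilation or output padding.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

def conv_out(size, kernel, stride, padding):
    """Output size of a regular convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

# Hypothetical layer settings as (kernel, stride, padding) tuples.
GEN_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
DISC_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

def trace(size, layers, step):
    """Return the spatial size before the first layer and after every layer."""
    sizes = [size]
    for kernel, stride, padding in layers:
        size = step(size, kernel, stride, padding)
        sizes.append(size)
    return sizes

gen_sizes = trace(1, GEN_LAYERS, conv_transpose_out)   # noise as a 1x1 map
disc_sizes = trace(64, DISC_LAYERS, conv_out)          # 64x64 input image

print("generator:", gen_sizes)
print("discriminator:", disc_sizes)
```

With these settings the generator grows a 1x1 input to 64x64, and the discriminator mirrors it, shrinking a 64x64 image to a single 1x1 output that can be passed through a sigmoid.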

The training process of a GAN involves alternating updates to the generator and the discriminator. The steps are as follows:

- Initialize the Generator and Discriminator: Start with random weights for both the generator and the discriminator.

- Train the Discriminator: Sample a batch of real data points from the training set and a batch of fake data points generated by the generator. Update the discriminator's weights to maximize the log-likelihood of correctly classifying real and fake data.

- Train the Generator: Generate a batch of fake data points using the current generator. With the discriminator's weights held fixed, update the generator's weights to minimize \(\log(1 - D(G(z)))\), i.e., the log-likelihood of the discriminator correctly classifying the fake data as fake. The gradient is obtained by backpropagating the error through the discriminator and into the generator.

- Repeat: Continue alternating between the discriminator update and the generator update until convergence or a predefined number of epochs.
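The alternating procedure above can be sketched on a one-dimensional toy problem using only the Python standard library. Everything here is an assumption made for illustration: the generator is the affine map `G(z) = a*z + b`, the discriminator is logistic regression `D(x) = sigmoid(w*x + c)`, the real data are drawn from a normal distribution with mean 3, and the gradients are derived by hand. The generator step uses the common non-saturating variant (minimizing \(-\log D(G(z))\)) rather than the original minimax term, because it gives a usable gradient early in training.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=3000, batch=64, lr=0.05, real_mean=3.0, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters: G(z) = a*z + b
    w, c = 0.0, 0.0   # discriminator parameters: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        # Discriminator step: gradient ascent on log D(x) + log(1 - D(G(z))).
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x   # d/dw of log D(x)
            grad_c += 1.0 - d         # d/dc of log D(x)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x           # d/dw of log(1 - D(x))
            grad_c -= d               # d/dc of log(1 - D(x))
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient descent on -log D(G(z)), with D held fixed.
        grad_a = grad_b = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            d = sigmoid(w * (a * z + b) + c)
            grad_a += -(1.0 - d) * w * z
            grad_b += -(1.0 - d) * w
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch
    return a, b, w, c

a, b, w, c = train_toy_gan()
print("generator offset b:", b, "discriminator weight w:", w)
```

Because this discriminator is linear in `x`, it can only detect a difference in means, so the sketch is expected to pull the generator's offset `b` toward the real mean and leaves the spread `a` essentially unconstrained. Practical GANs replace both models with deep networks and rely on automatic differentiation.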

Key design decisions in GANs include the choice of loss functions, the architecture of the generator and discriminator, and the training dynamics. For instance, alternative losses can help stabilize training and improve the quality of generated data: the non-saturating generator loss maximizes \(\log D(G(z))\) instead of minimizing \(\log(1 - D(G(z)))\), which gives stronger gradients when the discriminator confidently rejects generated samples, and the least squares loss replaces the log terms with squared errors. Additionally, techniques like spectral normalization and gradient penalty regularization can further enhance the stability and performance of GANs.
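The effect of the generator loss on gradient strength can be checked with a small calculation. In the sketch below, `s` is the discriminator's pre-sigmoid output (logit) for a generated sample, so \(D(G(z)) = \sigma(s)\); the function names are made up for this example. It compares the derivative of the minimax generator term \(\log(1 - \sigma(s))\) with that of the non-saturating alternative \(-\log \sigma(s)\).

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def saturating_grad(s):
    """d/ds of log(1 - sigmoid(s)), the generator term of the minimax objective."""
    return -sigmoid(s)

def non_saturating_grad(s):
    """d/ds of -log(sigmoid(s)), the non-saturating generator loss."""
    return -(1.0 - sigmoid(s))

# A logit of -5 means the discriminator is confident the sample is fake.
s = -5.0
sat = saturating_grad(s)
non_sat = non_saturating_grad(s)
print(sat, non_sat)
```

When the discriminator rejects a sample confidently, the minimax term yields a gradient close to zero, while the non-saturating loss yields a gradient close to -1, so the generator still receives a learning signal early in training.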

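One of the most significant technical innovations in GANs is the Wasserstein GAN (WGAN), which trains the generator to minimize an estimate of the Earth Mover's distance (EMD, also called the Wasserstein-1 distance) between the real and generated distributions. The discriminator is replaced by a critic that outputs an unbounded score rather than a probability and is constrained to be approximately 1-Lipschitz, originally through weight clipping and later through a gradient penalty. Because the EMD varies smoothly even when the two distributions barely overlap, it provides a more meaningful and stable training signal, which tends to improve training dynamics and the quality of generated data.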
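For intuition about the Earth Mover's distance, the one-dimensional case has a simple closed form: for two equally sized samples, it is the mean absolute difference between the sorted values. The sketch below uses that special case; the function name is hypothetical. A WGAN does not compute the distance this way. It estimates it with a learned critic, which is what makes the idea usable for high-dimensional data such as images.

```python
def wasserstein_1d(xs, ys):
    """Earth Mover's (Wasserstein-1) distance between two equally sized 1D samples."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes samples of equal size")
    # Sorting pairs the smallest value with the smallest, and so on,
    # which is the optimal transport plan in one dimension.
    pairs = zip(sorted(xs), sorted(ys))
    return sum(abs(x - y) for x, y in pairs) / len(xs)

real = [0.0, 1.0, 2.0]
generated = [7.0, 5.0, 6.0]   # the same shape, shifted by 5
distance = wasserstein_1d(real, generated)
print(distance)
```

The distance equals the size of the shift and shrinks steadily as the generated sample moves toward the real one, even when the two samples do not overlap at all.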

Advanced Techniques and Variations

Since their introduction, GANs have seen numerous advancements and variations aimed at addressing their limitations and improving their performance. One of the most notable modern variants is StyleGAN, developed by NVIDIA. StyleGAN introduces a style-based generator that allows for fine-grained control over the generated images. A mapping network first transforms the latent code into an intermediate latent space, and the resulting style vector modulates each layer of the synthesis network. Because early layers govern coarse attributes such as pose and shape and later layers govern fine attributes such as color and texture, these aspects of the image can be adjusted more independently, allowing for more precise and controllable generation.

Another important variation is the Progressive Growing of GANs (PGGAN), which addresses the issue of generating high-resolution images. Training starts with a generator and discriminator that operate on low-resolution images, and new layers are added to both networks step by step to double the resolution, with each new layer faded in smoothly. This lets the model learn large-scale structure first and increasingly fine detail later, which stabilizes training and improves the quality and variation of generated images, especially at high resolutions.
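The growth schedule can be sketched as follows. The helper names are hypothetical, and images are represented as flat lists of pixel values to keep the example free of dependencies. The fade-in blends the up-sampled output of the previous, lower-resolution stage with the output of the newly added layer, using a weight `alpha` that is increased from 0 to 1 during training.

```python
def resolution_schedule(start, final):
    """Resolutions visited during training, doubling at each stage."""
    sizes = [start]
    while sizes[-1] < final:
        sizes.append(sizes[-1] * 2)
    return sizes

def fade_in(old_pixels, new_pixels, alpha):
    """Blend the up-sampled previous-stage output with the new layer's output.

    alpha = 0 uses only the old path; alpha = 1 uses only the new layer.
    """
    return [(1.0 - alpha) * o + alpha * n for o, n in zip(old_pixels, new_pixels)]

stages = resolution_schedule(4, 64)
halfway = fade_in([1.0, 1.0], [3.0, 5.0], alpha=0.5)
print(stages, halfway)
```

Ramping `alpha` gradually avoids a sudden shock to the already trained lower-resolution layers when a new layer is introduced.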

Other notable implementations include BigGAN, a class-conditional model trained on large-scale datasets such as ImageNet to generate high-fidelity images. BigGAN scales up the batch size and the number of parameters and pairs this with a carefully designed architecture to achieve impressive results, but it also requires significant computational resources and training time.

Recent research developments have focused on improving the stability and diversity of GANs. Techniques such as self-attention mechanisms, which let the model relate distant regions of an image rather than relying only on local convolutions, and contrastive learning, which encourages the model to learn more discriminative features, have shown promising results. Additionally, methods like conditional GANs (cGANs), which feed extra information such as a class label to both networks, and cycle-consistent GANs (CycleGANs), which learn mappings between two domains from unpaired examples, have expanded the applicability of GANs to tasks such as image-to-image translation and domain adaptation.
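One simple way to condition a GAN, sketched below, is to append a one-hot encoding of the class label to the noise vector before it enters the generator; the discriminator receives the label in the same way alongside the data point. The function names are hypothetical, and real models often use learned label embeddings instead of raw one-hot vectors.

```python
def one_hot(label, num_classes):
    """One-hot encoding of an integer class label."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def conditional_generator_input(z, label, num_classes):
    """Concatenate the noise vector z with the one-hot label."""
    return list(z) + one_hot(label, num_classes)

# A 2-dimensional noise vector conditioned on class 2 out of 3 classes.
g_input = conditional_generator_input([0.5, -1.0], label=2, num_classes=3)
print(g_input)
```

At generation time the label is chosen by the user, which is what turns an unconditional sampler into one that can produce a requested class.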

Practical Applications and Use Cases

GANs have found a wide range of practical applications across various domains. In the field of computer vision, GANs are used for image synthesis, super-resolution, and image-to-image translation. For example, some deepfake systems, which have gained significant attention, use GANs to generate highly realistic face swaps in videos, while others rely on autoencoder-based approaches. In the medical field, GANs are used for data augmentation, where they generate synthetic medical images to augment limited training datasets, with the aim of improving the performance of diagnostic models.

In the creative arts, GANs are used for generating art, music, and even writing. For instance, the Artbreeder platform uses GANs to create and evolve artistic images, allowing users to blend and modify existing artworks. In the field of natural language processing, GANs have been used for text generation, style transfer, and paraphrasing, although they are less common in this domain compared to other generative models like transformers.

GANs are suitable for these applications because of their ability to generate high-quality, diverse, and realistic data. They can capture complex patterns and structures in the data, making them a powerful tool for tasks that require creativity and high-fidelity output. However, the performance of GANs can vary depending on the specific application and the quality of the training data. In practice, GANs often require careful tuning and significant computational resources to achieve optimal results.

Technical Challenges and Limitations

Despite their many advantages, GANs face several technical challenges and limitations. One of the most significant challenges is mode collapse, where the generator produces a limited variety of outputs, failing to explore the full space of possible data points. This can result in a lack of diversity in the generated data, which is a critical issue in many applications. Another challenge is training instability, where the generator and discriminator fail to converge, leading to oscillations and poor performance. This instability can be exacerbated by the choice of hyperparameters, the architecture of the networks, and the quality of the training data.
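Mode collapse can be made measurable on synthetic data where the modes are known in advance. The sketch below is a hypothetical diagnostic, not a standard metric: it assigns each generated one-dimensional sample to its nearest known mode and reports how many modes received at least one sample within a given radius.

```python
def mode_coverage(samples, modes, radius):
    """Count how many known modes are hit by at least one sample.

    Returns (number_of_covered_modes, per_mode_sample_counts).
    Samples farther than `radius` from every mode are ignored.
    """
    counts = [0] * len(modes)
    for s in samples:
        nearest = min(range(len(modes)), key=lambda i: abs(s - modes[i]))
        if abs(s - modes[nearest]) <= radius:
            counts[nearest] += 1
    covered = sum(1 for c in counts if c > 0)
    return covered, counts

modes = [-4.0, 0.0, 4.0]
collapsed = mode_coverage([3.9, 4.1, 4.0, 3.8], modes, radius=1.0)
diverse = mode_coverage([-4.2, 0.1, 3.9, -3.8], modes, radius=1.0)
print(collapsed, diverse)
```

A generator whose samples all land near a single mode covers one of three modes here, even though each individual sample looks plausible. For real image data the modes are not known, so practitioners rely on indirect measures of diversity instead.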

Computational requirements are another major limitation of GANs. Training GANs, especially high-resolution image generators, requires significant computational resources, including powerful GPUs and large amounts of memory. This can be a barrier to entry for researchers and practitioners without access to such resources. Additionally, scalability issues arise when trying to scale GANs to larger datasets and more complex tasks, as the training time and memory requirements grow rapidly.

Research directions addressing these challenges include the development of more stable training algorithms, such as the use of gradient penalties and spectral normalization. Techniques like self-attention and contrastive learning are also being explored to improve the quality and diversity of generated data. Additionally, there is ongoing work on developing more efficient and scalable GAN architectures, such as those that leverage parallel computing and distributed training.
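Spectral normalization, mentioned above, divides a weight matrix by its largest singular value so that the corresponding linear layer cannot stretch its input by more than a factor of one. A minimal sketch with power iteration in plain Python follows; the helper names are made up, and the fixed starting vector is a simplification (implementations typically start from a random vector and run a single iteration per training step, reusing the result from the previous step).

```python
import math

def mat_vec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose(m):
    return [list(col) for col in zip(*m)]

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def spectral_norm(w, iterations=50):
    """Estimate the largest singular value of matrix w by power iteration."""
    wt = transpose(w)
    v = normalize([1.0] * len(w[0]))
    for _ in range(iterations):
        u = normalize(mat_vec(w, v))    # left singular vector estimate
        v = normalize(mat_vec(wt, u))   # right singular vector estimate
    return sum(a * b for a, b in zip(u, mat_vec(w, v)))

def spectrally_normalize(w, iterations=50):
    """Return w scaled so that its largest singular value is about 1."""
    sigma = spectral_norm(w, iterations)
    return [[x / sigma for x in row] for row in w]

weights = [[3.0, 0.0], [0.0, 1.0]]
sigma = spectral_norm(weights)
normalized = spectrally_normalize(weights)
print(sigma, normalized)
```

Applied to the discriminator's layers, this bounds how quickly its output can change with its input, which is one way to keep the adversarial game from becoming unstable.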

Future Developments and Research Directions

Looking ahead, GANs are expected to continue evolving and expanding their impact across various domains. Emerging trends in this area include the integration of GANs with other machine learning paradigms, such as reinforcement learning and meta-learning, to create more robust and adaptable generative models. There is also growing interest in developing GANs that can handle multimodal data, such as text and images, and that can generate coherent and contextually relevant outputs.

Active research directions include the development of more interpretable and controllable GANs, where the generated data can be manipulated and refined based on user inputs. This could lead to applications in interactive content creation, personalized recommendations, and more. Additionally, there is a focus on improving the efficiency and scalability of GANs, making them more accessible and practical for a wider range of applications.

Potential breakthroughs on the horizon include the development of GANs that can generate data with higher levels of complexity and fidelity, such as 3D models and high-definition videos. Industry and academic perspectives suggest that GANs will play a crucial role in advancing the field of generative AI, driving innovation in areas such as virtual reality, augmented reality, and autonomous systems. As the technology continues to evolve, GANs are likely to become an even more integral part of the AI landscape, enabling new and exciting applications that we can only begin to imagine today.