Introduction and Context

Model compression and optimization are critical techniques in the field of artificial intelligence (AI) aimed at reducing the size, computational requirements, and energy consumption of deep learning models. These techniques enable the deployment of AI models on resource-constrained devices such as smartphones, embedded systems, and edge computing platforms. The primary goal is to preserve the model's accuracy as closely as possible, occasionally even improving it, while substantially reducing its size and computational cost.

The importance of model compression and optimization has grown with the increasing demand for AI in real-world applications. As AI models, particularly deep neural networks, have become larger and more data-hungry, they have also become more computationally expensive, creating a need for efficient methods to deploy them in environments with limited resources. Key milestones include early pruning methods such as Optimal Brain Damage (LeCun et al., 1989) and Optimal Brain Surgeon (Hassibi and Stork, 1993), work in the mid-2000s on compressing large ensembles into single smaller models (Buciluă et al., 2006), the knowledge distillation formulation of Hinton et al. (2015), and a resurgence of pruning and low-bit quantization for deep networks from the mid-2010s onward. These techniques address the technical challenges of deploying large, complex models on devices with limited memory, processing power, and energy.

Core Concepts and Fundamentals

The fundamental principles underlying model compression and optimization are rooted in the idea that many deep learning models contain redundant or unnecessary parameters. By identifying and removing these redundancies, we can create more efficient models without significant loss in performance. Key mathematical concepts include sparsity, which refers to the presence of many zero or near-zero values in the model's parameters, and quantization, which involves representing the model's weights and activations with fewer bits.

Core components of model compression and optimization include:

- Quantization: Reducing the precision of the model's weights and activations, typically from 32-bit floating-point numbers to 8-bit integers or lower.

- Pruning: Removing unimportant or redundant parameters from the model, often by setting them to zero.

- Knowledge Distillation: Training a smaller, more efficient model (student) to mimic the behavior of a larger, more complex model (teacher).

These techniques differ from related approaches such as efficient architecture design, which builds models that are efficient from the outset, and hardware acceleration, which speeds up computation through specialized hardware. Model compression and optimization, by contrast, typically start from an existing, often already-trained model and make it cheaper to store and run, although some variants (such as quantization-aware training or pruning during training) are applied while the model is being trained.

Analogies can help illustrate these concepts. Quantization is like reducing the color depth of an image, storing each pixel with fewer bits: the picture remains recognizable and the file is smaller, but fine gradations are lost. Pruning is like editing a long, detailed document to remove unnecessary words and sentences, leaving only the most important information. Knowledge distillation is akin to a master craftsman teaching an apprentice: the apprentice learns to perform the task almost as well as the master with far less accumulated experience, much as a student model has far fewer parameters than its teacher.

Technical Architecture and Mechanics

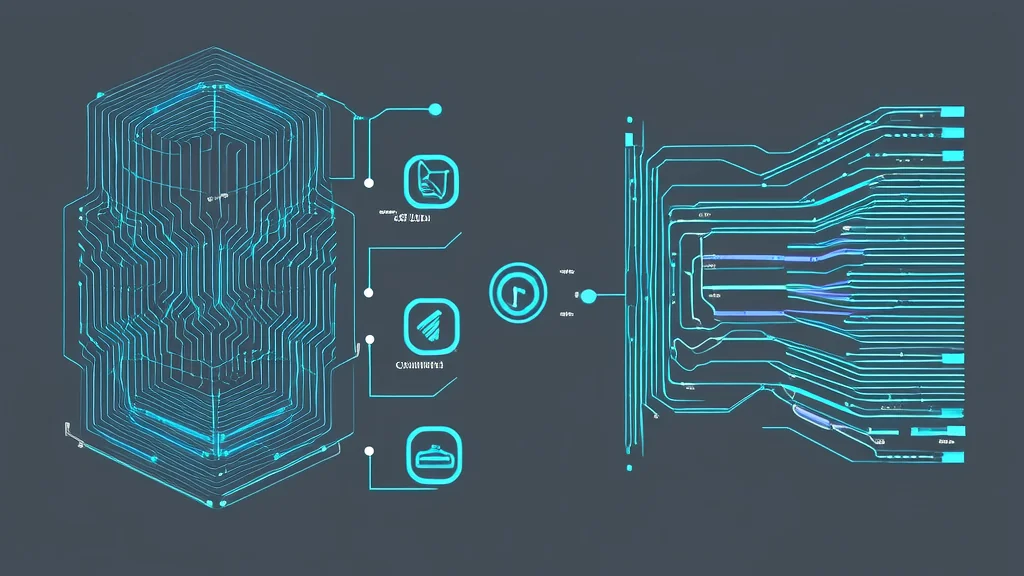

Model compression and optimization involve several steps, each with its own set of techniques and algorithms. Let's delve into the details of each core component.

Quantization is the process of reducing the precision of the model's weights and activations. This can be done with uniform quantization, where the range of values is divided into equal intervals, or non-uniform quantization, where the intervals are adapted to the distribution of the values. For instance, in a transformer model, the attention layers use large projection matrices to compute the relevance of different parts of the input sequence; quantizing these weight matrices (and optionally the activations they produce) reduces the model's memory footprint and computational cost. A common approach is to store values as 8-bit integers instead of 32-bit floating-point numbers, which cuts weight storage by a factor of 4 and, on hardware with efficient integer arithmetic, can also speed up inference.
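A minimal sketch of uniform 8-bit quantization, assuming NumPy and a per-tensor symmetric scheme (one of several common variants; per-channel scales and asymmetric zero points are also widely used). The function names are illustrative rather than any library's API, and the random matrix is hypothetical data standing in for a trained weight matrix.

```python
import numpy as np


def quantize_symmetric_int8(w):
    """Per-tensor symmetric uniform quantization of float weights to int8."""
    max_abs = float(np.max(np.abs(w)))
    # One scale for the whole tensor: the largest magnitude maps to +/-127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float32 values."""
    return q.astype(np.float32) * np.float32(scale)


rng = np.random.default_rng(0)
# Stand-in for a trained float32 weight matrix (hypothetical data).
w = rng.normal(0.0, 0.02, size=(256, 256)).astype(np.float32)

q, scale = quantize_symmetric_int8(w)
w_hat = dequantize(q, scale)

print("float32 bytes:", w.nbytes)  # 262144
print("int8 bytes:", q.nbytes)     # 65536, i.e. 4x smaller (plus one float for the scale)
# Rounding to the nearest level bounds the per-element error by about scale / 2.
print("max abs error:", float(np.max(np.abs(w - w_hat))), "scale / 2:", scale / 2)
```

The 4x figure applies to weight storage; whether inference also gets faster depends on the hardware and runtime supporting int8 arithmetic.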

Pruning involves removing unimportant or redundant parameters from the model. Common approaches include magnitude-based pruning, where the weights with the smallest absolute values are set to zero, and structured pruning, where entire filters, channels, or layers are removed. For example, in a convolutional neural network (CNN), pruning can be applied to the convolutional filters. The process typically involves three steps:

- Training the model: Train the model to convergence using standard techniques.

- Pruning the model: Identify and remove the least important parameters, often by setting them to zero.

- Fine-tuning the model: Retrain the pruned model to recover any lost performance.
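The three steps above can be sketched on a toy problem. This assumes NumPy and uses a linear least-squares model in place of a CNN to keep the example small; `magnitude_prune` and `train` are hypothetical helpers, and holding a fixed binary mask during fine-tuning is one common way (not the only one) to keep pruned weights at zero.

```python
import numpy as np


def magnitude_prune(w, sparsity):
    """Return a 0/1 mask that zeroes the `sparsity` fraction of smallest-|w| entries."""
    k = int(round(sparsity * w.size))  # number of weights to remove
    mask = np.ones(w.size, dtype=w.dtype)
    if k > 0:
        idx = np.argsort(np.abs(w), axis=None)[:k]  # k smallest magnitudes (flattened)
        mask[idx] = 0
    return mask.reshape(w.shape)


def train(X, y, w, mask=None, lr=0.1, steps=500):
    """Gradient descent on mean squared error; `mask` keeps pruned weights at zero."""
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
        if mask is not None:
            w = w * mask  # re-apply the mask after every update
    return w


def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))
true_w = np.where(rng.random(20) < 0.5, rng.normal(size=20), 0.0)  # hypothetical sparse target
y = X @ true_w + 0.01 * rng.normal(size=200)

w = train(X, y, np.zeros(20))            # 1. train to (approximate) convergence
mask = magnitude_prune(w, sparsity=0.5)  # 2. prune the 50% smallest-magnitude weights
w_pruned = w * mask
w_ft = train(X, y, w_pruned, mask=mask)  # 3. fine-tune with the mask held fixed

print("dense MSE:     ", mse(X, y, w))
print("pruned MSE:    ", mse(X, y, w_pruned))
print("fine-tuned MSE:", mse(X, y, w_ft))
print("sparsity:      ", float(np.mean(w_ft == 0)))
```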

Knowledge distillation involves training a smaller, more efficient model (student) to mimic the behavior of a larger, more complex model (teacher). The student is typically trained with a loss that combines the usual objective on the ground-truth labels with a term that matches the teacher's output distribution. This lets the student learn the general patterns and representations the teacher has captured, even with a simpler architecture. For example, in the paper "Distilling the Knowledge in a Neural Network" by Geoffrey Hinton et al., a student was trained to match the teacher's soft probabilities, produced by a softmax with a raised temperature. The distilled student performed markedly better than the same small architecture trained on hard labels alone, recovering much of the gap to the larger teacher with far fewer parameters.
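One common way to write the combined objective is sketched below, assuming NumPy, integer class labels, and a weighting parameter `alpha` (the names and default values are illustrative assumptions). Following Hinton et al., the soft-target term compares temperature-softened distributions and is multiplied by T squared so that its gradients stay on a scale comparable to the hard-label term as T changes.

```python
import numpy as np


def log_softmax(logits, T=1.0):
    """Numerically stable log-softmax of logits / T along the last axis."""
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(labels, student)."""
    log_p_teacher = log_softmax(teacher_logits, T)
    log_p_student_T = log_softmax(student_logits, T)
    p_teacher = np.exp(log_p_teacher)
    # KL between softened teacher and student, averaged over the batch. It differs from
    # the soft-target cross-entropy only by the teacher's entropy, a constant for the student.
    kl = np.sum(p_teacher * (log_p_teacher - log_p_student_T), axis=-1).mean()
    soft = (T ** 2) * kl
    # Standard cross-entropy against the ground-truth class indices, at temperature 1.
    log_p_student = log_softmax(student_logits, 1.0)
    hard = -log_p_student[np.arange(len(labels)), labels].mean()
    return alpha * soft + (1.0 - alpha) * hard


rng = np.random.default_rng(0)
teacher_logits = rng.normal(size=(8, 10)) * 3.0  # hypothetical batch: 8 examples, 10 classes
student_logits = rng.normal(size=(8, 10))
labels = rng.integers(0, 10, size=8)

print(distillation_loss(student_logits, teacher_logits, labels))
```

In training, this loss would be minimized with respect to the student's parameters only; the teacher's logits are fixed targets.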

Key design decisions in model compression and optimization include the choice of quantization method, the pruning strategy, and the distillation technique. These decisions are usually guided by the requirements of the application, such as the available hardware, the required accuracy, and the acceptable trade-off between model size and performance. Technical innovations in this area include mixed-precision quantization, where different layers are quantized to different bit widths depending on how sensitive they are, and the use of reinforcement learning to search automatically for a good compression strategy.
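To make mixed-precision quantization concrete, here is a deliberately simple, hypothetical bit-width selection heuristic, assuming NumPy: each layer receives the smallest candidate bit width whose relative weight-reconstruction error stays below a tolerance. Practical systems usually measure sensitivity on the task loss or use a learned search (such as reinforcement learning) instead; the layer names, distributions, and tolerance below are made up for illustration.

```python
import numpy as np


def fake_quantize(w, num_bits):
    """Symmetric uniform quantize-then-dequantize; returns floats on the quantized grid."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return np.clip(np.round(w / scale), -qmax, qmax) * scale


def choose_bit_widths(layers, candidates=(2, 4, 8), tol=0.05):
    """For each layer, pick the smallest bit width with relative error below `tol`."""
    chosen = {}
    for name, w in layers.items():
        chosen[name] = max(candidates)  # fall back to the widest option
        for bits in sorted(candidates):
            rel_err = np.linalg.norm(w - fake_quantize(w, bits)) / np.linalg.norm(w)
            if rel_err < tol:
                chosen[name] = bits
                break
    return chosen


rng = np.random.default_rng(0)
layers = {  # hypothetical layers with different weight distributions
    "attn.q_proj": rng.uniform(-1.0, 1.0, size=(128, 128)),
    "mlp.fc1": rng.standard_t(df=2, size=(128, 512)),  # heavy tails: outliers stretch the range
}
bits = choose_bit_widths(layers, tol=0.1)
print(bits)

# Weight storage in bytes under the chosen widths vs. float32 everywhere.
mixed_bytes = sum(w.size * bits[name] / 8 for name, w in layers.items())
fp32_bytes = sum(w.size * 4 for w in layers.values())
print(mixed_bytes, fp32_bytes)
```

Layers with outlier-heavy weights tend to need more bits under this rule, because a single per-tensor scale must cover the largest value.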

Advanced Techniques and Variations

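Modern variations and improvements in model compression and optimization have produced many of today's widely used methods. For example, in dynamic quantization, weights are quantized ahead of time while the quantization parameters for activations (such as the scale) are computed on the fly from each input during inference; this can give better accuracy than static quantization, which fixes activation ranges in advance from calibration data, at the cost of some runtime overhead. Another advanced technique is channel pruning, where entire channels of a CNN are removed; because the remaining weight tensors are smaller but still dense, the pruned model runs faster on standard hardware without special support for sparse computation. Recent research has also explored neural architecture search (NAS) to automatically discover efficient model architectures that can be further compressed.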
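A sketch of channel (filter) pruning between two consecutive convolutional layers, assuming NumPy arrays in (out_channels, in_channels, kernel_h, kernel_w) layout. It ranks the first layer's filters by L1 norm, one common importance criterion among several, and slices the matching input channels of the second layer so the network stays consistent. The helper name and shapes are hypothetical; a full implementation would also update any batch-normalization parameters attached to the pruned channels.

```python
import numpy as np


def prune_conv_channels(w1, b1, w2, keep_ratio=0.5):
    """Remove the lowest-L1-norm output channels of conv layer 1 and the matching
    input channels of the following conv layer 2.

    w1: (out1, in1, kh, kw), b1: (out1,), w2: (out2, out1, kh2, kw2).
    Returns smaller, still dense, tensors plus the indices of the kept channels.
    """
    out1 = w1.shape[0]
    n_keep = max(1, int(round(keep_ratio * out1)))
    scores = np.abs(w1).reshape(out1, -1).sum(axis=1)  # one L1 norm per filter
    keep = np.sort(np.argsort(scores)[-n_keep:])       # largest-norm filters, original order
    return w1[keep], b1[keep], w2[:, keep], keep


rng = np.random.default_rng(0)
w1 = rng.normal(size=(64, 3, 3, 3))    # conv1: 3 -> 64 channels, 3x3 kernels
b1 = rng.normal(size=64)
w2 = rng.normal(size=(128, 64, 3, 3))  # conv2: 64 -> 128 channels

w1_p, b1_p, w2_p, keep = prune_conv_channels(w1, b1, w2, keep_ratio=0.5)
print(w1_p.shape, b1_p.shape, w2_p.shape)  # (32, 3, 3, 3) (32,) (128, 32, 3, 3)

before = w1.size + b1.size + w2.size
after = w1_p.size + b1_p.size + w2_p.size
print(before, after)  # 75520 37760
```

Unlike the magnitude-pruning mask, the result here is a genuinely smaller dense model, which is why structured pruning tends to translate directly into speedups.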

Different approaches to model compression and optimization have their own trade-offs. For instance, quantization can significantly reduce memory usage and computational cost but may introduce some loss in accuracy. Pruning can achieve high levels of sparsity and reduce the number of parameters, but it requires careful fine-tuning to recover performance, and unstructured sparsity yields real speedups only when the hardware or runtime supports sparse computation. Knowledge distillation can produce accurate and efficient models but requires a capable teacher model, which can be computationally expensive to train.

Recent research developments include low-rank factorization, where the weight matrices of the model are approximated by products of smaller, lower-rank matrices, and the integration of model compression with federated learning, where models are trained collaboratively across many devices and compression reduces the cost of exchanging model updates. These advancements aim to make AI models more efficient and scalable, enabling their deployment in a wider range of applications.
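Low-rank factorization of a single weight matrix can be sketched with a truncated singular value decomposition (SVD), assuming NumPy. Replacing an m x n matrix W by factors A (m x r) and B (r x n) reduces the parameter count from m*n to r*(m + n), and a layer computing W @ x becomes A @ (B @ x). The synthetic matrix below is hypothetical data built to be approximately low-rank; trained weight matrices are typically less so, which is why factorized layers are often fine-tuned afterwards.

```python
import numpy as np


def low_rank_factorize(W, rank):
    """Best rank-`rank` approximation W ~= A @ B via truncated SVD (Eckart-Young)."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * S[:rank]  # fold the singular values into the left factor
    B = Vt[:rank, :]
    return A, B


rng = np.random.default_rng(0)
m, n = 512, 512
# Synthetic, approximately rank-16 matrix plus a little noise (hypothetical data).
W = rng.normal(size=(m, 16)) @ rng.normal(size=(16, n)) + 0.01 * rng.normal(size=(m, n))

A, B = low_rank_factorize(W, rank=32)
x = rng.normal(size=n)
rel_err = np.linalg.norm(W - A @ B) / np.linalg.norm(W)

print("params before:", W.size)          # 262144
print("params after:", A.size + B.size)  # 32768
print("relative error:", rel_err)
print("output difference:", np.linalg.norm(W @ x - A @ (B @ x)))
```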

Practical Applications and Use Cases

Model compression and optimization are widely used in various practical applications, including mobile and embedded systems, autonomous vehicles, and edge computing. For example, Google's TensorFlow Lite framework provides tools for quantizing and optimizing models for deployment on mobile devices. In the context of autonomous vehicles, NVIDIA's Drive PX platform uses model compression to run deep learning models in real-time, enabling features such as object detection and lane tracking. In edge computing, companies like Amazon and Microsoft offer services that allow users to deploy optimized AI models on edge devices, providing low-latency and high-performance inference.

These techniques are suitable for these applications because they enable the deployment of AI models in environments with limited resources. For instance, in a smartphone, the available memory and processing power are constrained, making it essential to use efficient models. Similarly, in autonomous vehicles, real-time performance is critical, and model compression can help achieve this by reducing the computational requirements of the models. In edge computing, the ability to run AI models locally, without relying on cloud connectivity, is crucial for applications such as industrial automation and smart cities.

Performance characteristics in practice vary depending on the specific application and the chosen compression and optimization techniques. For example, open large language models such as those in the LLaMA family are frequently quantized to 8 or 4 bits with post-training methods such as GPTQ, which lets models with billions of parameters fit on a single GPU or, in some cases, on consumer hardware. On the hardware side, Google's TPUs (Tensor Processing Units) are built around reduced-precision arithmetic: the first-generation TPU ran inference with 8-bit integer matrix multiplication, and later generations rely heavily on the bfloat16 format, so quantized and reduced-precision models map efficiently onto them.

Technical Challenges and Limitations

Despite the significant benefits, model compression and optimization face several technical challenges and limitations. One of the main challenges is the trade-off between model size and accuracy. While reducing the precision of the model's weights and activations can lead to significant reductions in memory usage and computational cost, it can also introduce quantization errors that degrade the model's performance. Similarly, pruning can lead to a loss of important information, requiring careful fine-tuning to recover performance.

Another challenge is the computational cost of the compression and optimization processes themselves. For example, knowledge distillation needs a trained teacher model, which may have to be trained from scratch if none exists, and the teacher must be run over the training data to produce targets. Additionally, iterative pruning techniques such as iterative magnitude pruning alternate between pruning and retraining over many rounds, which can be time-consuming and resource-intensive.
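A quick calculation shows how the cost of iterative magnitude pruning adds up. Assuming each round removes a fixed fraction of the weights that are still unpruned and is followed by retraining (a common schedule, though not the only one), the hypothetical helper below counts how many prune-and-retrain rounds are needed to reach a target sparsity.

```python
def imp_rounds(target_sparsity, prune_frac_per_round):
    """Number of prune-and-retrain rounds needed to reach `target_sparsity` when each
    round removes `prune_frac_per_round` of the weights that are still unpruned."""
    if not (0.0 <= target_sparsity < 1.0 and 0.0 < prune_frac_per_round < 1.0):
        raise ValueError("need 0 <= target_sparsity < 1 and 0 < prune_frac_per_round < 1")
    remaining, rounds = 1.0, 0
    while 1.0 - remaining < target_sparsity:
        remaining *= 1.0 - prune_frac_per_round  # each round keeps (1 - p) of what is left
        rounds += 1
    return rounds


print(imp_rounds(0.90, 0.2))  # 11
print(imp_rounds(0.99, 0.2))  # 21
```

With 20% of the remaining weights pruned per round, reaching 90% sparsity takes 11 retraining phases and 99% takes 21, which is why the total training budget can be many times that of the original model.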

Scalability is another issue, especially when dealing with very large models and datasets. Compressing and optimizing models with billions of parameters, such as those used in natural language processing and computer vision, can be challenging due to the sheer scale of the problem. Research directions addressing these challenges include the development of more efficient quantization and pruning algorithms, the use of hardware-accelerated training, and the integration of model compression with distributed and federated learning frameworks.

Future Developments and Research Directions

Emerging trends in model compression and optimization include the use of more sophisticated quantization and pruning techniques, the integration of model compression with hardware acceleration, and the development of new distillation methods. Active research directions include the use of reinforcement learning to automatically find the best compression strategy, the exploration of low-rank factorization and tensor decomposition for further model reduction, and the integration of model compression with privacy-preserving techniques such as differential privacy.

Potential breakthroughs on the horizon include the development of fully automated model compression pipelines, where the entire process of training, compressing, and optimizing a model is handled by a single, end-to-end system. This could significantly reduce the time and effort required to deploy efficient AI models. Additionally, the integration of model compression with emerging hardware technologies, such as neuromorphic computing and quantum computing, could lead to new paradigms for efficient and scalable AI.

From an industry perspective, there is a growing demand for tools and frameworks that simplify the process of model compression and optimization. Companies such as Google, Microsoft, and NVIDIA are actively developing and releasing such tools, making it easier for developers to deploy efficient AI models. From an academic perspective, there is a continued focus on understanding the theoretical foundations of model compression and optimization, as well as exploring new and innovative techniques to push the boundaries of what is possible.