Introduction and Context

Transfer learning and domain adaptation are key machine learning techniques that let models reuse knowledge acquired on one problem to improve performance on new, related ones. Transfer learning takes a model trained on one task and applies it to a different but related task, often with only limited retraining. Domain adaptation, a special case of transfer learning, usually keeps the task fixed and adapts the model to a new domain whose data distribution differs, possibly substantially, from that of the original training data.

These techniques matter most in deep learning, where models typically need large amounts of labeled data to perform well. By starting from pre-trained models, transfer learning and domain adaptation can substantially reduce the labeled data and computation needed for training. The ideas have developed over several decades; notable milestones include systematic studies of how well features in deep networks transfer between tasks (e.g., Yosinski et al., 2014) and adversarial domain adaptation via gradient reversal (e.g., Ganin & Lempitsky, 2015). Together they address data scarcity and distribution shift, making it possible to deploy machine learning models in a wide range of real-world applications.

Core Concepts and Fundamentals

The fundamental principle behind transfer learning is that features learned by a model on one task can be useful for another. For example, a convolutional neural network (CNN) trained for image classification learns to recognize edges, textures, and shapes, which are also useful for other computer vision tasks such as object detection or segmentation. The key mathematical concept here is representation learning: the model learns a mapping from input data to a feature space that captures the information relevant to the task at hand.

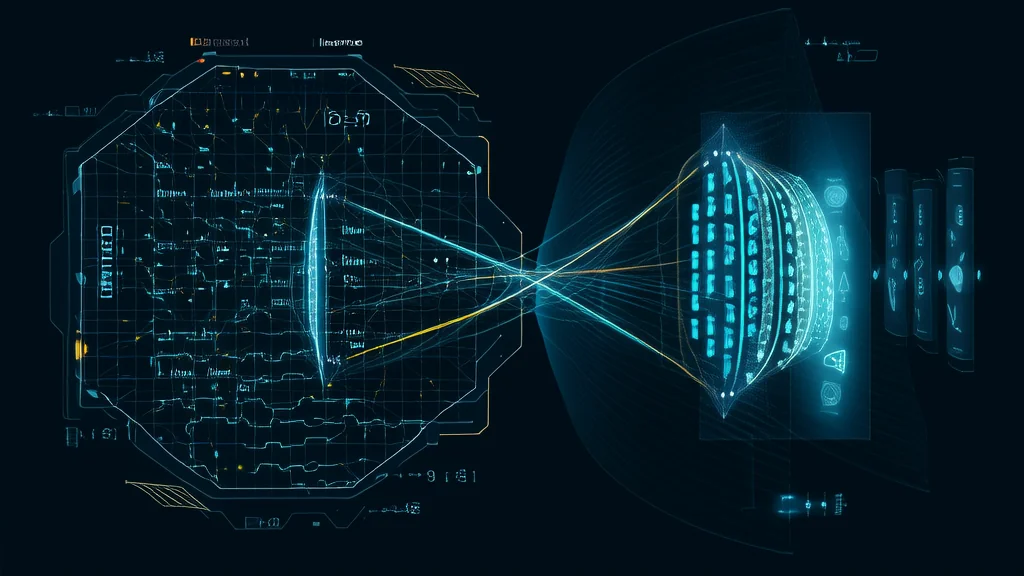

In domain adaptation, the goal is to align the feature distributions of the source and target domains. This is often achieved through adversarial training, where a discriminator is trained to distinguish source features from target features while the feature extractor is trained to confuse the discriminator. Another approach is instance weighting, where source samples are reweighted, ideally by the density ratio p_target(x) / p_source(x), so that the weighted source data better matches the target distribution.
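Instance weighting can be sketched for a single scalar feature. This is a minimal illustration under simplifying assumptions: the density ratio is estimated by comparing normalized histograms over a fixed range `[lo, hi]`, and the function names `histogram_importance_weights` and `weighted_loss` are hypothetical. Practical methods use better density-ratio estimators, especially in high dimensions.

```python
from collections import Counter


def histogram_importance_weights(source, target, num_bins=10, lo=0.0, hi=1.0):
    """Estimate w(x) = p_target(x) / p_source(x) for each 1-D source sample
    by comparing normalized histograms of the two samples (hypothetical helper)."""
    width = (hi - lo) / num_bins

    def bin_of(x):
        # Clamp so values on or outside [lo, hi] fall into the outer bins.
        return min(num_bins - 1, max(0, int((x - lo) / width)))

    src_counts = Counter(bin_of(x) for x in source)
    tgt_counts = Counter(bin_of(x) for x in target)
    weights = []
    for x in source:
        b = bin_of(x)
        p_s = src_counts[b] / len(source)  # > 0 because x itself lies in bin b
        p_t = tgt_counts[b] / len(target)  # may be 0 if no target sample lands here
        weights.append(p_t / p_s)
    return weights


def weighted_loss(per_sample_losses, weights):
    """Importance-weighted mean of per-sample source losses, an estimate of
    the expected loss under the target distribution."""
    return sum(w * l for w, l in zip(weights, per_sample_losses)) / len(per_sample_losses)


source = [0.1, 0.1, 0.9, 0.9]
target = [0.9, 0.9, 0.9, 0.1]
weights = histogram_importance_weights(source, target, num_bins=2)
estimate = weighted_loss([1.0, 1.0, 2.0, 2.0], weights)
```

Here the target has more mass near 0.9, so those source samples get weight 1.5 and the others 0.5. If the per-sample loss depends only on the bin (1.0 near 0.1, 2.0 near 0.9), the weighted source loss equals the average loss over the target sample, 1.75.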

Core components of transfer learning and domain adaptation include the pre-trained model (or feature extractor), the target task-specific layers, and, in adversarial domain adaptation, the domain discriminator. The pre-trained model provides a strong initial set of features, which are then fine-tuned or adapted to the target task. The task-specific layers make the final predictions, while the domain discriminator, if present, helps to reduce the discrepancy between the source and target feature distributions.

Transfer learning and domain adaptation differ from related technologies like multi-task learning and meta-learning. Multi-task learning aims to learn multiple related tasks simultaneously, sharing representations across tasks, while meta-learning focuses on learning how to learn, enabling the model to quickly adapt to new tasks with minimal data. In contrast, transfer learning and domain adaptation focus on leveraging pre-existing knowledge to improve performance on a specific new task or domain.

Technical Architecture and Mechanics

The architecture of a transfer learning system typically consists of a pre-trained model followed by task-specific layers. For example, in a CNN-based image classification task, the pre-trained model might be a ResNet-50, and the task-specific layers could be a fully connected layer followed by a softmax activation. A common recipe is to first freeze the pre-trained model and train only the task-specific layers on the new dataset. Once those layers converge, the pre-trained model can be unfrozen and the entire model fine-tuned on the new dataset with a smaller learning rate.
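The two-phase recipe can be illustrated on a toy model. This is a sketch, not a real training setup: the "pre-trained backbone" is assumed to be a single linear feature `h = w_b * x + b_b` whose starting values stand in for pre-trained weights, the head is `y_hat = w_h * h`, and gradients are written out by hand. Freezing simply means skipping a parameter's update.

```python
def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def forward(params, xs):
    # Toy "backbone": one feature h = w_b * x + b_b. Toy "head": y_hat = w_h * h.
    feats = [params["w_b"] * x + params["b_b"] for x in xs]
    return feats, [params["w_h"] * h for h in feats]


def train(params, xs, ys, trainable, lr, steps):
    """Plain gradient descent on MSE; parameters not listed in `trainable` stay frozen."""
    n = len(xs)
    for _ in range(steps):
        feats, preds = forward(params, xs)
        r = [p - y for p, y in zip(preds, ys)]
        # Hand-derived gradients of the mean squared error.
        grads = {
            "w_h": sum(2 * ri * h for ri, h in zip(r, feats)) / n,
            "w_b": sum(2 * ri * params["w_h"] * x for ri, x in zip(r, xs)) / n,
            "b_b": sum(2 * ri * params["w_h"] for ri in r) / n,
        }
        for name in trainable:
            params[name] -= lr * grads[name]
    return mse(forward(params, xs)[1], ys)


xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 3 for x in xs]
params = {"w_b": 1.0, "b_b": 0.5, "w_h": 0.0}  # backbone values stand in for pre-trained ones
loss0 = mse(forward(params, xs)[1], ys)

# Phase 1: backbone frozen, only the head is trained.
loss1 = train(params, xs, ys, trainable=["w_h"], lr=0.05, steps=200)
backbone_after_phase1 = (params["w_b"], params["b_b"])

# Phase 2: unfreeze everything and fine-tune with a 10x smaller learning rate.
loss2 = train(params, xs, ys, trainable=["w_h", "w_b", "b_b"], lr=0.005, steps=1000)
```

In this toy, the frozen feature cannot represent the target's intercept, so the head alone plateaus; unfreezing the backbone lets fine-tuning reduce the loss further. The smaller phase-2 learning rate is meant to keep updates to the pre-trained weights gentle.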

Domain adaptation architectures often include an additional component: the domain discriminator. One common approach is the Domain-Adversarial Neural Network (DANN) (Ganin & Lempitsky, 2015). In DANN, the feature extractor is shared between the source and target domains, and a gradient reversal layer (GRL) sits between the feature extractor and the domain discriminator. The GRL acts as the identity in the forward pass and multiplies gradients by a negative factor (-λ) in the backward pass. As a result, the discriminator learns to tell the domains apart while the feature extractor learns features that confuse it, that is, domain-invariant features, which the task-specific layers then use to make predictions.
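The gradient reversal layer can be sketched without a deep learning framework by writing its forward and backward passes explicitly. The sketch below is illustrative: the class and function names are hypothetical, gradients are plain Python lists, and `dann_lambda` implements the warm-up schedule λ_p = 2 / (1 + exp(-γ·p)) - 1 with γ = 10 reported in the DANN work, where p is training progress in [0, 1].

```python
import math


class GradientReversal:
    """Identity in the forward pass; multiplies gradients by -lambda in the backward pass."""

    def __init__(self, lambd):
        self.lambd = lambd

    def forward(self, features):
        return list(features)

    def backward(self, grad_output):
        return [-self.lambd * g for g in grad_output]


def dann_lambda(progress, gamma=10.0):
    """Schedule that ramps lambda from 0 toward 1 as progress goes from 0 to 1."""
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


def feature_extractor_grad(grad_from_task_head, grad_from_discriminator, grl):
    # Task-loss gradients reach the extractor unchanged; discriminator gradients are
    # reversed, so the extractor is pushed to *increase* the domain-classification loss.
    reversed_domain = grl.backward(grad_from_discriminator)
    return [gt + gd for gt, gd in zip(grad_from_task_head, reversed_domain)]


grl = GradientReversal(lambd=dann_lambda(0.5))
combined = feature_extractor_grad([1.0, 1.0], [2.0, -4.0], grl)
```

Ramping λ up from 0 lets the task classifier learn useful features before the adversarial signal becomes strong.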

Transformer models offer another mechanism that adaptation methods can build on. Their attention mechanism calculates the relevance of each token in the input sequence to every other token, allowing the model to focus on the most relevant parts of the input, which is particularly useful in natural language processing (NLP). Some domain adaptation methods use attention to emphasize features or regions that transfer well between the source and target domains, which can help the model generalize to the new domain.
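The core attention computation, softmax(QKᵀ / √d)·V, can be sketched in plain Python. This minimal version assumes the query, key, and value vectors are already given; real transformers obtain them from learned linear projections of the token embeddings and use multiple heads, both of which are omitted here.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights): one output vector and one weight row per query."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every key to this query, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(w)
    return outputs, all_weights


# Self-attention over a toy 3-token sequence (no learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1, so every output is a convex combination of the value vectors.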

Key design decisions in transfer learning and domain adaptation include the choice of pre-trained model, the extent of fine-tuning, and the balance between domain invariance and task-specific performance. For example, using a larger pre-trained model like BERT (Devlin et al., 2018) can provide more expressive features, but may require more computational resources. Fine-tuning the entire model can lead to better performance but may also result in overfitting if the new dataset is small. Balancing these trade-offs is crucial for achieving good performance on the target task or domain.

Recent technical innovations in this area include the use of self-supervised learning for pre-training, which allows models to learn from large amounts of unlabeled data. For example, the SimCLR framework (Chen et al., 2020) uses contrastive learning to learn representations that are robust to various data augmentations. These self-supervised pre-trained models can then be fine-tuned on downstream tasks with much less labeled data; SimCLR, for instance, reported substantial improvements over previous self-supervised and semi-supervised methods on ImageNet at the time of publication.
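SimCLR's contrastive objective, the normalized temperature-scaled cross-entropy (NT-Xent) loss, can be sketched directly. The sketch assumes the embeddings are already computed: `views_a[i]` and `views_b[i]` are the embeddings of two augmentations of example i. Each of the 2N embeddings is an anchor whose positive is the other view of the same example, and all remaining embeddings act as negatives. The temperature value is illustrative.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent_loss(views_a, views_b, temperature=0.5):
    """Average NT-Xent loss over all 2N anchors."""
    z = views_a + views_b
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same example
        # Similarities to every embedding except the anchor itself.
        logits = [cosine(z[i], z[k]) / temperature for k in range(2 * n) if k != i]
        pos_idx = pos if pos < i else pos - 1  # shift because index i was skipped
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[pos_idx]  # -log softmax probability of the positive
    return total / (2 * n)


aligned = nt_xent_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = nt_xent_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is low when the two views of each example embed close together (`aligned`) and high when each view is closer to a different example's view (`swapped`).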

Advanced Techniques and Variations

Modern variations and improvements in transfer learning and domain adaptation include unsupervised domain adaptation, semi-supervised domain adaptation, and multi-source domain adaptation. Unsupervised domain adaptation (UDA) aims to adapt a model to a new domain without any labeled data in the target domain. One popular UDA method is Maximum Classifier Discrepancy (MCD) (Saito et al., 2017): two classifiers are trained to maximize their disagreement on target samples, while the feature extractor is trained to minimize it, which pushes target features away from the classifiers' decision boundaries and toward the region supported by source data. Another UDA method, the Conditional Domain Adversarial Network (CDAN) (Long et al., 2018), conditions its domain discriminator on the classifier's predictions, so that alignment takes class structure into account without requiring target labels. Semi-supervised domain adaptation (SSDA), in contrast, leverages a small amount of labeled data in the target domain to improve adaptation.
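The quantity MCD plays its classifiers and feature extractor against each other on can be sketched as the mean absolute difference between the two classifiers' predicted class probabilities on a batch of target samples. This is a minimal sketch of that discrepancy only; the function name is hypothetical, and the surrounding training loop (train on source, then let the classifiers maximize the discrepancy, then let the feature extractor minimize it) is described in comments rather than implemented.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classifier_discrepancy(logits_1, logits_2):
    """Mean absolute difference between two classifiers' class probabilities,
    averaged over classes and over the batch of target samples."""
    total = 0.0
    for l1, l2 in zip(logits_1, logits_2):
        p1, p2 = softmax(l1), softmax(l2)
        total += sum(abs(a - b) for a, b in zip(p1, p2)) / len(p1)
    return total / len(logits_1)


# MCD alternates, per batch:
#   (A) train feature extractor + both classifiers on labeled source data;
#   (B) freeze the extractor, train the classifiers to keep source accuracy
#       while *maximizing* classifier_discrepancy on target data;
#   (C) freeze the classifiers, train the extractor to *minimize* it.
agree = classifier_discrepancy([[0.0, 0.0]], [[0.0, 0.0]])
disagree = classifier_discrepancy([[0.0, 0.0]], [[math.log(3.0), 0.0]])
```

Here the second pair of classifiers predicts (0.5, 0.5) and (0.75, 0.25), giving a discrepancy of 0.25.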

Multi-source domain adaptation (MSDA) deals with the scenario where there are multiple source domains and the goal is to adapt to a single target domain. The Moment Matching for Multi-Source Domain Adaptation (M3SDA) (Peng et al., 2019) method aligns the moments of the feature distributions between each source domain and the target domain, and among the source domains themselves, so that the feature extractor produces domain-invariant features. These advanced techniques offer different trade-offs in terms of performance, computational requirements, and the amount of labeled data needed.
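A simplified moment distance in the spirit of M3SDA can be sketched as follows. For each moment order k (here 1 and 2, using element-wise raw moments E[x^k]), it averages the L2 gaps between each source domain and the target, plus the average gap over all pairs of source domains. Treat this as an illustration of the idea under these assumptions, not a reproduction of the paper's implementation; in training, this distance would be added to the task loss and minimized through the feature extractor.

```python
from itertools import combinations


def raw_moment(features, k):
    """Element-wise k-th raw moment E[x^k] of a list of feature vectors."""
    n = len(features)
    return [sum(f[c] ** k for f in features) / n for c in range(len(features[0]))]


def l2(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v)) ** 0.5


def moment_distance(sources, target, max_order=2):
    """Average source-target moment gaps plus average source-source gaps, summed over orders."""
    total = 0.0
    for k in range(1, max_order + 1):
        t_mom = raw_moment(target, k)
        s_moms = [raw_moment(s, k) for s in sources]
        total += sum(l2(m, t_mom) for m in s_moms) / len(s_moms)
        pairs = list(combinations(s_moms, 2))
        if pairs:
            total += sum(l2(a, b) for a, b in pairs) / len(pairs)
    return total


sources = [[[1.0], [3.0]], [[1.0], [3.0]]]  # two source domains, 1-D features
same = moment_distance(sources, [[1.0], [3.0]])
shifted = moment_distance(sources, [[2.0], [4.0]])
```

With the target shifted by +1, the first moments differ by 1 and the second moments by 5, so the distance is 6.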

Recent research developments in this area include the use of generative models for domain adaptation, such as Generative Adversarial Networks (GANs). For example, CycleGAN (Zhu et al., 2017), which performs unpaired image-to-image translation, can be used to translate labeled source images into the style of the target domain, producing synthetic target-like training data. Another recent trend is the use of meta-learning for domain adaptation, where the model learns to adapt to new domains with minimal data. The Model-Agnostic Meta-Learning (MAML) (Finn et al., 2017) framework, for instance, learns an initialization of the model parameters from which a few gradient steps suffice to adapt to a new task or domain.
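MAML's two-level update can be shown exactly on a one-parameter toy problem. The sketch assumes each task is a regression y = a·x with model y_hat = w·x, so the task loss is L_a(w) = m·(w - a)² with m the mean of x². Because the loss is quadratic, the full second-order meta-gradient (including the derivative of the inner step) can be written by hand. The function name and hyperparameters are illustrative.

```python
def maml_toy(task_slopes, xs, alpha=0.1, beta=0.1, meta_steps=200, w=0.0):
    """Exact second-order MAML for tasks y = a * x with model y_hat = w * x."""
    m = sum(x * x for x in xs) / len(xs)  # mean of x^2 sets the loss curvature
    for _ in range(meta_steps):
        meta_grad = 0.0
        for a in task_slopes:
            # Inner loop: one gradient step on L_a(w) = m * (w - a)^2.
            w_adapted = w - alpha * 2 * m * (w - a)
            # Chain rule: dL_a(w_adapted)/dw = L_a'(w_adapted) * d(w_adapted)/dw.
            meta_grad += 2 * m * (w_adapted - a) * (1 - 2 * alpha * m)
        # Outer loop: update the shared initialization.
        w -= beta * meta_grad / len(task_slopes)
    return w


w_init = maml_toy(task_slopes=[1.0, 3.0], xs=[1.0, -1.0])
```

For these two symmetric tasks the learned initialization settles at slope 2, midway between them, so a single inner step moves toward either task equally well. In general the meta-learned initialization need not be a simple average.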

Comparing different methods, unsupervised domain adaptation is generally more challenging than supervised or semi-supervised domain adaptation, as it requires the model to learn from unlabeled data. However, it is also more practical in many real-world scenarios where labeled data is scarce. Multi-source domain adaptation can be more effective than single-source domain adaptation, as it leverages the diversity of multiple source domains, but it also requires more complex models and training procedures.

Practical Applications and Use Cases

Transfer learning and domain adaptation are widely used in real-world applications, including computer vision, natural language processing, and speech recognition. For example, in computer vision, pre-trained models like VGG-16 and ResNet-50 are commonly used as backbones for tasks such as object detection, image segmentation, and face recognition. Google's TensorFlow Hub provides a wide range of pre-trained models that can be fine-tuned for specific tasks, making them easy for developers and researchers to use.

In natural language processing, pre-trained models like BERT and RoBERTa are fine-tuned for tasks such as sentiment analysis, text classification, and question answering. These models are pre-trained on large text corpora and can then be fine-tuned on specific tasks with relatively small amounts of labeled data. OpenAI's GPT-3 pushes the idea further: after large-scale pre-training, it can perform tasks such as translation and question answering from a handful of examples supplied in the prompt (few-shot, in-context learning), without task-specific gradient updates, and on some benchmarks this approach is competitive with fine-tuned models.

What makes transfer learning and domain adaptation suitable for these applications is their ability to leverage pre-existing knowledge, reducing the need for large amounts of labeled data and computational resources. In practice, these techniques have been shown to significantly improve the performance of machine learning models, especially in scenarios where labeled data is limited or expensive to obtain. For example, in medical imaging, transfer learning has been used to detect diseases from X-ray images, where labeled data is often scarce due to privacy and ethical concerns.

Technical Challenges and Limitations

Despite their advantages, transfer learning and domain adaptation face several technical challenges and limitations. One of the main challenges is the selection of the appropriate pre-trained model and the extent of fine-tuning. Choosing the wrong pre-trained model or fine-tuning too much can lead to poor performance on the target task. Additionally, the distribution shift between the source and target domains can be significant, making it difficult to adapt the model effectively. This is particularly challenging in unsupervised domain adaptation, where no labeled data is available in the target domain.

Computational requirements are another limitation, especially for large pre-trained models like BERT and GPT-3. Fine-tuning these models can be computationally expensive and may require specialized hardware such as GPUs or TPUs. Scalability is also a concern: the memory and compute needed for fine-tuning grow with model size, and more complex tasks may require more fine-tuning data. This can make it challenging to deploy these models in resource-constrained environments.
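A back-of-the-envelope calculation shows why model size dominates fine-tuning cost. The sketch assumes full fine-tuning in fp32 with the Adam optimizer, i.e. 16 bytes per parameter (4 for weights, 4 for gradients, 8 for Adam's two moment estimates), and ignores activations, mixed precision, and memory-saving techniques, so real figures will differ. Parameter counts are the commonly cited approximate sizes.

```python
def finetune_memory_gb(num_params, bytes_per_param=16):
    """Rough memory (GB) for weights + gradients + Adam state, excluding activations.
    16 bytes/param assumes fp32 weights (4) + fp32 gradients (4) + two fp32 Adam moments (8)."""
    return num_params * bytes_per_param / 1e9


bert_base = finetune_memory_gb(110e6)   # about 1.76 GB
bert_large = finetune_memory_gb(340e6)  # about 5.44 GB
gpt3 = finetune_memory_gb(175e9)        # about 2800 GB, far beyond a single accelerator
```

Memory grows linearly with parameter count, so the roughly 1,600-fold jump from BERT-base to GPT-3 turns a single-GPU job into one that must be sharded across many devices.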

Research directions addressing these challenges include the development of more efficient pre-training and fine-tuning methods, the use of lightweight models, and the exploration of unsupervised and semi-supervised learning techniques. For example, the DistilBERT (Sanh et al., 2019) model is a smaller, faster, and cheaper version of BERT, which can be fine-tuned with fewer computational resources. Another direction is the use of self-supervised learning, which allows models to learn from large amounts of unlabeled data, reducing the need for labeled data and computational resources.
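DistilBERT is trained by knowledge distillation: the smaller student learns to match the output distribution of the larger teacher. Its training objective combines a soft-target distillation term with a masked language modeling loss and a cosine embedding loss; the sketch below shows only a standard soft-target distillation term, written as a KL divergence between temperature-softened teacher and student distributions and scaled by T². The temperature value and function names are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2
    so gradient magnitudes stay comparable across temperatures."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s))
    return kl * temperature ** 2


matched = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mismatched = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])
```

A higher temperature flattens the teacher's distribution, exposing how it ranks the incorrect classes, which is the extra signal the student learns from.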

Future Developments and Research Directions

Emerging trends in transfer learning and domain adaptation include the integration of multimodal data, the use of reinforcement learning, and the development of more interpretable and explainable models. Multimodal learning, which combines data from multiple modalities (e.g., text, images, and audio), can provide richer and more robust representations, leading to better performance on complex tasks. Reinforcement learning could be used to learn policies that decide how to fine-tune or adapt a model, allowing it to adjust dynamically to new domains and tasks.

Active research directions in this area include the development of more efficient and scalable pre-training and fine-tuning methods, the exploration of zero-shot and few-shot learning, and the integration of domain adaptation with other machine learning paradigms like meta-learning and active learning. Zero-shot and few-shot learning aim to adapt models to new tasks with minimal or no labeled data, which is particularly important in scenarios where data is scarce or expensive to obtain. Meta-learning, on the other hand, focuses on learning how to learn, enabling the model to quickly adapt to new tasks with minimal data.

Potential breakthroughs on the horizon include more generalizable and robust models that can adapt to a wide range of tasks and domains, wider use of generative models for domain adaptation, and the integration of transfer learning and domain adaptation with other AI techniques such as graph neural networks. These advances could substantially improve the performance and applicability of machine learning models in real-world settings, from healthcare and finance to autonomous systems and robotics.

From an industry perspective, adoption of transfer learning and domain adaptation is expected to grow as more pre-trained models become available and the computational resources required for fine-tuning and adaptation become more accessible. From an academic perspective, the focus will likely be on developing more efficient and scalable methods and on the theoretical foundations of transfer learning and domain adaptation. Overall, these techniques are likely to remain central to how machine learning models are built and deployed.