Introduction and Context

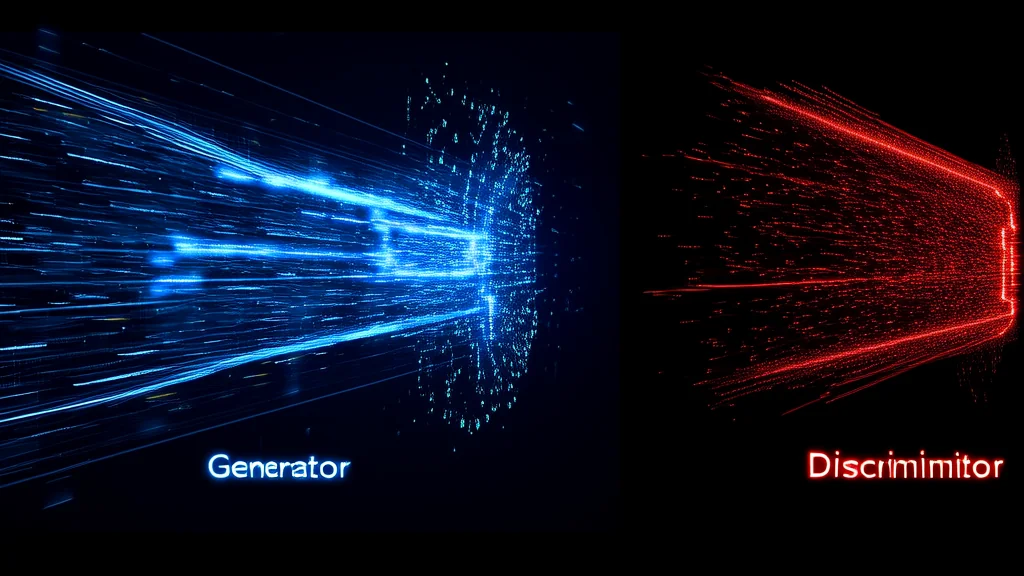

Generative Adversarial Networks (GANs) are a class of machine learning systems made of two neural networks, the generator and the discriminator, which are trained jointly in an adversarial process. The generator creates data instances. The discriminator evaluates them for authenticity by estimating the probability that a given instance came from the real data rather than from the generator. Ian Goodfellow and his colleagues introduced this framework in 2014, and it has since become a cornerstone of generative modeling.

The importance of GANs lies in their ability to generate highly realistic synthetic data, which can be used in a wide range of applications, from image synthesis and style transfer to data augmentation and even drug discovery. GANs have been particularly influential because they address the challenge of generating high-quality, diverse, and coherent data, which is a fundamental problem in many areas of AI. Key milestones in the development of GANs include the introduction of the original GAN architecture, followed by significant improvements such as the Wasserstein GAN (WGAN) and the Progressive Growing of GANs (PGGAN).

Core Concepts and Fundamentals

The fundamental principle behind GANs is the adversarial training process, where the generator and discriminator engage in a minimax game. The generator aims to produce data that the discriminator cannot distinguish from real data, while the discriminator tries to correctly classify the data as real or fake. This competitive dynamic drives both networks to improve over time, with the generator becoming better at producing realistic data and the discriminator becoming more adept at distinguishing between real and generated data.

Mathematically, the discriminator \(D\) is trained to maximize the log-probability of assigning the correct label to both real and generated samples. The generator \(G\) is trained to minimize that same quantity, which means making \(D\) fail on generated samples. This can be expressed as a minimax optimization problem:

\[ \min_G \max_D V(D, G) = \mathbb{E}_{x \sim P_{data}}[\log D(x)] + \mathbb{E}_{z \sim P_z}[\log(1 - D(G(z)))] \]

Here, \(P_{data}\) is the distribution of the real data, \(P_z\) is the prior on input noise variables, and \(E\) denotes the expectation. The generator \(G\) takes a random noise vector \(z\) and maps it to the data space, while the discriminator \(D\) outputs a scalar representing the probability that the input comes from the real data distribution.
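To make the objective concrete, the following minimal sketch estimates \(V(D, G)\) by Monte Carlo from samples. For illustration only, it assumes toy one-dimensional Gaussians: \(P_{data} = \mathcal{N}(0, 1)\), and a generator whose outputs follow \(\mathcal{N}(3, 1)\). The sketch compares two discriminators. The first cannot tell real from fake and always outputs 1/2, which gives exactly \(-\log 4\). The second is the optimal discriminator for a fixed generator, \(D^*(x) = p_{data}(x) / (p_{data}(x) + p_g(x))\), as derived in the original GAN paper, and it scores higher whenever the two distributions differ.

```python
import math
import random

def gauss_pdf(x, mu, sigma):
    """Density of the 1-D normal distribution N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def estimate_value(D, real_samples, fake_samples):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))]."""
    real_term = sum(math.log(D(x)) for x in real_samples) / len(real_samples)
    fake_term = sum(math.log(1.0 - D(x)) for x in fake_samples) / len(fake_samples)
    return real_term + fake_term

rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # x ~ P_data = N(0, 1)
fake = [rng.gauss(3.0, 1.0) for _ in range(2000)]  # G(z) ~ P_g = N(3, 1), assumed

def d_half(x):
    # A discriminator that cannot tell real from fake.
    return 0.5

def d_star(x):
    # Optimal discriminator for this fixed generator: p_data / (p_data + p_g).
    p, q = gauss_pdf(x, 0.0, 1.0), gauss_pdf(x, 3.0, 1.0)
    return p / (p + q)

v_half = estimate_value(d_half, real, fake)
v_star = estimate_value(d_star, real, fake)
print(f"V(D=1/2) = {v_half:.4f}   (-log 4 = {-math.log(4):.4f})")
print(f"V(D*)    = {v_star:.4f}")
```

In the original analysis, the gap between \(V(D^*, G)\) and \(-\log 4\) equals twice the Jensen-Shannon divergence between \(P_{data}\) and the generator's distribution. That gap closes only when the two distributions match.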

The core components of a GAN are the generator and the discriminator. The generator is typically a deep neural network that learns to map a random noise vector to a data point in the target domain. The discriminator, also a deep neural network, learns to classify data points as either real or generated. Each network is trained against the other, so a better discriminator gives the generator a more informative training signal. Ideally, this pushes the generator toward increasingly realistic data.

Variational Autoencoders (VAEs) and similar generative models maximize a lower bound on an explicit likelihood. GANs instead learn an implicit model: they never evaluate a likelihood and only draw samples from \(G\). This flexibility often yields sharper samples than VAEs produce. It also makes GANs more challenging to train, because they can suffer from issues like mode collapse and vanishing gradients.

Technical Architecture and Mechanics

The architecture of a GAN consists of two main components: the generator and the discriminator. The generator, \(G\), takes a random noise vector \(z\) as input and produces a synthetic data point \(G(z)\). The discriminator, \(D\), takes a data point \(x\) as input and outputs a scalar value representing the probability that \(x\) is from the real data distribution. The training process involves alternating between updating the generator and the discriminator.

Generator: The generator is typically a deep neural network, often built from transposed convolutions (sometimes called "deconvolutions"), that maps a random noise vector \(z\) to a data point in the target domain. In image generation, for example, the generator might take a 100-dimensional noise vector and output a 64x64 RGB image, increasing the spatial resolution layer by layer. Its architecture is designed to capture the complex structure of the data and typically combines fully connected, convolutional, and transposed-convolution layers.
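The arithmetic below checks how such a generator could grow a 100-dimensional noise vector into a 64x64 image. It is a sketch that assumes a DCGAN-style layout. First, \(z\) is treated as a 100 x 1 x 1 input, and a stride-1 layer with kernel size 4 projects it to 4x4. Then each stride-2 layer (kernel 4, padding 1) doubles the spatial size. The channel widths are hypothetical. The size formula is the standard one for transposed convolutions with dilation 1 and no output padding.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output height/width of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# Hypothetical DCGAN-style generator: 1 x 1 noise "map" -> 4 x 4 -> ... -> 64 x 64.
sizes = [conv_transpose_out(1, kernel=4, stride=1, padding=0)]
while sizes[-1] < 64:
    sizes.append(conv_transpose_out(sizes[-1], kernel=4, stride=2, padding=1))

channels = [512, 256, 128, 64, 3]  # hypothetical widths; the last layer emits RGB
for ch, s in zip(channels, sizes):
    print(f"{ch} x {s} x {s}")
```

With these assumed settings, the feature maps go 4, 8, 16, 32, 64. Four stride-2 layers after the initial projection therefore reach the 64x64 output.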

Discriminator: The discriminator is also a deep neural network, often a convolutional network, that takes a data point \(x\) as input and outputs a scalar value. This value represents the probability that \(x\) is from the real data distribution. The discriminator is trained to maximize the probability of assigning the correct label to both real and generated data points. The architecture of the discriminator is designed to effectively distinguish between real and generated data, and it often includes layers like convolutional, pooling, and fully connected layers.
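Many image discriminators, including DCGAN-style ones, replace pooling with strided convolutions that shrink the feature map. The sketch below assumes such a layout: stride-2 convolutions with kernel size 4 and padding 1 halve a 64x64 input down to 4x4. A final 4x4 convolution with no padding then collapses the map to a single logit, which a sigmoid turns into the probability that the input is real. The layer settings are illustrative assumptions, not a prescribed design.

```python
def conv_out(size, kernel, stride, padding):
    """Output height/width of a standard convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

# Hypothetical discriminator: 64 -> 32 -> 16 -> 8 -> 4, then a 4 x 4 conv to 1 x 1.
disc_sizes = [64]
while disc_sizes[-1] > 4:
    disc_sizes.append(conv_out(disc_sizes[-1], kernel=4, stride=2, padding=1))
disc_sizes.append(conv_out(disc_sizes[-1], kernel=4, stride=1, padding=0))
print(disc_sizes)
```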

Training Process: The training process for GANs is iterative and involves the following steps:

- Sample a batch of real data points \(x\) from the real data distribution \(P_{data}\).

- Sample a batch of random noise vectors \(z\) from the prior distribution \(P_z\).

- Generate a batch of synthetic data points \(G(z)\) using the generator.

- Update the discriminator by maximizing the objective function \(V(D, G)\), which involves increasing the probability of correctly classifying real data points and decreasing the probability of incorrectly classifying generated data points.

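- Update the generator by minimizing the objective function \(V(D, G)\). Only the second term depends on \(G\), so this amounts to raising \(D(G(z))\), the probability that the discriminator misclassifies generated data points as real. Early in training, \(D\) rejects generated samples easily, so \(\log(1 - D(G(z)))\) saturates and yields weak gradients. The original paper therefore suggests training \(G\) to maximize \(\log D(G(z))\) instead.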
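The steps above can be sketched as a training loop. To stay dependency-free, this toy uses hand-derived gradients and hypothetical two-parameter models: a generator \(G(z) = az + b\) and a logistic discriminator \(D(x) = \sigma(wx + c)\). Real data are assumed to be drawn from \(\mathcal{N}(4, 1)\). The generator step uses the non-saturating form, ascending \(\log D(G(z))\). A linear discriminator can only threshold on \(x\), so it tells the generator little about the spread of the data, and the two players can oscillate. Treat this as an illustration of the order of updates, not a recipe for convergence.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=500, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # hypothetical generator G(z) = a * z + b
    w, c = 0.1, 0.0  # hypothetical discriminator D(x) = sigmoid(w * x + c)
    history = []
    for _ in range(steps):
        # Sample real data, sample noise, and generate fakes.
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * zi + b for zi in z]

        # Discriminator: gradient ascent on mean of log D(x) + log(1 - D(G(z))).
        gw = gc = 0.0
        for x in real:
            g = 1.0 - sigmoid(w * x + c)  # d/ds log sigmoid(s)
            gw += g * x
            gc += g
        for x in fake:
            g = -sigmoid(w * x + c)  # d/ds log(1 - sigmoid(s))
            gw += g * x
            gc += g
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator: gradient ascent on mean of log D(G(z)) (non-saturating form).
        ga = gb = 0.0
        for zi, x in zip(z, fake):
            g = (1.0 - sigmoid(w * x + c)) * w  # d/dx log sigmoid(w * x + c)
            ga += g * zi  # chain rule: dx/da = z
            gb += g       # chain rule: dx/db = 1
        a += lr * ga / batch
        b += lr * gb / batch
        history.append(b)  # track the generator's mean output
    return (a, b, w, c), history

params, history = train_toy_gan()
print("a, b, w, c =", [round(p, 3) for p in params])
```

In practice, an automatic-differentiation framework would compute these gradients, and an optimizer such as Adam would usually replace plain gradient steps.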

Key Design Decisions: Designing a GAN involves several key decisions: the loss function, the architecture of the generator and discriminator, and the training strategy. The original GAN objective corresponds to a binary cross-entropy loss on the discriminator's real-versus-fake predictions. Subsequent work has explored alternatives such as the Wasserstein distance, which can lead to more stable training. Architecture also matters, and deeper, higher-capacity networks often produce better samples. Training strategies can also affect stability and the quality of the generated data, for example using different learning rates for the generator and discriminator, as in the two time-scale update rule (TTUR).

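Technical Innovations and Breakthroughs: One of the most significant breakthroughs in GAN research was the Wasserstein GAN (WGAN), introduced in 2017. WGAN replaces the Jensen-Shannon divergence implicit in the original objective with the Earth Mover's distance (Wasserstein-1 metric). When the real and generated distributions do not overlap, as is common early in training, the Jensen-Shannon divergence is stuck at \(\log 2\) and provides no useful gradient. The Wasserstein-1 distance, by contrast, keeps decreasing as the generated distribution moves toward the real one, which gives more stable and meaningful gradients. The original WGAN enforces the Lipschitz constraint its critic (discriminator) requires by clipping the critic's weights. These changes made GANs easier to train and led to higher-quality generated data. Another important development is the Progressive Growing of GANs (PGGAN), which trains the generator and discriminator progressively, starting with low-resolution images and gradually increasing the resolution. This approach has been shown to produce highly detailed and realistic images.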
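The contrast between the two distances can be checked on a standard toy case. Place the real data as a point mass at 0 and the generated data as a point mass at \(\theta\), both on a small grid. The sketch below computes the Jensen-Shannon divergence in nats, which stays at \(\log 2\) for every \(\theta \neq 0\), and the Wasserstein-1 distance, which equals \(|\theta|\). The 1-D W1 shortcut pairs sorted samples and assumes both samples have the same size.

```python
import math

def kl_divergence(a, b):
    """KL(a || b) in nats for discrete distributions; terms with a_i = 0 contribute 0."""
    return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence in nats."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

def wasserstein1_1d(xs, ys):
    """W1 between two equal-size 1-D empirical samples: pair the sorted points."""
    if len(xs) != len(ys):
        raise ValueError("this shortcut needs samples of equal size")
    return sum(abs(x - y) for x, y in zip(sorted(xs), sorted(ys))) / len(xs)

# Real data: point mass at 0. Generated data: point mass at theta.
grid = range(10)
results = []
for theta in (1, 3, 9):
    p = [1.0 if i == 0 else 0.0 for i in grid]
    q = [1.0 if i == theta else 0.0 for i in grid]
    js = js_divergence(p, q)
    w1 = wasserstein1_1d([0.0], [float(theta)])
    results.append((theta, js, w1))
    print(f"theta={theta}: JS={js:.4f}  W1={w1:.1f}")
```

The JS column stays the same whether the generated mass is one step away or nine. Its gradient with respect to \(\theta\) is therefore zero, while W1 still indicates which direction improves the generator.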

Advanced Techniques and Variations

Since the introduction of GANs, numerous variations and improvements have been proposed to address the challenges and limitations of the original architecture. Some of the most notable advancements include:

- StyleGAN: Introduced by NVIDIA researchers in 2018, StyleGAN is a GAN architecture that generates high-quality, diverse, and highly detailed images. It does not feed the noise vector directly into the image-producing network. Instead, a mapping network transforms \(z\) into an intermediate latent code \(w\), which modulates each layer of the synthesis network through adaptive instance normalization (AdaIN), and per-layer noise inputs add stochastic detail. Because coarse and fine layers receive these styles separately, StyleGAN offers separate control over coarse attributes (for example, pose) and fine details. The approach has been particularly successful in generating realistic and diverse human faces, landscapes, and other complex scenes.

- CycleGAN: CycleGAN is a variant of GANs that is designed for image-to-image translation tasks, where the goal is to learn a mapping from one domain to another without paired training data. For example, CycleGAN can be used to translate images of horses to images of zebras or to convert photos taken in summer to those taken in winter. CycleGAN uses two generators and two discriminators, and it enforces cycle consistency, ensuring that the translated images can be mapped back to the original domain. This approach has been widely used in various applications, including style transfer, image editing, and domain adaptation.

- Conditional GANs (cGANs): Conditional GANs extend the basic GAN framework by conditioning the generation process on additional information, such as class labels or other attributes. This allows for more controlled and targeted generation. For example, a cGAN can be trained to generate images of specific objects, such as cars or dogs, based on the provided class labels. cGANs have been used in a variety of applications, including image synthesis, text-to-image generation, and data augmentation.
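The cycle-consistency idea behind CycleGAN can be written as an L1 reconstruction penalty. Mapping \(x\) through \(G\) and back through \(F\) should return \(x\), and likewise for \(y\). In the full CycleGAN objective, this term is added, weighted by a hyperparameter \(\lambda\), to the two adversarial losses. The minimal sketch below assumes toy "images" stored as flat lists of pixel values. The mappings `G`, `F_inverse`, and `F_wrong` are hypothetical stand-ins for trained networks.

```python
def mean_abs_error(a, b):
    """Mean absolute (L1) difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(G, F, xs, ys):
    """L1 cycle loss: F(G(x)) should reconstruct x, and G(F(y)) should reconstruct y."""
    forward = sum(mean_abs_error(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(mean_abs_error(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward

def G(img):  # hypothetical mapping X -> Y
    return [2.0 * v + 1.0 for v in img]

def F_inverse(img):  # hypothetical mapping Y -> X that exactly inverts G
    return [(v - 1.0) / 2.0 for v in img]

def F_wrong(img):  # hypothetical mapping Y -> X that ignores G's offset
    return [v / 2.0 for v in img]

xs = [[0.0, 0.5], [1.0, 0.25]]  # toy samples from domain X
ys = [[2.0, 4.0]]               # toy samples from domain Y
loss_good = cycle_consistency_loss(G, F_inverse, xs, ys)
loss_bad = cycle_consistency_loss(G, F_wrong, xs, ys)
print(loss_good, loss_bad)
```

A pair of mappings that invert each other incurs zero cycle loss. This is what lets CycleGAN learn from unpaired data: the adversarial losses make translated images look like the target domain, and the cycle term discourages the translation from discarding the content of the input.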

These advanced techniques and variations have significantly expanded the capabilities of GANs, making them more versatile and applicable to a wider range of tasks. However, each approach comes with its own trade-offs. StyleGAN produces high-quality, diverse images but needs substantial computational resources to train. CycleGAN works well for translations that mainly change color and texture, such as horses to zebras, but tends to struggle when the mapping requires large geometric changes. cGANs offer more control over the generation process but require labeled data, which can be difficult to obtain in some cases.

Recent research developments in GANs have focused on improving the stability and efficiency of the training process, as well as enhancing the quality and diversity of the generated data. For example, techniques like self-attention mechanisms, spectral normalization, and gradient penalty have been proposed to stabilize the training of GANs. Additionally, there is ongoing work on developing more efficient and scalable GAN architectures, such as lightweight GANs and GANs that can be trained with limited data.
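Spectral normalization, one of the stabilization techniques listed above, divides each discriminator weight matrix by its largest singular value. This bounds the Lipschitz constant of each linear layer. The largest singular value is usually estimated with power iteration. In the spectral-normalization GAN work, one iteration per training step is applied to a persisted vector. For clarity, this sketch instead runs several iterations on a single fixed, hypothetical matrix.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in W]

def transpose(W):
    return [list(col) for col in zip(*W)]

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def spectral_norm(W, iters=20):
    """Estimate the largest singular value of W by power iteration."""
    Wt = transpose(W)
    v = normalize([1.0] * len(W[0]))
    for _ in range(iters):
        u = normalize(matvec(W, v))   # left singular vector estimate
        v = normalize(matvec(Wt, u))  # right singular vector estimate
    Wv = matvec(W, v)
    u = normalize(Wv)
    return sum(ui * x for ui, x in zip(u, Wv))  # sigma ~= u^T W v

W = [[3.0, 0.0], [0.0, 1.0]]  # hypothetical weights with singular values 3 and 1
sigma = spectral_norm(W)
W_sn = [[wij / sigma for wij in row] for row in W]  # normalized weights
print(round(sigma, 6), round(spectral_norm(W_sn), 6))
```

After normalization, the largest singular value of the weight matrix is 1.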

Practical Applications and Use Cases

GANs have found a wide range of practical applications across various domains, including computer vision, natural language processing, and healthcare. In computer vision, GANs are used for image synthesis, style transfer, and data augmentation. For example, StyleGAN is used to generate highly realistic and diverse human faces, which can be used in applications like digital avatars, virtual reality, and video games. CycleGAN is used for image-to-image translation tasks, such as converting satellite images to maps or translating images from one artistic style to another.

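At the intersection of natural language processing and computer vision, GANs have been used for text-to-image generation, where the goal is to generate images from textual descriptions. StackGAN, for instance, generates images from text in two stages: it first produces a low-resolution image and then refines it at a higher resolution. This has applications in areas like e-commerce, where product images could be generated from textual descriptions. GANs have also been applied to text generation, where the generator tries to produce text that resembles human-written text. Text consists of discrete tokens, so gradients cannot flow directly from the discriminator to the generator. Approaches such as SeqGAN therefore rely on reinforcement-learning techniques, and GAN-based text generation has proven harder than GAN-based image generation. Potential applications include chatbots, content generation, and automated writing.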

In healthcare, GANs are used for medical image synthesis, data augmentation, and drug discovery. For example, GANs can be used to generate synthetic medical images, which can be used to augment training datasets and improve the performance of medical imaging systems. GANs have also been used to generate molecular structures for drug discovery, where the goal is to design new drugs with specific properties. This has the potential to accelerate the drug discovery process and reduce the cost and time required to develop new drugs.

GANs are suitable for these applications because they can generate high-quality, diverse, and coherent data, which is essential for many real-world tasks. The ability of GANs to learn complex data distributions and generate realistic data makes them a powerful tool for a wide range of applications. However, the performance of GANs in practice depends on factors such as the quality of the training data, the architecture of the generator and discriminator, and the training strategy. Careful design and tuning are often required to achieve optimal results.

Technical Challenges and Limitations

Despite their success, GANs face several technical challenges and limitations that can make them difficult to train and use in practice. One of the main challenges is the instability of the training process. GANs can suffer from mode collapse, where the generator produces only a limited set of outputs. They can also suffer from vanishing gradients, which typically arise when the discriminator becomes too confident: the gradients reaching the generator then become too small to provide useful updates. These issues can lead to poor quality and a lack of diversity in the generated data.
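One source of vanishing gradients is visible directly in the minimax objective. Let \(s\) be the discriminator's logit on a generated sample, so \(D(G(z)) = \sigma(s)\). The generator's term \(\log(1 - \sigma(s))\) has a gradient of magnitude \(\sigma(s)\) with respect to \(s\), which goes to zero when the discriminator confidently rejects the sample. The alternative proposed in the original GAN paper, maximizing \(\log \sigma(s)\), has a gradient of magnitude \(1 - \sigma(s)\), which stays close to 1 in that regime. The sketch below compares the two at a few logit values chosen for illustration.

```python
import math

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def generator_grad_magnitudes(logit):
    """Gradient magnitudes w.r.t. the discriminator logit s on a generated sample."""
    d = sigmoid(logit)
    saturating = d            # |d/ds log(1 - sigmoid(s))| = sigmoid(s)
    non_saturating = 1.0 - d  # |d/ds log sigmoid(s)|     = 1 - sigmoid(s)
    return saturating, non_saturating

results = {}
for s in (0.0, -5.0, -10.0):
    sat, ns = generator_grad_magnitudes(s)
    results[s] = (sat, ns)
    print(f"D(G(z)) = {sigmoid(s):.1e}   saturating: {sat:.1e}   non-saturating: {ns:.4f}")
```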

Another challenge is the computational requirements of GANs. Training GANs, especially large and complex models like StyleGAN, can be computationally expensive and time-consuming. This limits their applicability in resource-constrained environments and makes it difficult to scale GANs to larger and more complex datasets. Additionally, GANs often require large amounts of high-quality training data, which can be difficult to obtain in some domains.

Scalability is also a significant issue for GANs. As the size and complexity of the data increase, the training process becomes more challenging, and the risk of overfitting and mode collapse increases. This limits the ability of GANs to handle large and diverse datasets, which is a common requirement in many real-world applications. Research directions addressing these challenges include developing more stable and efficient training algorithms, designing more scalable GAN architectures, and exploring methods for reducing the computational and data requirements of GANs.

Another limitation of GANs is the difficulty of evaluating the quality and diversity of the generated data. Standard metrics such as the Inception Score and the Fréchet Inception Distance (FID), both computed with a pretrained Inception network, are widely used to evaluate GANs. They have known limitations, however, and may not give a complete picture of the quality and diversity of the generated data. There is ongoing work on developing more robust and comprehensive evaluation metrics for GANs.
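FID fits a Gaussian to the Inception features of real and generated images and measures the Fréchet distance between the two fits: \(\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}(\Sigma_r + \Sigma_g - 2(\Sigma_r \Sigma_g)^{1/2})\). The real metric uses full covariance matrices of 2048-dimensional Inception-v3 features and a matrix square root. This dependency-free sketch simplifies by assuming diagonal covariances, for which the trace term reduces to a per-dimension sum. The tiny two-dimensional "feature" vectors are hypothetical.

```python
import math

def mean_and_var(features):
    """Per-dimension mean and unbiased variance of a list of feature vectors."""
    n, dims = len(features), len(features[0])
    mu = [sum(f[d] for f in features) / n for d in range(dims)]
    var = [sum((f[d] - mu[d]) ** 2 for f in features) / (n - 1) for d in range(dims)]
    return mu, var

def frechet_distance_diag(mu1, var1, mu2, var2):
    """Frechet distance between Gaussians with diagonal covariances (a simplification of FID)."""
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum(v1 + v2 - 2.0 * math.sqrt(v1 * v2) for v1, v2 in zip(var1, var2))
    return mean_term + cov_term

real_feats = [[0.0, 0.0], [2.0, 2.0]]  # hypothetical features of real images
fake_feats = [[1.0, 0.0], [3.0, 2.0]]  # hypothetical features of generated images
mu_r, var_r = mean_and_var(real_feats)
mu_g, var_g = mean_and_var(fake_feats)
fd_same = frechet_distance_diag(mu_r, var_r, mu_r, var_r)
fd = frechet_distance_diag(mu_r, var_r, mu_g, var_g)
print(fd_same, fd)
```

Lower is better. Identical feature statistics give 0, and here a shift of 1 in one feature mean gives 1.0. Because FID summarizes each set with only a mean and a covariance, it can miss differences that those statistics do not capture, which is one of the limitations noted above.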

Future Developments and Research Directions

The future of GANs is likely to see continued advancements in both the theoretical understanding and practical applications of the technology. Emerging trends in GAN research include the development of more stable and efficient training algorithms, the design of more scalable and flexible GAN architectures, and the exploration of new applications and domains. For example, there is growing interest in using GANs for multimodal data generation, where the goal is to generate data that combines multiple modalities, such as images and text. This has the potential to enable new applications in areas like multimedia content generation and cross-modal retrieval.

Active research directions in GANs include the development of more robust and interpretable GAN architectures, the exploration of unsupervised and semi-supervised learning with GANs, and the integration of GANs with other machine learning techniques, such as reinforcement learning and meta-learning. These efforts aim to address the current limitations of GANs and expand their applicability to a wider range of tasks and domains.

Potential breakthroughs on the horizon include the development of GANs that can generate data with even higher quality and diversity, the creation of more efficient and scalable GAN architectures, and the application of GANs to new and emerging domains, such as robotics, autonomous driving, and personalized medicine. The evolution of GANs is likely to be driven by a combination of theoretical advances, empirical discoveries, and practical innovations, with both industry and academia playing a key role in shaping the future of this exciting and rapidly evolving field.