Introduction and Context

Transfer learning and domain adaptation are key techniques in machine learning that enable the reuse of pre-trained models for new tasks or domains. Transfer learning involves taking a model trained on one task and applying it to a different but related task, while domain adaptation focuses on adapting a model to perform well in a new domain with different data characteristics. These techniques are crucial because they can significantly reduce the need for large amounts of labeled data and computational resources, making machine learning more accessible and efficient.

The importance of transfer learning and domain adaptation has grown with the complexity of machine learning models and the volume of data. Transfer learning emerged as a research topic in the 1990s, under names such as "learning to learn," and advanced significantly in the 2010s with the rise of deep learning. Domain adaptation gained prominence in the 2000s as researchers sought to deploy models in real-world scenarios where data distributions often differ from the training data. Together, these techniques address data scarcity and distribution shift, helping models generalize to diverse and challenging environments.

Core Concepts and Fundamentals

The fundamental principle behind transfer learning is that features learned for one task can benefit another, related task. For example, a convolutional neural network trained to recognize objects in images learns low-level features such as edges and textures, which are useful for a wide range of image-related tasks. In domain adaptation, the goal is to adjust the model to a change in the data distribution between the source (training) and target (testing) domains. A common strategy is to align the feature distributions of the two domains so that a model trained on source features also generalizes to target features.

Key mathematical concepts in these areas include feature representation, distance metrics, and optimization. Feature representation involves extracting meaningful features from the input data that can be shared across tasks. Distance metrics, such as the Maximum Mean Discrepancy (MMD), measure the difference between feature distributions. Optimization techniques such as gradient descent then minimize an objective that typically combines the task loss with a discrepancy term between the source and target domains. Together, these concepts let the model leverage its existing knowledge while adapting to new conditions.
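
To make the MMD idea concrete, here is a minimal pure-Python sketch of the biased empirical estimate MMD² = mean k(s, s') + mean k(t, t') − 2 · mean k(s, t), assuming a Gaussian (RBF) kernel with a hand-picked bandwidth `sigma`. The function names and toy points are illustrative; practical implementations vectorize this computation and often combine several bandwidths.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def gaussian_kernel(x: Vector, y: Vector, sigma: float = 1.0) -> float:
    """RBF kernel k(x, y) = exp(-||x - y||^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mean_kernel(xs: List[Vector], ys: List[Vector], sigma: float) -> float:
    """Average kernel value over all pairs (x, y) with x in xs and y in ys."""
    return sum(gaussian_kernel(x, y, sigma) for x in xs for y in ys) / (len(xs) * len(ys))


def mmd_squared(source: List[Vector], target: List[Vector], sigma: float = 1.0) -> float:
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    return (mean_kernel(source, source, sigma)
            + mean_kernel(target, target, sigma)
            - 2.0 * mean_kernel(source, target, sigma))


source = [[0.0, 0.0], [0.5, 0.2], [0.1, 0.4]]
close_target = [[0.2, 0.1], [0.6, 0.3], [0.3, 0.5]]   # slightly shifted
far_target = [[3.0, 3.0], [3.5, 3.2], [3.1, 3.4]]     # strongly shifted
print(mmd_squared(source, close_target), mmd_squared(source, far_target))
```

The estimate grows with the shift between the samples, which is what makes it usable as a penalty to minimize during adaptation.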

Core components of transfer learning and domain adaptation include the pre-trained model, the source and target datasets, and the adaptation algorithm. The pre-trained model serves as the starting point, providing a rich set of learned features. The source dataset is the data used to train the initial model, while the target dataset is the new data to which the model must adapt. The adaptation algorithm adjusts the model to the new task or domain, using techniques such as fine-tuning, feature alignment, and sample reweighting.
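
Sample reweighting can be illustrated with importance weights w(x) = p_target(x) / p_source(x): weighting source examples by w makes averages over source data estimate averages over the target distribution. The sketch below assumes both densities are known Gaussians (source N(0, 1), target N(1, 1)) so that the ratio has a closed form; in practice the ratio has to be estimated, and the weights would multiply per-example training losses rather than the raw inputs averaged here for an easy check.

```python
import math
import random
from typing import List


def density_ratio(x: float) -> float:
    """w(x) = p_target(x) / p_source(x) for source N(0, 1) and target N(1, 1).

    For these two Gaussians the ratio simplifies to exp(x - 0.5).
    In real problems this ratio is unknown and must be estimated.
    """
    return math.exp(x - 0.5)


def weighted_mean(values: List[float], weights: List[float]) -> float:
    """Self-normalized importance-weighted average."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


rng = random.Random(0)
source_x = [rng.gauss(0.0, 1.0) for _ in range(20000)]
weights = [density_ratio(x) for x in source_x]

plain_mean = sum(source_x) / len(source_x)           # estimates the source mean (about 0)
reweighted_mean = weighted_mean(source_x, weights)   # estimates the target mean (about 1)
print(round(plain_mean, 2), round(reweighted_mean, 2))
```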

Transfer learning and domain adaptation differ from traditional supervised learning in that they do not require extensive labeled data for the new task or domain. Instead, they leverage the knowledge from the pre-trained model and adapt it to the new context. This makes them particularly useful in scenarios where labeled data is scarce or expensive to obtain. Analogously, transfer learning can be thought of as a student who has already learned a lot of general knowledge and can quickly pick up new, related subjects, while domain adaptation is like adjusting to a new environment by understanding the local customs and norms.

Technical Architecture and Mechanics

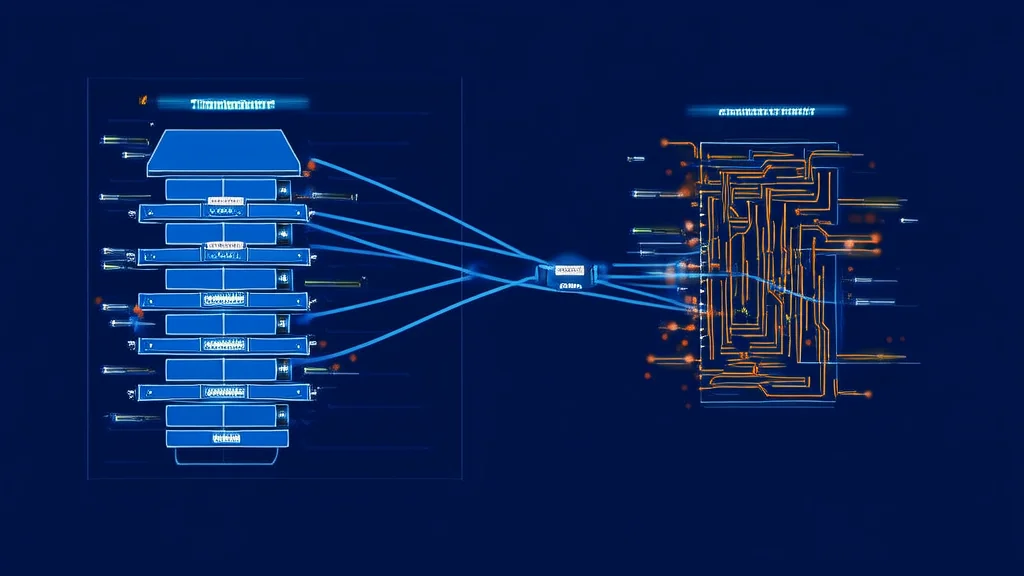

The technical architecture of transfer learning and domain adaptation involves several key steps and components. In transfer learning, the process typically starts with a pre-trained model, which is then fine-tuned on the new task. For example, a pre-trained transformer has already learned, through its attention mechanism, which parts of an input sequence are relevant to one another, and fine-tuning reuses that knowledge instead of learning it from scratch. The model is adapted by updating its parameters (all of them, or only a subset such as a new output layer) on a comparatively small amount of labeled data from the new task, using backpropagation and gradient descent to minimize a task-specific loss function.
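
A minimal sketch of reusing frozen features and training only a new output layer (often called linear probing, the lightest form of fine-tuning) with gradient descent on a handful of labeled examples. `pretrained_features` is a hypothetical stand-in for a real pre-trained network, and the learning rate and epoch count are illustrative; full fine-tuning would also backpropagate into the backbone's parameters.

```python
import math
from typing import List, Sequence, Tuple


def pretrained_features(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a frozen pre-trained backbone (never updated below)."""
    return [x[0], x[1], x[0] * x[1], 1.0]  # the constant 1.0 acts as a bias feature


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data: List[Tuple[Sequence[float], int]],
               lr: float = 0.5, epochs: int = 200) -> List[float]:
    """Fit a new logistic-regression head on frozen features by full-batch gradient descent."""
    feats = [(pretrained_features(x), y) for x, y in data]
    w = [0.0] * len(feats[0][0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for f, y in feats:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            for i, fi in enumerate(f):
                grad[i] += (p - y) * fi / len(feats)  # gradient of the mean cross-entropy
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def predict(w: List[float], x: Sequence[float]) -> int:
    score = sum(wi * fi for wi, fi in zip(w, pretrained_features(x)))
    return int(score > 0.0)


labeled_target = [
    ((1.0, 1.0), 1), ((-1.0, -1.0), 1), ((2.0, 0.5), 1),
    ((1.0, -1.0), 0), ((-1.0, 1.0), 0), ((-0.5, 2.0), 0),
]
head = train_head(labeled_target)
print([predict(head, x) for x, _ in labeled_target])
```

Because the frozen features already contain the product `x[0] * x[1]`, six labeled examples are enough for the linear head to separate the classes; this is the sense in which reused features reduce the need for labeled data.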

In domain adaptation, the architecture includes additional components to handle the distribution shift between the source and target domains. One common approach uses a domain discriminator, a separate classifier that tries to distinguish source features from target features. The feature extractor is trained to fool the discriminator, which pushes the feature distributions of the two domains together. This adversarial scheme, known as domain-adversarial training (the resulting model is usually called a Domain-Adversarial Neural Network, or DANN), was introduced by Yaroslav Ganin and Victor Lempitsky in their 2015 paper "Unsupervised Domain Adaptation by Backpropagation," which implements it with a gradient reversal layer between the feature extractor and the discriminator. Another approach is domain-invariant feature extraction, where the model learns features that are robust to domain-specific variations. Discrepancy measures such as Maximum Mean Discrepancy (MMD) and Correlation Alignment (CORAL) are minimized to reduce the distance between the source and target feature distributions.

A step-by-step process for domain adaptation might look like this:

- Pre-train a model on the source domain data.

- Extract features from both the source and target domain data using the pre-trained model.

- Use a domain discriminator to classify whether the features come from the source or target domain.

- Train the feature extractor and task classifier to minimize the task-specific loss on labeled source data while maximizing the domain discriminator's loss, so that source and target features become hard to tell apart.

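- Iterate until training converges, checking target-domain performance on a labeled validation set if one is available (in unsupervised domain adaptation, target labels are usually scarce or absent).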
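
The steps above can be sketched in a deliberately tiny setting: scalar inputs, a linear feature extractor, and logistic label and domain heads with hand-derived gradients. This is a toy illustration of the gradient-reversal idea behind DANN, not Ganin and Lempitsky's implementation; it trains all parts jointly from scratch instead of starting from a separately pre-trained model, and the data and hyperparameters (`lam`, `lr`, `epochs`) are assumptions. Real implementations rely on automatic differentiation, with the reversal implemented as a layer that is the identity in the forward pass and multiplies gradients by −λ in the backward pass.

```python
import math
import random
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def reversed_grad(grad: float, lam: float) -> float:
    """Gradient reversal: identity forward, multiply the gradient by -lam backward."""
    return -lam * grad


def train_dann(source: List[Tuple[float, int]], target: List[float],
               lam: float = 0.5, lr: float = 0.1, epochs: int = 200,
               seed: int = 0) -> Dict[str, float]:
    """Toy DANN on scalar inputs with hand-derived gradients.

    Feature extractor: h = a * x + b
    Label predictor:   p_y = sigmoid(c * h + d), trained on labeled source data
    Domain classifier: p_d = sigmoid(e * h + g), domain label 0 = source, 1 = target
    """
    rng = random.Random(seed)
    a, b = rng.uniform(0.5, 1.5), 0.0
    c = d = e = g = 0.0
    domain_data = [(x, 0) for x, _ in source] + [(x, 1) for x in target]
    n, m = len(source), len(domain_data)

    for _ in range(epochs):
        # Mean cross-entropy label loss on source data: dL/dlogit = p - y.
        ga_y = gb_y = gc = gd = 0.0
        for x, y in source:
            h = a * x + b
            err = sigmoid(c * h + d) - y
            gc += err * h / n
            gd += err / n
            ga_y += err * c * x / n
            gb_y += err * c / n

        # Mean cross-entropy domain loss on source + target data.
        ga_d = gb_d = ge = gg = 0.0
        for x, dom in domain_data:
            h = a * x + b
            err = sigmoid(e * h + g) - dom
            ge += err * h / m
            gg += err / m
            ga_d += err * e * x / m
            gb_d += err * e / m

        # Both heads descend their own losses.
        c, d = c - lr * gc, d - lr * gd
        e, g = e - lr * ge, g - lr * gg
        # The extractor descends the label loss and, via the reversed gradient,
        # ascends the domain loss.
        a -= lr * (ga_y + reversed_grad(ga_d, lam))
        b -= lr * (gb_y + reversed_grad(gb_d, lam))

    return {"a": a, "b": b, "c": c, "d": d, "e": e, "g": g}


source = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
target = [-1.0, 0.0, 2.0, 3.0]  # the same inputs shifted by +1, without labels
params = train_dann(source, target)
print({k: round(v, 3) for k, v in params.items()})
```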

Key design decisions in these architectures include the choice of pre-trained model, the type of adaptation algorithm, and the balance between task-specific and domain-invariant learning. For instance, a powerful pre-trained model like BERT provides a strong foundation for natural language processing tasks, but it still requires careful fine-tuning to avoid overfitting. The choice of adaptation algorithm, such as adversarial training (DANN) or MMD-based alignment, depends on the characteristics of the source and target domains. Balancing task-specific and domain-invariant learning is crucial: too much emphasis on domain invariance can erase features the task needs, while too little leaves the distribution shift unaddressed.
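
One common way to manage this balance is to ramp up the weight λ on the adversarial term as training progresses. The function below implements the schedule λ_p = 2 / (1 + exp(−γ·p)) − 1 proposed in Ganin and Lempitsky's paper, with training progress p in [0, 1] and γ = 10 as in that paper; whether it suits a given problem is an assumption to check on validation data.

```python
import math


def adaptation_weight(progress: float, gamma: float = 10.0) -> float:
    """DANN-style schedule: lambda_p = 2 / (1 + exp(-gamma * p)) - 1, for p in [0, 1]."""
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


total_steps = 1000
for step in (0, 100, 500, 1000):
    # lambda starts at 0, so early updates are driven by the task loss alone
    print(step, round(adaptation_weight(step / total_steps), 3))
```

Starting λ at 0 and letting it approach 1 reduces the influence of the domain classifier's noisy signal early in training, which is the motivation the DANN authors give for this schedule.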

Recent technical innovations in transfer learning and domain adaptation include self-supervised pre-training, which lets models learn from large, unlabeled datasets. For example, SimCLR learns representations with a contrastive loss that pulls together two augmented views of the same image and pushes apart views of different images, while BYOL learns by predicting one view's representation from another without using negative pairs. In domain adaptation, techniques such as conditional domain adaptation, which aligns feature distributions conditioned on the classifier's predictions rather than only the marginal distributions, and multi-source domain adaptation, which draws on several source domains at once, have been developed for more complex scenarios.
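
SimCLR-style contrastive pre-training is typically driven by the NT-Xent (normalized temperature-scaled cross-entropy) loss. The pure-Python sketch below computes it for a tiny batch of hypothetical 2-d embeddings: `view_a[i]` and `view_b[i]` stand for the embeddings of two augmentations of example i, and the temperature 0.5 is illustrative. Real implementations compute this with batched tensor operations on the outputs of an encoder and projection head; BYOL does not use this loss.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(view_a: List[Sequence[float]], view_b: List[Sequence[float]],
            temperature: float = 0.5) -> float:
    """NT-Xent loss where view_a[i] and view_b[i] embed two augmentations of example i."""
    z = list(view_a) + list(view_b)
    n = len(view_a)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same example
        numerator = math.exp(cosine(z[i], z[positive]) / temperature)
        # Denominator: every embedding in the batch except the anchor itself.
        denominator = sum(math.exp(cosine(z[i], z[k]) / temperature)
                          for k in range(2 * n) if k != i)
        total += -math.log(numerator / denominator)
    return total / (2 * n)


anchors = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]      # each second view resembles its own anchor
mismatched = [[0.1, 0.9], [0.9, 0.1]]   # second views are swapped between examples
print(nt_xent(anchors, matched), nt_xent(anchors, mismatched))
```

The loss is lower when each example's two views agree, which is the signal that shapes the representation without labels.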

Advanced Techniques and Variations

Modern variations of transfer learning and domain adaptation address a wide range of challenges. One such variation is Few-Shot Learning, where the model is adapted to a new task with only a few labeled examples. Techniques like Prototypical Networks and Meta-Learning (e.g., MAML) have been developed for this setting. In Prototypical Networks, the model classifies a new example by comparing its embedding to prototypes (centroids) of the classes in the support set. Meta-Learning, on the other hand, trains the model to learn how to learn, so that it can adapt quickly to new tasks with minimal data.
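
A minimal sketch of the Prototypical Networks classification rule, assuming the embeddings have already been produced by a trained embedding network (the 2-d vectors and class names here are hypothetical). Each prototype is the mean of a class's support embeddings, and a query goes to the nearest prototype under squared Euclidean distance; during training, the method instead applies a softmax over negative distances and learns the embedding network episodically.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def compute_prototypes(support: List[Tuple[Sequence[float], str]]) -> Dict[str, List[float]]:
    """Prototype of each class = element-wise mean of its support embeddings."""
    groups: Dict[str, List[Sequence[float]]] = defaultdict(list)
    for embedding, label in support:
        groups[label].append(embedding)
    return {label: [sum(column) / len(embs) for column in zip(*embs)]
            for label, embs in groups.items()}


def squared_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(u, v))


def classify(query: Sequence[float], prototypes: Dict[str, List[float]]) -> str:
    """Assign the query to the class with the nearest prototype."""
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


support_set = [([0.0, 0.1], "cat"), ([0.2, 0.0], "cat"),
               ([1.0, 1.1], "dog"), ([0.9, 1.0], "dog")]
prototypes = compute_prototypes(support_set)
print(classify([0.1, 0.2], prototypes))  # prints cat
```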

Another advanced technique is Unsupervised Domain Adaptation, where the target domain data is unlabeled. Methods like Adversarial Discriminative Domain Adaptation (ADDA) and Deep CORAL have been proposed for this scenario. ADDA uses an adversarial framework to align the feature distributions of the source and target domains, while Deep CORAL minimizes the difference between the second-order statistics (feature covariances) of the two domains. Both methods reported clear gains over source-only baselines on standard benchmarks, which makes them attractive when labeled target data is unavailable.
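
The CORAL loss used by Deep CORAL can be sketched as ℓ = ‖C_S − C_T‖²_F / (4d²), where C_S and C_T are the d × d covariance matrices of the source and target features. This pure-Python version assumes the features are given as lists of equal-length rows; in Deep CORAL the loss is computed on a network layer's activations and added, with a weight, to the classification loss.

```python
from typing import List, Sequence


def covariance(features: List[Sequence[float]]) -> List[List[float]]:
    """Unbiased d x d covariance matrix of the rows of `features`."""
    n, d = len(features), len(features[0])
    means = [sum(row[j] for row in features) / n for j in range(d)]
    return [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in features) / (n - 1)
             for j in range(d)]
            for i in range(d)]


def coral_loss(source: List[Sequence[float]], target: List[Sequence[float]]) -> float:
    """Squared Frobenius distance between covariances, scaled by 1 / (4 d^2)."""
    d = len(source[0])
    cov_s, cov_t = covariance(source), covariance(target)
    sq_frobenius = sum((cov_s[i][j] - cov_t[i][j]) ** 2 for i in range(d) for j in range(d))
    return sq_frobenius / (4.0 * d * d)


source = [[0.0, 0.0], [1.0, 2.0], [2.0, 1.0], [3.0, 3.0]]
shifted = [[x + 5.0, y + 5.0] for x, y in source]   # same covariance, different mean
scaled = [[2.0 * x, 2.0 * y] for x, y in source]    # covariance multiplied by 4
print(coral_loss(source, shifted), coral_loss(source, scaled))
```

Adding a constant offset to the target features leaves the loss at zero: CORAL matches second-order statistics only, so a pure mean shift is invisible to it.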

Different approaches to transfer learning and domain adaptation involve trade-offs. For example, fine-tuning a pre-trained model is simple and effective but may suffer from overfitting if the new task is very different from the original task. On the other hand, domain adaptation methods like DANN and MMD-based alignment are more complex but can handle larger distribution shifts. Recent research on Conditional Domain Adaptation and Multi-Source Domain Adaptation has further expanded the capabilities of these techniques. Conditional Domain Adaptation aligns class-conditional structure that matching only the marginal feature distributions can miss, while Multi-Source Domain Adaptation leverages multiple source domains to improve adaptation to the target domain.

Comparing different methods, fine-tuning is generally the most straightforward and widely used, but it may not be sufficient for large distribution shifts. Domain adaptation methods like DANN and MMD-based alignment are more robust to distribution shifts but require more computational resources and careful tuning. Few-Shot Learning and Meta-Learning are highly effective for tasks with limited data but are more complex to implement and train. The choice of method depends on the requirements and constraints of the application, such as the availability of labeled data, the similarity between the source and target domains, and the computational resources available.

Practical Applications and Use Cases

Transfer learning and domain adaptation are widely used in practical applications, including natural language processing, computer vision, and speech recognition. In natural language processing, models like BERT and RoBERTa are pre-trained on large text corpora and then fine-tuned for specific tasks such as sentiment analysis, named entity recognition, and question answering. Large language models extend this idea: GPT-3, for example, applies its pre-trained knowledge to a wide range of language tasks, from generating text to answering questions, largely through in-context learning, where the task is described by instructions and a few examples in the prompt and the model's parameters are not updated.

In computer vision, transfer learning is used to adapt pre-trained models like VGG, ResNet, and Inception to new image classification, object detection, and segmentation tasks. For instance, Google's TensorFlow Hub provides pre-trained models that can be easily fine-tuned for custom image classification tasks. Domain adaptation is particularly useful in scenarios where the training and testing data come from different distributions, such as in medical imaging, where the model needs to generalize to different types of imaging devices or patient populations.

These techniques suit such applications because they let models leverage the vast amounts of data and computation used for pre-training, reducing the need for extensive labeled data and training time. In practice, transfer learning and domain adaptation can significantly improve the accuracy and robustness of models, especially when data is limited. For example, in medical imaging, domain adaptation has been used to adapt models trained on high-quality MRI scans to work effectively on lower-quality scans, improving diagnostic accuracy and accessibility.

Technical Challenges and Limitations

Despite their advantages, transfer learning and domain adaptation face several technical challenges and limitations. One major challenge is negative transfer, where the pre-trained model's features are unhelpful or even detrimental to the new task. This can occur when the source and target tasks or domains are too dissimilar, leading to poor performance. Another challenge is computational cost, as fine-tuning and domain adaptation can require significant resources, especially for large models and datasets. Scalability is also a concern, as some of these techniques may not scale well to very large or diverse datasets.

Additionally, domain adaptation struggles when the domain shift is large, that is, when the distribution of the target domain data differs substantially from that of the source domain. Such a shift can lead to poor performance if the model is not adequately adapted. Techniques like domain-invariant feature extraction and adversarial training help mitigate this issue, but they require careful tuning and may not always be effective. Another limitation is the lack of labeled data in the target domain, which is the defining condition of unsupervised domain adaptation. While techniques like MMD and CORAL can help, they may not fully bridge the gap between the source and target domains.

Research directions addressing these challenges include developing more robust and flexible transfer learning and domain adaptation methods, such as adaptive learning rates and dynamic network architectures. Additionally, techniques like self-supervised learning and semi-supervised learning are being explored to reduce the reliance on labeled data. For example, self-supervised learning can be used to pre-train models on large, unlabeled datasets, providing a strong foundation for fine-tuning on specific tasks. Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data, helping to improve the model's performance and robustness.
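
Pseudo-labeling (self-training) is one simple, widely used semi-supervised recipe: a model trained on the labeled data predicts on unlabeled examples, and only confident predictions are kept as extra training labels. Below is a minimal sketch of the selection step, assuming class probabilities are already available from some model; the 0.9 threshold is an illustrative choice.

```python
from typing import List, Sequence, Tuple


def select_pseudo_labels(probabilities: List[Sequence[float]],
                         threshold: float = 0.9) -> List[Tuple[int, int]]:
    """Return (example_index, predicted_class) for predictions at or above the threshold."""
    selected = []
    for idx, probs in enumerate(probabilities):
        best = max(range(len(probs)), key=lambda k: probs[k])
        if probs[best] >= threshold:
            selected.append((idx, best))
    return selected


unlabeled_probs = [[0.95, 0.05], [0.6, 0.4], [0.02, 0.98]]
print(select_pseudo_labels(unlabeled_probs))  # prints [(0, 0), (2, 1)]
```

The threshold trades quantity for quality: a lower value adds more pseudo-labeled examples but also more label noise.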

Future Developments and Research Directions

Emerging trends in transfer learning and domain adaptation include the integration of multimodal data, the use of more advanced self-supervised learning techniques, and the development of more efficient and scalable algorithms. Multimodal transfer learning, which involves combining data from multiple modalities (e.g., text, images, and audio), is gaining traction as it can provide richer and more comprehensive representations. For example, models like CLIP (Contrastive Language-Image Pre-training) learn to align text and image representations, enabling them to perform a wide range of cross-modal tasks.
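
Once text and image embeddings live in a shared space, zero-shot classification reduces to picking the caption whose embedding is most cosine-similar to the image embedding, which is a common way CLIP is used for classification. The sketch below assumes hypothetical 3-d embeddings; real CLIP embeddings come from its trained image and text encoders and have hundreds of dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_classify(image_embedding: Sequence[float],
                       text_embeddings: Dict[str, Sequence[float]]) -> Tuple[str, Dict[str, float]]:
    """Return the caption most cosine-similar to the image, plus all similarity scores."""
    image = normalize(image_embedding)
    scores = {caption: sum(a * b for a, b in zip(image, normalize(emb)))
              for caption, emb in text_embeddings.items()}
    return max(scores, key=scores.get), scores


# Hypothetical embeddings; real ones come from CLIP's image and text encoders.
captions = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.1],
}
label, scores = zero_shot_classify([0.8, 0.2, 0.1], captions)
print(label)  # prints a photo of a dog
```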

Active research directions include the development of more robust and flexible domain adaptation methods, such as conditional domain adaptation and multi-source domain adaptation. These methods aim to handle more complex and diverse scenarios, such as class-dependent, multimodal structure in the data or the availability of several source domains. Additionally, there is growing interest in online and continual learning, where models adapt to new tasks and domains in a streaming or incremental manner. This is particularly relevant in real-world applications where data and tasks are constantly changing.

Potential breakthroughs on the horizon include more efficient and interpretable transfer learning and domain adaptation methods. For example, methods from explainable AI (XAI) can help reveal how and why a model adapts to a new task or domain, providing insight into its decision-making process. This is particularly valuable in critical applications like healthcare and autonomous systems, where transparency and interpretability are essential. Industry and academic perspectives suggest that these techniques will continue to evolve, driven by the need for more efficient, robust, and versatile machine learning solutions.